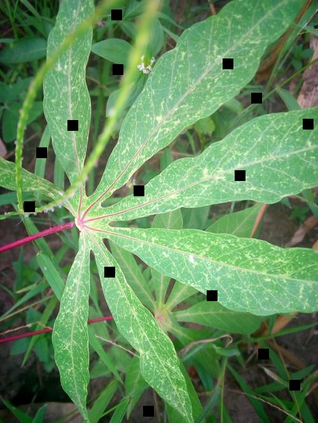

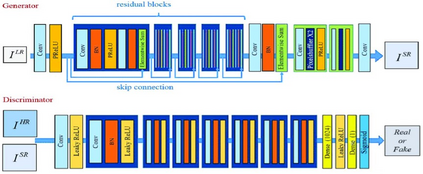

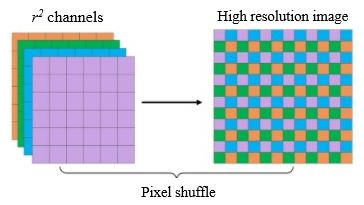

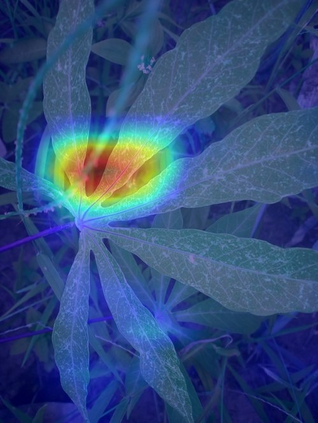

Fine-grained image classification involves distinguishing subcategories of a class that differ only in very subtle discriminative features. Fine-grained datasets usually provide bounding box annotations along with class labels to aid classification. However, building large-scale datasets with such annotations is a mammoth task: the annotation is time-consuming and often requires domain expertise, which makes it a major bottleneck. Self-supervised learning (SSL), on the other hand, exploits freely available data to generate supervisory signals that act as labels. Features learned by performing pretext tasks on large amounts of unlabelled data prove to be very helpful for multiple downstream tasks. Our idea is to leverage self-supervision so that the model learns useful representations of fine-grained image classes. We experimented with three kinds of models: jigsaw solving as a pretext task, adversarial learning (SRGAN), and contrastive learning (SimCLR). The learned features are used for downstream tasks such as fine-grained image classification. Our code is available at http://github.com/rush2406/Self-Supervised-Learning-for-Fine-grained-Image-Classification
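
To make concrete how a pretext task turns unlabelled images into labelled training pairs, the following is a minimal sketch of jigsaw sample generation in plain Python. It is an illustration under stated assumptions, not the code from the linked repository: the function names `split_into_tiles` and `make_jigsaw_sample`, the 3x3 grid, the permutation set of size 10, and the toy 6x6 "image" are all hypothetical choices made for this example.

```python
import random
from itertools import permutations


def split_into_tiles(image, grid=3):
    """Split a 2D list (height x width) into grid*grid tiles in row-major order."""
    height, width = len(image), len(image[0])
    tile_h, tile_w = height // grid, width // grid
    tiles = []
    for r in range(grid):
        for c in range(grid):
            tile = [row[c * tile_w:(c + 1) * tile_w]
                    for row in image[r * tile_h:(r + 1) * tile_h]]
            tiles.append(tile)
    return tiles


def make_jigsaw_sample(image, permutation_set, rng, grid=3):
    """Shuffle the tiles with a randomly chosen permutation.

    Returns (shuffled_tiles, label), where label is the index of the
    permutation used. No human annotation is needed: the label comes
    from the shuffling itself.
    """
    tiles = split_into_tiles(image, grid)
    label = rng.randrange(len(permutation_set))
    order = permutation_set[label]
    shuffled = [tiles[i] for i in order]
    return shuffled, label


# Assumption: a small fixed set of 10 tile orderings, sampled at random.
rng = random.Random(0)
permutation_set = rng.sample(list(permutations(range(9))), 10)

# Toy 6x6 "image" whose pixel values encode their position.
image = [[row * 6 + col for col in range(6)] for row in range(6)]
shuffled_tiles, label = make_jigsaw_sample(image, permutation_set, rng)
```

A network trained on such pairs receives the shuffled tiles and predicts `label`, so solving the puzzle becomes an ordinary classification problem over the permutation set. The encoder learned this way can then be reused for the downstream fine-grained classifier.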
