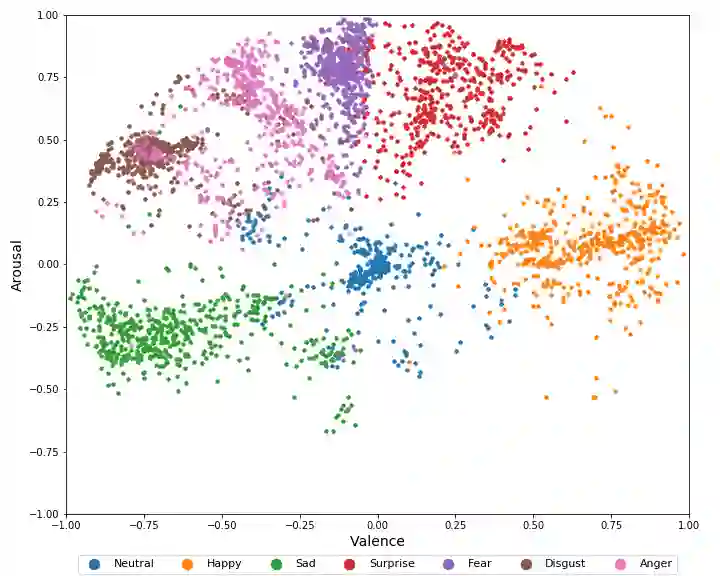

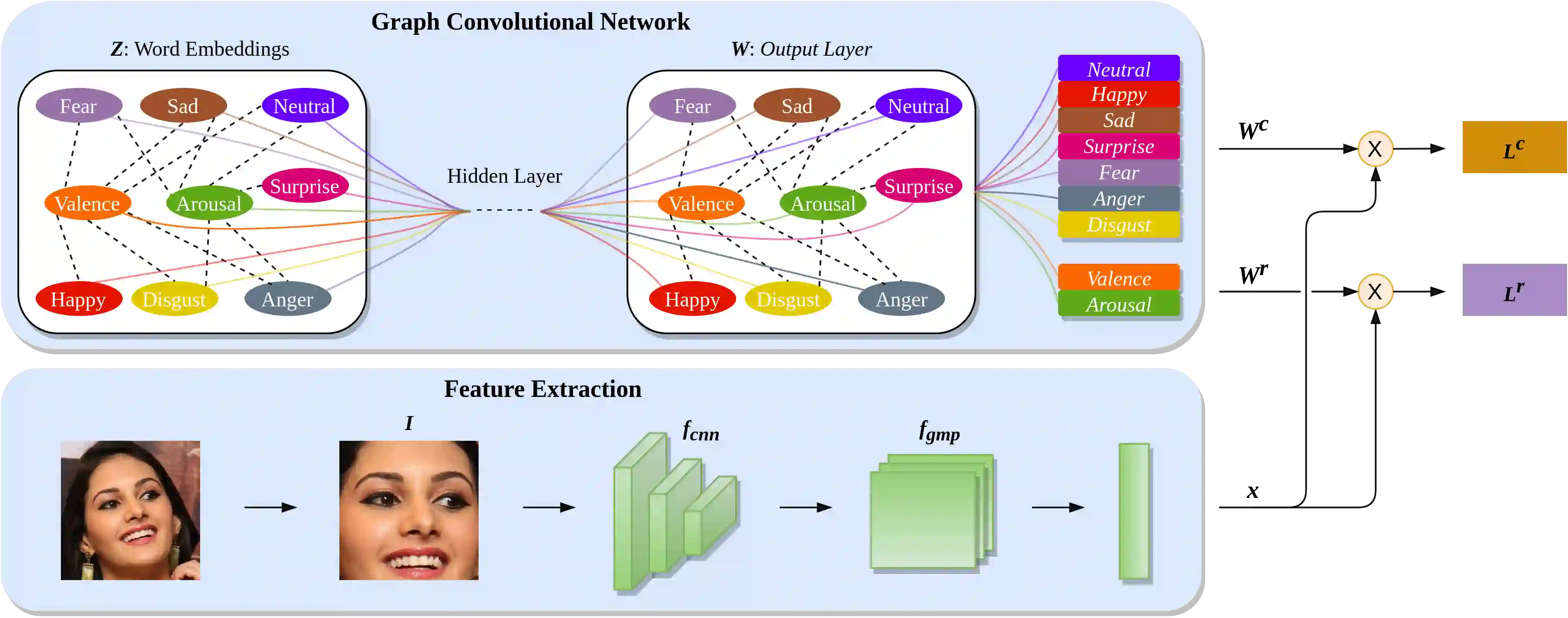

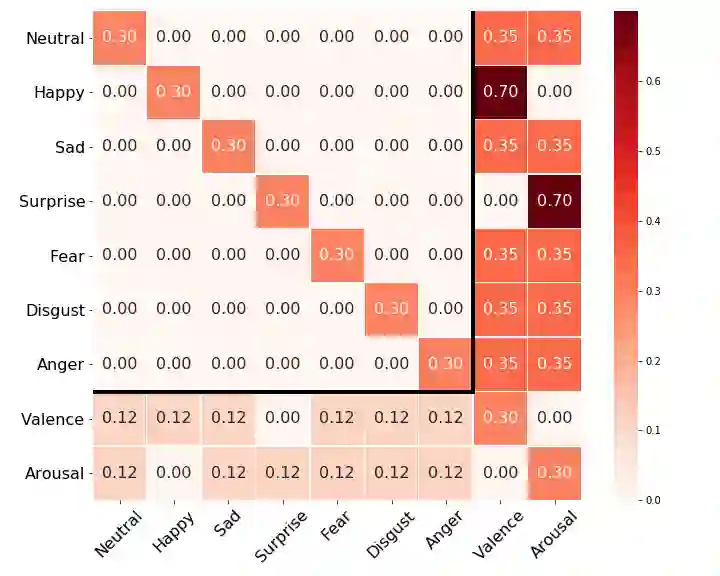

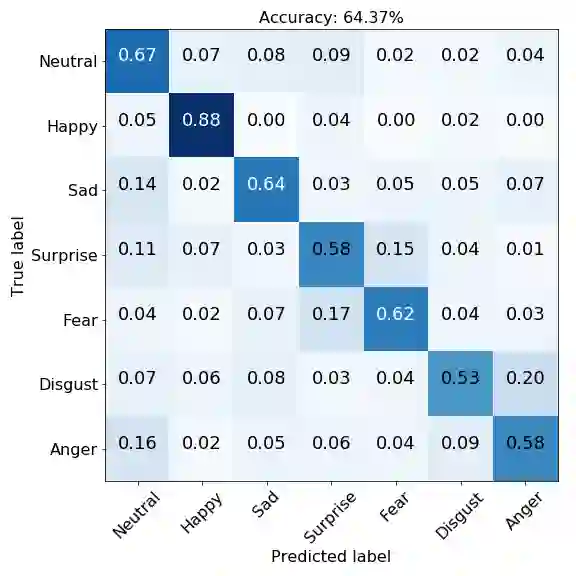

Over the past few years, deep learning methods have shown remarkable results in many face-related tasks, including automatic facial expression recognition (FER) in-the-wild. Meanwhile, the psychology community has proposed numerous models describing human emotional states. However, there is no clear evidence as to which representation is more appropriate, and the majority of FER systems use either the categorical or the dimensional model of affect. Inspired by recent work in multi-label classification, this paper proposes a novel multi-task learning (MTL) framework that exploits the dependencies between these two models using a Graph Convolutional Network (GCN) to recognize facial expressions in-the-wild. Specifically, a shared feature representation is learned for both discrete and continuous recognition in an MTL setting. Moreover, the facial expression classifiers and the valence-arousal regressors are learned through a GCN that explicitly captures the dependencies between them. To evaluate the performance of our method under real-world conditions, we perform extensive experiments on the AffectNet and Aff-Wild2 datasets. The results show that our method can improve performance across different datasets and backbone architectures. Finally, we also surpass previous state-of-the-art methods on the categorical model of AffectNet.
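
To illustrate how a single GCN can produce both the expression classifiers and the valence-arousal regressors, the sketch below follows the general pattern of GCN-based multi-label classifiers. Each label (every expression category, plus one node each for valence and arousal) is a graph node. A two-layer GCN propagates information along a label-dependency graph, and each output node becomes a weight vector applied to the shared image feature. This is a minimal, untrained sketch under our own assumptions, not the paper's implementation. The node embeddings, the adjacency matrix, the layer sizes, the LeakyReLU slope, the tanh squashing of valence and arousal, and every helper name (`GCNHeads`, `normalize_adjacency`, `random_matrix`, etc.) are hypothetical.

```python
import math
import random
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python product of an (n x k) and a (k x m) matrix."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def normalize_adjacency(adj: Matrix) -> Matrix:
    """Symmetric GCN normalization: D^{-1/2} (A + I) D^{-1/2}."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    # With non-negative edge weights, self-loops guarantee every degree is >= 1.
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def leaky_relu(m: Matrix, slope: float = 0.2) -> Matrix:
    return [[x if x > 0 else slope * x for x in row] for row in m]


def random_matrix(rows: int, cols: int, rng: random.Random, scale: float = 0.1) -> Matrix:
    """Small Gaussian initialization (these weights are never trained here)."""
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


class GCNHeads:
    """Hypothetical two-layer GCN that turns label-node embeddings into heads.

    Nodes 0..K-1 become expression classifiers; the last two nodes become
    the valence and arousal regressors.
    """

    def __init__(self, node_emb: Matrix, adj: Matrix, hidden_dim: int, feat_dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.node_emb = node_emb                # (num_nodes x emb_dim) label embeddings
        self.a_norm = normalize_adjacency(adj)  # (num_nodes x num_nodes) label dependencies
        self.w1 = random_matrix(len(node_emb[0]), hidden_dim, rng)
        self.w2 = random_matrix(hidden_dim, feat_dim, rng)

    def node_weights(self) -> Matrix:
        # Layer 1: H = LeakyReLU(A_norm X W1)
        h = leaky_relu(matmul(matmul(self.a_norm, self.node_emb), self.w1))
        # Layer 2: W = A_norm H W2, one feat_dim-sized weight vector per node
        return matmul(matmul(self.a_norm, h), self.w2)

    def forward(self, feature: List[float]) -> Tuple[List[float], float, float]:
        """Apply every node's weight vector to one shared image feature."""
        scores = [sum(w * f for w, f in zip(row, feature)) for row in self.node_weights()]
        logits = scores[:-2]             # expression logits (softmax omitted)
        valence = math.tanh(scores[-2])  # squashed to [-1, 1]
        arousal = math.tanh(scores[-1])
        return logits, valence, arousal


if __name__ == "__main__":
    num_expr = 3    # toy number of expression categories
    n = num_expr + 2  # plus the valence and arousal nodes
    rng = random.Random(1)
    node_emb = random_matrix(n, 4, rng, scale=1.0)  # stand-in for label embeddings
    # Toy dependency graph: each expression node is linked to valence and arousal.
    adj = [[0.0] * n for _ in range(n)]
    for i in range(num_expr):
        for j in (n - 2, n - 1):
            adj[i][j] = adj[j][i] = 1.0
    heads = GCNHeads(node_emb, adj, hidden_dim=6, feat_dim=8)
    logits, valence, arousal = heads.forward([0.5] * 8)  # stand-in backbone feature
    print(len(logits), round(valence, 3), round(arousal, 3))
```

In a real system the shared feature would come from the CNN backbone. The GCN weights would presumably be trained jointly with it, using a classification loss on the logits and a regression loss on valence and arousal. Both are omitted here. Generating the heads from a graph, rather than learning independent linear layers, is what lets the categorical and dimensional outputs share information through the adjacency matrix.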

翻译:过去几年来,深层次的学习方法在许多与面相有关的任务中表现出了显著的成果,包括脸部表现识别(FER)在瞬间自动出现。与此同时,心理学界提出了许多描述人类情感状态的模式。然而,我们没有明确的证据表明哪些代表更合适,大部分FER系统使用直线或维维模式。在近期多标签分类工作中的启发下,本文件提出一个新的多任务学习框架,利用这两个模型之间的依赖性,利用图表革命网络(GCN)来识别眼部的面部表现。具体地说,在MTL环境中,为独立和连续的承认学习了共同的特征表现。此外,面部表达分类和价值激励者通过一个明确反映它们之间依赖性的GCN来学习。为了评估我们的方法在现实世界条件下的绩效,我们在AffectNet和Aff-Wild2数据集上进行了广泛的实验。我们以往的实验结果显示,我们在MTL环境中也能够改进不同数据结构的性能。