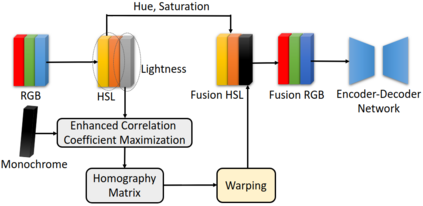

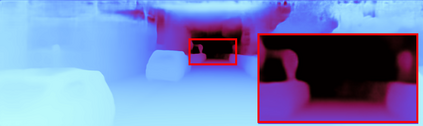

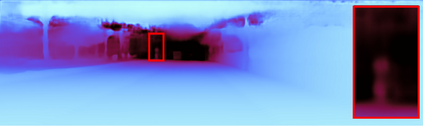

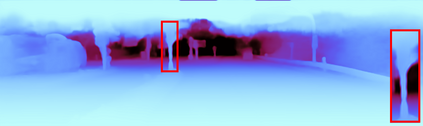

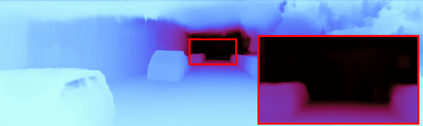

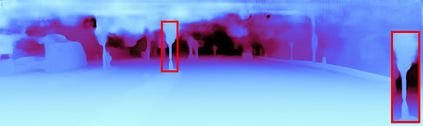

Depth estimation plays an important role in SLAM, odometry, and autonomous driving. Monocular depth estimation in particular is an attractive technology because of its low cost, memory footprint, and computational requirements. However, it often fails to predict a sufficiently accurate depth map, because a camera frequently cannot capture a clean image under poor lighting conditions. To solve this problem, various sensor fusion methods have been proposed. Although powerful, sensor fusion requires expensive sensors, additional memory, and high computational performance. In this paper, we present pixel-level fusion of color and monochrome images, together with stereo matching with partially enhanced correlation coefficient maximization. Our methods not only outperform state-of-the-art works across all metrics but are also efficient in terms of cost, memory, and computation. We also validate the effectiveness of our design with an ablation study.
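
For readers unfamiliar with the enhanced correlation coefficient (ECC) criterion named in the abstract, the following is a minimal sketch of the general idea, not the paper's partial ECC method. It assumes two single-channel images of equal size and a purely translational misalignment; the function names `ecc` and `best_shift` and the brute-force integer search are illustrative assumptions, whereas practical ECC alignment typically uses iterative gradient-based optimization over a parametric warp.

```python
import numpy as np


def ecc(reference, candidate):
    """Correlation coefficient between two equally sized images.

    Both images are flattened and made zero-mean; the score is the cosine
    of the angle between the resulting vectors, so it lies in [-1, 1].
    """
    r = reference.astype(np.float64).ravel()
    c = candidate.astype(np.float64).ravel()
    r = r - r.mean()
    c = c - c.mean()
    denom = np.linalg.norm(r) * np.linalg.norm(c)
    return float(r @ c / denom) if denom > 0 else 0.0


def best_shift(reference, moving, max_shift=4):
    """Brute-force search for the integer (dy, dx) that maximizes ECC.

    Hypothetical helper: a fixed central crop of `reference` is compared
    with shifted crops of `moving`, so every comparison uses real pixels
    and no border padding is needed.
    """
    h, w = reference.shape
    m = max_shift
    ref_crop = reference[m:h - m, m:w - m]
    best_score, best_offset = -np.inf, (0, 0)
    for dy in range(-m, m + 1):
        for dx in range(-m, m + 1):
            mov_crop = moving[m + dy:h - m + dy, m + dx:w - m + dx]
            score = ecc(ref_crop, mov_crop)
            if score > best_score:
                best_score, best_offset = score, (dy, dx)
    return best_score, best_offset
```

Because the score is normalized by the zero-mean norms, it is unaffected by gain and offset changes in brightness, which is one reason an ECC-style criterion is a natural fit for comparing a color image with a monochrome one captured under different exposure.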
