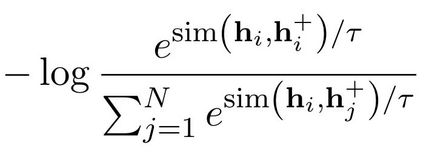

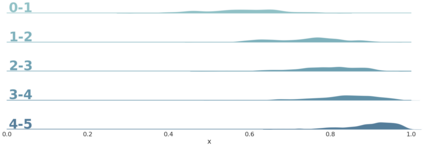

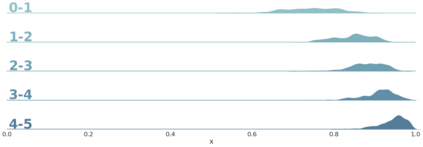

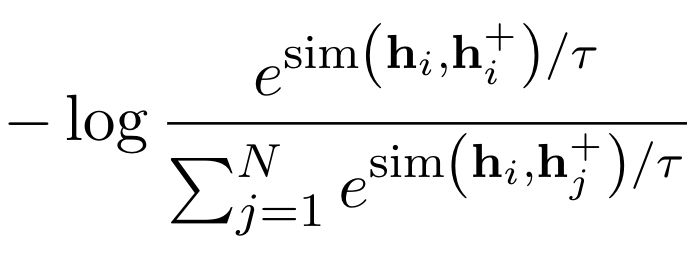

We propose DiffCSE, an unsupervised contrastive learning framework for learning sentence embeddings. DiffCSE learns sentence embeddings that are sensitive to the difference between the original sentence and an edited sentence, where the edited sentence is obtained by stochastically masking out the original sentence and then sampling from a masked language model. We show that DiffCSE is an instance of equivariant contrastive learning (Dangovski et al., 2021), which generalizes contrastive learning and learns representations that are insensitive to certain types of augmentations and sensitive to other "harmful" types of augmentations. Our experiments show that DiffCSE achieves state-of-the-art results among unsupervised sentence representation learning methods, outperforming unsupervised SimCSE by 2.3 absolute points on semantic textual similarity tasks.
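The editing step described above (stochastic masking followed by sampling replacements) can be illustrated with a small sketch. This is not the authors' implementation: the function names, the `mask_prob` default, and especially `toy_fill`, which picks random vocabulary words in place of a real masked language model, are all assumptions made for illustration.

```python
import random

MASK = "[MASK]"  # placeholder mask symbol (assumed for this sketch)


def mask_tokens(tokens, mask_prob, rng):
    """Replace each token with MASK independently with probability mask_prob."""
    return [MASK if rng.random() < mask_prob else tok for tok in tokens]


def toy_fill(masked, vocab, rng):
    """Hypothetical stand-in for sampling from a masked language model.

    A real system would condition on the unmasked context; this toy
    version just draws a random vocabulary word for every MASK position.
    """
    return [rng.choice(vocab) if tok == MASK else tok for tok in masked]


def edit_sentence(tokens, vocab, mask_prob=0.3, seed=0):
    """Return (masked, edited, changed) for one sentence.

    `changed[i]` is True where the edited token differs from the original,
    i.e. the kind of difference the embedding is meant to be sensitive to.
    """
    rng = random.Random(seed)
    masked = mask_tokens(tokens, mask_prob, rng)
    edited = toy_fill(masked, vocab, rng)
    changed = [o != e for o, e in zip(tokens, edited)]
    return masked, edited, changed


if __name__ == "__main__":
    sentence = "the cat sat on the mat".split()
    vocab = ["dog", "ran", "under", "a", "rug", "cat", "the"]
    masked, edited, changed = edit_sentence(sentence, vocab, mask_prob=0.3, seed=1)
    print(masked)
    print(edited)
    print(changed)
```

Note that a sampled replacement can coincide with the original token, so `changed` marks only the positions where the edit actually altered the sentence, not every masked position.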
