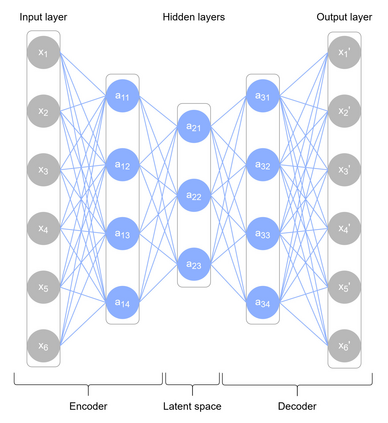

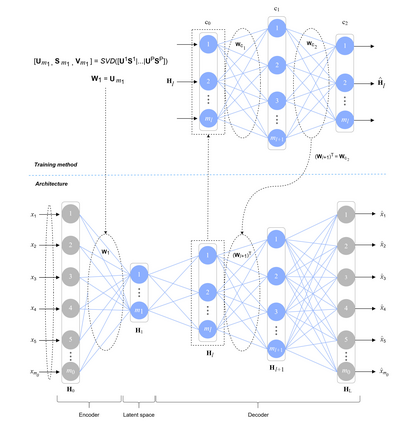

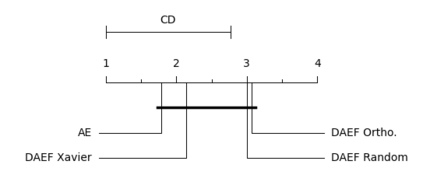

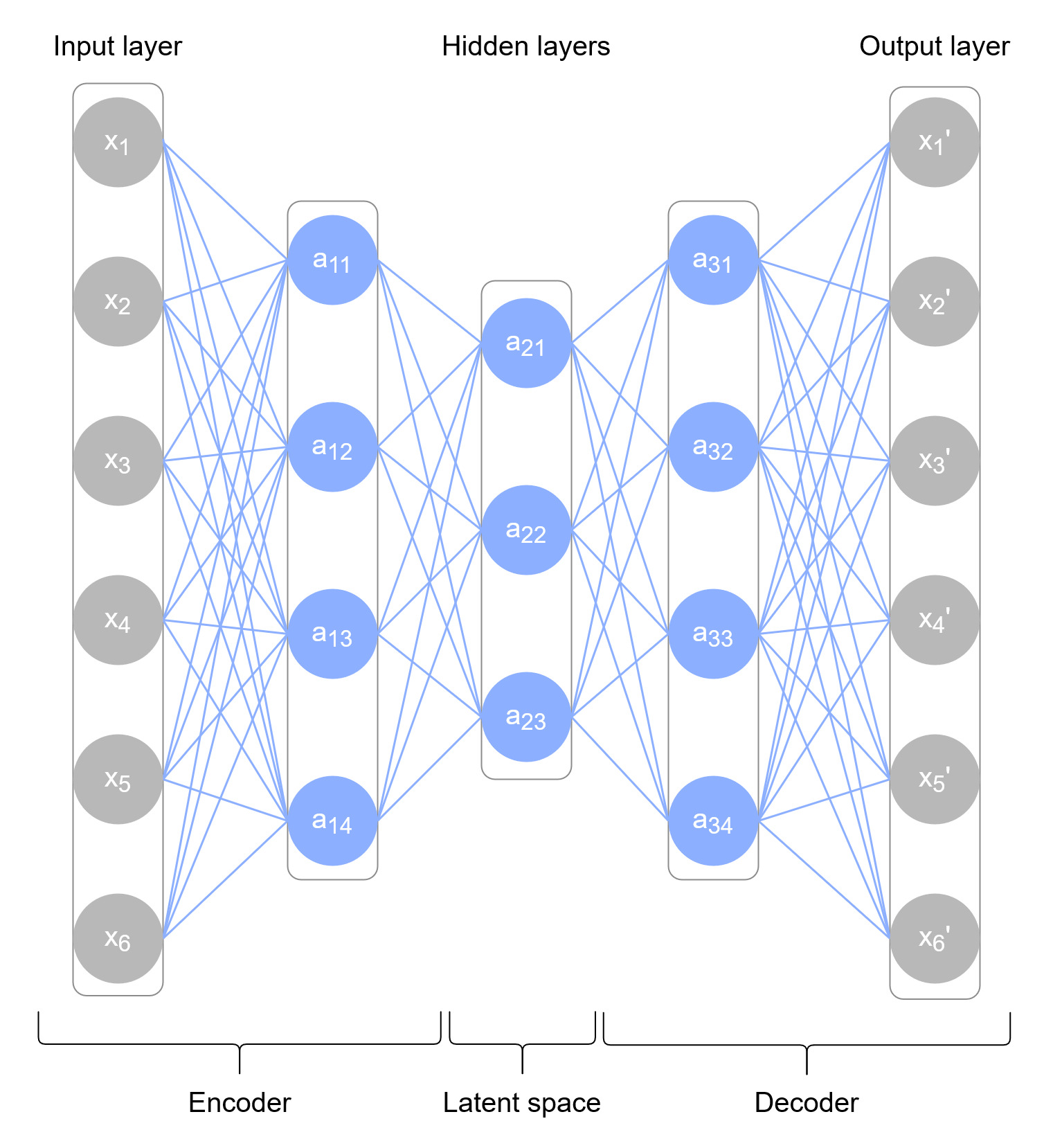

This paper presents a novel, fast, and privacy-preserving implementation of deep autoencoders. Unlike traditional neural networks, DAEF (Deep Autoencoder for Federated learning) trains a deep autoencoder network non-iteratively, which drastically reduces its training time. Its training can be carried out in a distributed way (several partitions of the dataset in parallel) and incrementally (aggregation of partial models), and, due to its mathematical formulation, the data that is exchanged does not endanger the privacy of the users. This makes DAEF a valid method for edge computing and federated learning scenarios. The method has been evaluated and compared to traditional (iterative) deep autoencoders on seven real anomaly detection datasets, and the two have been shown to perform similarly, despite DAEF's faster training.
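
The abstract does not give DAEF's equations. As a hypothetical illustration of why closed-form (non-iterative) training lends itself to distributed and incremental fitting, the sketch below fits a single linear layer, for instance a decoder mapping hidden activations `H` to reconstruction targets `T`, by regularized least squares. Each partition computes only the sums `H^T H` and `H^T T`. These can be added up in any order, so partitions can be processed in parallel and new ones folded in later. The names `local_stats`, `aggregate`, `solve_ridge` and the ridge term `lam` are assumptions made for this example. This is a generic technique, not the paper's actual formulation, and it is not offered as a privacy guarantee.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Stats = Tuple[Matrix, Matrix]


def matmul_t(A: Matrix, B: Matrix) -> Matrix:
    """Return A^T B for A (n x d) and B (n x m)."""
    d, m = len(A[0]), len(B[0])
    out = [[0.0] * m for _ in range(d)]
    for a_row, b_row in zip(A, B):
        for i in range(d):
            for j in range(m):
                out[i][j] += a_row[i] * b_row[j]
    return out


def local_stats(H: Matrix, T: Matrix) -> Stats:
    """Hypothetical per-partition summary: Gram matrix H^T H and cross term H^T T."""
    return matmul_t(H, H), matmul_t(H, T)


def aggregate(stats: List[Stats]) -> Stats:
    """Sum partial statistics; summation is order-independent, so partial
    models can be merged incrementally. Assumes a non-empty list."""
    G_sum = [row[:] for row in stats[0][0]]
    C_sum = [row[:] for row in stats[0][1]]
    for G, C in stats[1:]:
        for i in range(len(G)):
            for j in range(len(G[0])):
                G_sum[i][j] += G[i][j]
            for j in range(len(C[0])):
                C_sum[i][j] += C[i][j]
    return G_sum, C_sum


def solve_ridge(G: Matrix, C: Matrix, lam: float = 1e-3) -> Matrix:
    """Solve (G + lam*I) W = C in closed form (Gauss-Jordan, partial pivoting)."""
    d = len(G)
    # Augmented matrix [G + lam*I | C]; G and C are not modified.
    M = [G[i][:] + C[i][:] for i in range(d)]
    for i in range(d):
        M[i][i] += lam
    for col in range(d):
        pivot = max(range(col, d), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        for r in range(d):
            if r != col and M[r][col] != 0.0:
                f = M[r][col]
                M[r] = [a - f * b for a, b in zip(M[r], M[col])]
    return [row[d:] for row in M]


# Toy data (T = 2*h0 + 3*h1) split across two partitions.
H1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
T1 = [[2.0], [3.0], [5.0]]
H2 = [[2.0, 1.0], [1.0, 2.0]]
T2 = [[7.0], [8.0]]

s1, s2 = local_stats(H1, T1), local_stats(H2, T2)
W_fed = solve_ridge(*aggregate([s1, s2]))                  # from shared sums only
W_central = solve_ridge(*local_stats(H1 + H2, T1 + T2))    # from pooled raw data
```

Because the merged statistics are exactly the statistics of the pooled data, `W_fed` matches `W_central` up to floating-point error. A later partition can be merged into an already aggregated pair with `aggregate([previous, new])` without revisiting earlier data.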

翻译:与传统的神经网络不同,DAEF(为联邦学习的深海自动编码器)以非直线方式培训一个深自动编码器网络,这大大减少了培训时间,其培训可以分散进行(同时对数据集进行数个分割),并逐步(合并部分模型)进行,而且由于其数学的编制,所交换的数据不会危及用户的隐私。这使得DAEF成为边际计算和联合学习情景的有效方法。该方法经过评估,并与使用7个实际异常检测数据集的传统(直线)深自动编码器进行比较,尽管DAEF培训速度更快,其性能也证明类似。