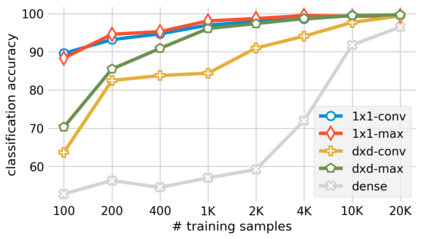

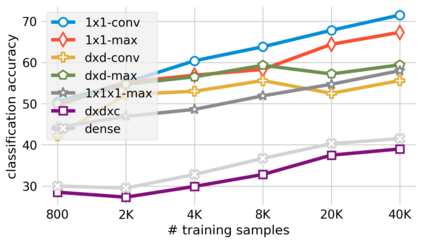

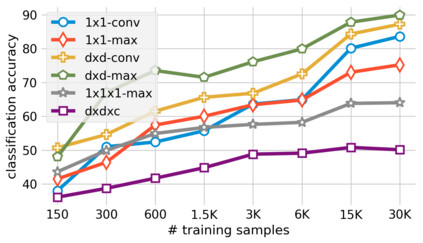

This paper is the first to explore an automatic way to detect bias in deep convolutional neural networks by simply looking at their weights. It is also a step towards understanding how neural networks work. We show that it is indeed possible to determine whether a model is biased simply by inspecting its weights, without running inference on any specific input. We analyze how bias is encoded in the weights of deep networks through a toy example using the Colored MNIST database, and we also provide a realistic case study on gender detection from face images using state-of-the-art methods and experimental resources. To do so, we generated two databases containing 36K and 48K biased models, respectively. For the MNIST models, we were able to detect whether they presented strong or low bias with more than 99% accuracy, and to classify between four levels of bias with more than 70% accuracy. For the face models, we achieved 90% accuracy in distinguishing between models biased towards Asian, Black, or Caucasian ethnicity.
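The core idea, treating a trained model's weights as the input to a second classifier, can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the helper names (`flatten_weights`, `fit_centroids`, `predict_bias`), the toy weight values, and the nearest-centroid rule, which is only a simple stand-in and not the detector used in the paper.

```python
from typing import Dict, List

def flatten_weights(layers) -> List[float]:
    """Recursively flatten nested lists of weights into one feature vector."""
    flat: List[float] = []
    for item in layers:
        if isinstance(item, (list, tuple)):
            flat.extend(flatten_weights(item))
        else:
            flat.append(float(item))
    return flat

def fit_centroids(features: List[List[float]], labels: List[str]) -> Dict[str, List[float]]:
    """Average the weight vectors of all models sharing the same bias label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for x, y in zip(features, labels):
        if y not in sums:
            sums[y] = [0.0] * len(x)
            counts[y] = 0
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}

def predict_bias(centroids: Dict[str, List[float]], x: List[float]) -> str:
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    def sqdist(a: List[float], b: List[float]) -> float:
        return sum((p - q) ** 2 for p, q in zip(a, b))
    return min(centroids, key=lambda y: sqdist(centroids[y], x))

# Hypothetical toy "models": each is a list of layers with made-up weights.
train_models = [
    [[0.9, 0.8], [0.7]],  # labeled as strongly biased (illustrative only)
    [[1.0, 0.9], [0.8]],
    [[0.1, 0.2], [0.0]],  # labeled as low bias (illustrative only)
    [[0.2, 0.1], [0.1]],
]
train_labels = ["strong", "strong", "low", "low"]

centroids = fit_centroids([flatten_weights(m) for m in train_models], train_labels)

# The detector sees only the weights of an unseen model; no input image is needed.
unseen_model = [[0.8, 0.9], [0.6]]
predicted = predict_bias(centroids, flatten_weights(unseen_model))
```

The point of the sketch is the data flow rather than the classifier: the bias label is predicted from the flattened weights alone, so no inference on the inspected model is ever run.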
