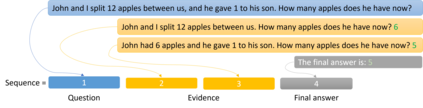

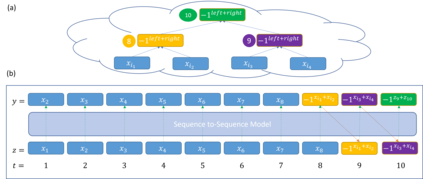

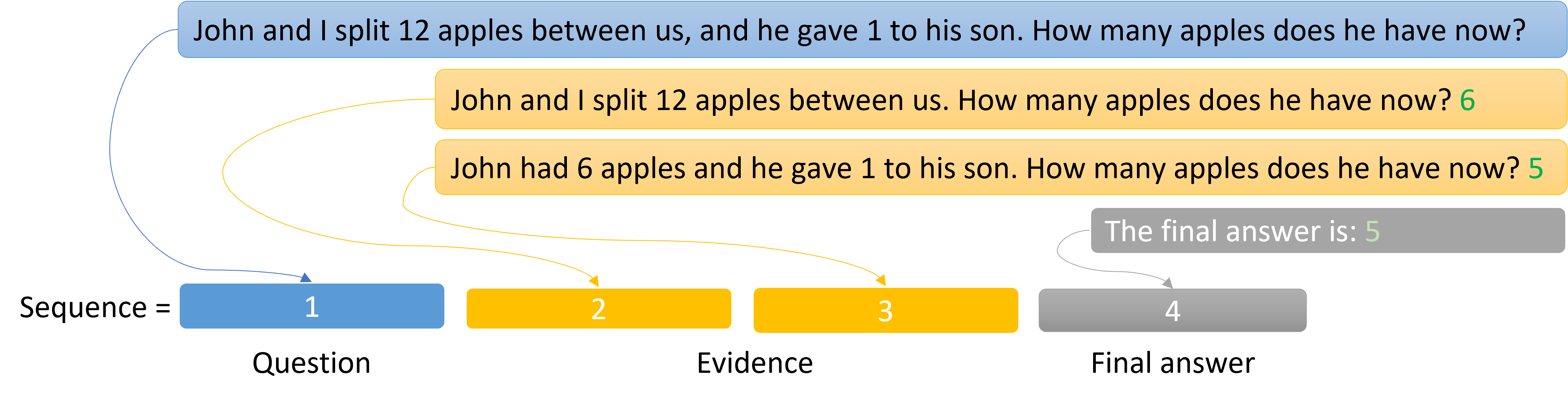

The field of Natural Language Processing has experienced a dramatic leap in capabilities with the recent introduction of huge Language Models (LMs). Despite this success, natural language problems that involve several compounded steps remain practically unlearnable, even by the largest LMs. This is consistent with experimental failures of end-to-end learning on composite problems, which have been demonstrated in a variety of domains. An effective mitigation is to introduce intermediate supervision for solving sub-tasks of the compounded problem. Recently, several works have demonstrated high gains by taking a straightforward approach to incorporating intermediate supervision in compounded natural language problems: the sequence-to-sequence LM is fed an augmented input, in which the decomposed tasks' labels are simply concatenated to the original input. In this paper, we prove a positive learning result that motivates these recent efforts. We show that when intermediate supervision is concatenated to the input and a sequence-to-sequence model is trained on this modified input, unlearnable composite problems can become learnable. We show that this holds for any family of tasks that, on the one hand, are unlearnable and, on the other hand, can be decomposed into a polynomial number of simple sub-tasks, each of which depends only on O(1) previous sub-task results. Beyond motivating contemporary empirical efforts to incorporate intermediate supervision in sequence-to-sequence language models, our positive theoretical result is the first of its kind in the landscape of results on the benefits of intermediate supervision for neural-network learning. Until now, all theoretical results on the subject have been negative, i.e., they show cases where learning is impossible without intermediate supervision. Our result is positive: it shows that learning is facilitated in the presence of intermediate supervision.
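To make the augmented-input idea concrete, here is a minimal Python sketch of how sub-task labels might be concatenated to the original input. The choice of task (parity of a non-empty bit string, decomposed into running parities), the separator token `SEP`, and all function names are assumptions made for illustration; the abstract does not specify them. Each running parity depends on one input bit and one previous sub-task result, matching the O(1)-dependency condition described above.

```python
from typing import Iterator, List, Tuple

SEP = "="  # hypothetical separator token between the input and the labels


def running_parities(bits: List[int]) -> List[int]:
    """Sub-task labels: parity of the first k bits, for k = 1..len(bits)."""
    labels, acc = [], 0
    for b in bits:
        acc ^= b  # each step uses one input bit and one previous result
        labels.append(acc)
    return labels


def end_to_end_example(bits: List[int]) -> Tuple[str, str]:
    """Plain (source, target) pair: only the final label is supervised.

    Assumes a non-empty bit list.
    """
    source = " ".join(map(str, bits))
    return source, str(running_parities(bits)[-1])


def teacher_forced_pairs(bits: List[int]) -> Iterator[Tuple[str, str]]:
    """Yield (augmented input, next label) pairs with intermediate supervision."""
    prefix = " ".join(map(str, bits)) + " " + SEP
    for label in running_parities(bits):
        yield prefix, str(label)
        prefix += " " + str(label)  # concatenate the label to the input


if __name__ == "__main__":
    for src, tgt in teacher_forced_pairs([1, 0, 1, 1]):
        print(f"{src!r} -> {tgt!r}")
```

In this sketch, `end_to_end_example` supervises only the final answer, whereas `teacher_forced_pairs` supervises every intermediate step, with the last pair's target being the final answer. Any sequence-to-sequence training loop could consume these pairs; none is shown here because the abstract does not describe one.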
