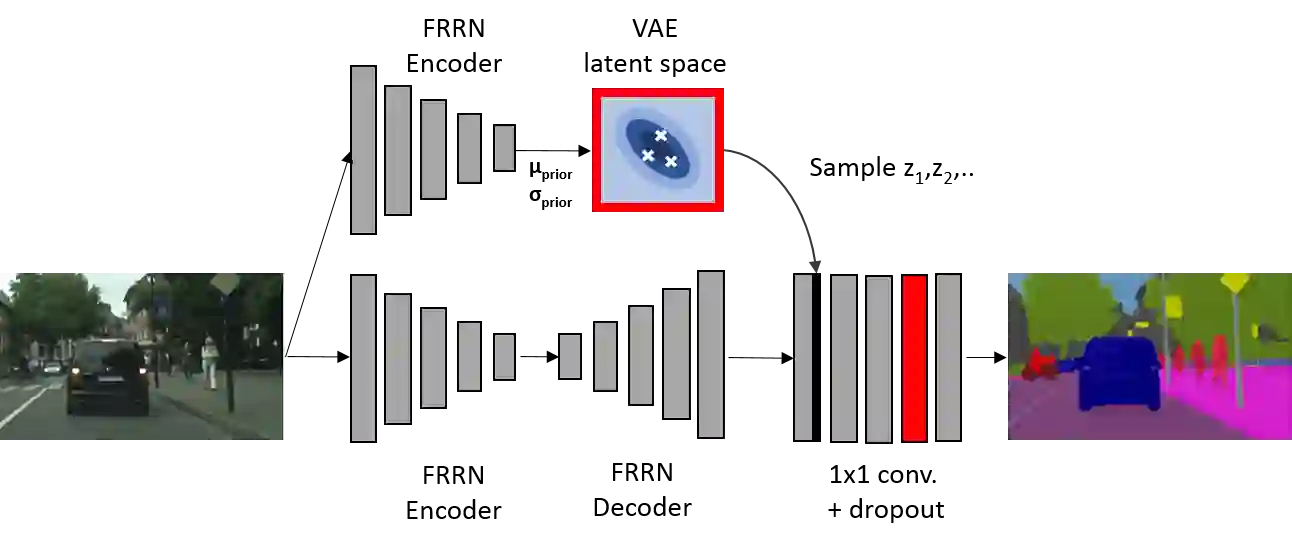

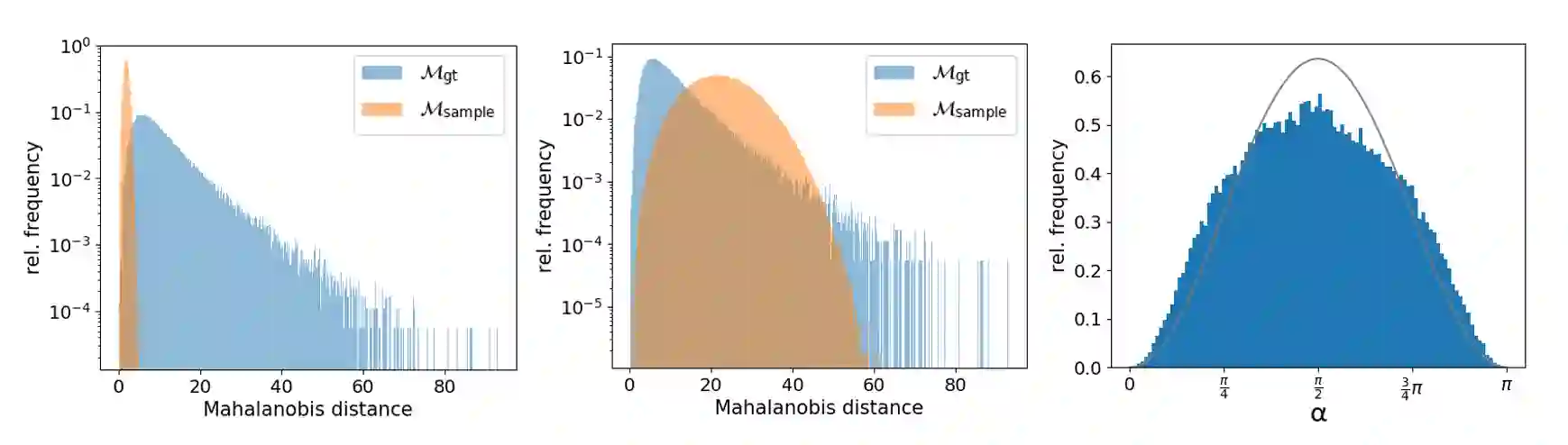

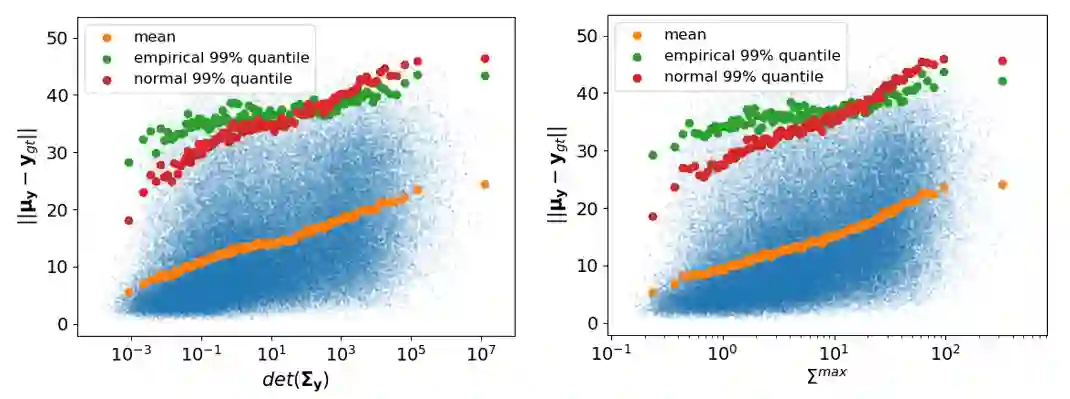

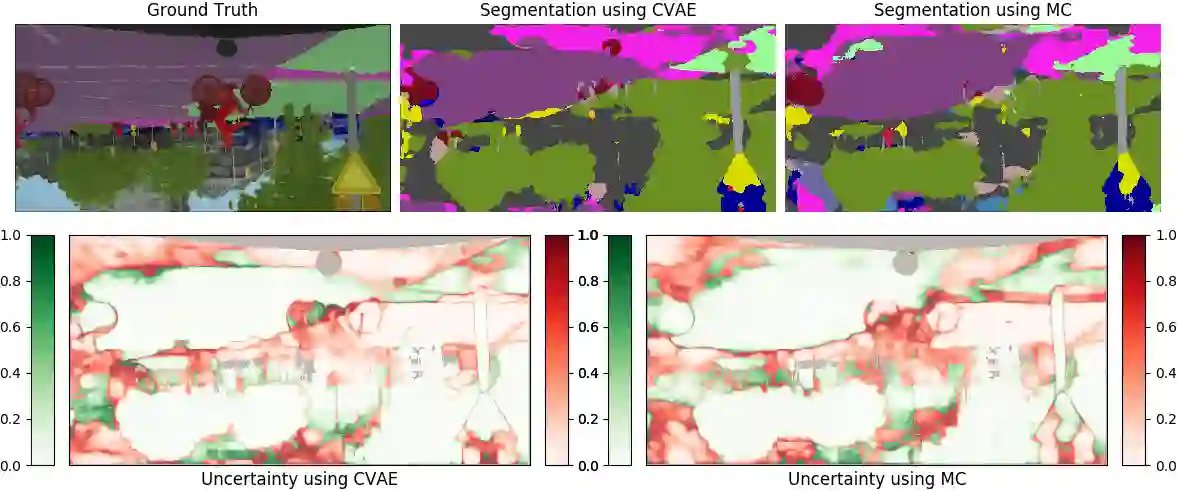

Statistical models are inherently uncertain. Quantifying or at least upper-bounding their uncertainties is vital for safety-critical systems such as autonomous vehicles. While standard neural networks do not report this information, several approaches exist to integrate uncertainty estimates into them. Assessing the quality of these uncertainty estimates is not straightforward, as no direct ground-truth labels are available. Instead, implicit statistical assessments are required. For regression, we propose to evaluate uncertainty realism, a strict quality criterion, with a Mahalanobis distance-based statistical test. An empirical evaluation reveals the need for uncertainty measures that can appropriately upper-bound heavy-tailed empirical errors. In addition, we transfer the variational U-Net classification architecture to standard supervised image-to-image tasks. We adapt it to the automotive domain and show that it significantly improves uncertainty realism compared to a plain encoder-decoder model.
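To make the idea of a Mahalanobis distance-based realism check concrete, the following is a minimal sketch and not the test proposed in the paper. It assumes Gaussian errors and a diagonal predicted covariance (one variance per output dimension), in which case the squared Mahalanobis distance of an error vector follows a chi-squared distribution whose mean equals the number of dimensions. All function names, the simulated data, and the mean-based summary statistic are hypothetical choices made for illustration.

```python
import random
from statistics import fmean


def squared_mahalanobis(error, variance):
    """Squared Mahalanobis distance of one error vector.

    Assumption: the predicted covariance is diagonal, so it is given
    as a list of per-dimension variances.
    """
    return sum(e * e / v for e, v in zip(error, variance))


def mean_normalized_distance(errors, variances):
    """Average of (squared distance / dimension) over all samples.

    Under Gaussian errors with correctly predicted variances this value
    is close to 1; values well above 1 indicate underestimated
    uncertainty, values well below 1 indicate overestimated uncertainty.
    """
    ratios = [
        squared_mahalanobis(e, v) / len(e) for e, v in zip(errors, variances)
    ]
    return fmean(ratios)


def simulate(true_std, predicted_std, n_samples=5000, dim=4, seed=0):
    """Draw synthetic Gaussian errors and score a constant predicted std."""
    rng = random.Random(seed)
    errors = [
        [rng.gauss(0.0, true_std) for _ in range(dim)]
        for _ in range(n_samples)
    ]
    variances = [[predicted_std ** 2] * dim for _ in range(n_samples)]
    return mean_normalized_distance(errors, variances)


# Predicted uncertainty matches the true error spread.
realistic = simulate(true_std=1.0, predicted_std=1.0)
# Predicted uncertainty is half the true error spread (overconfident model).
overconfident = simulate(true_std=2.0, predicted_std=1.0)
```

A full statistical test would compare the whole empirical distribution of squared distances against the chi-squared reference rather than only its mean; the mean is used here to keep the sketch dependency-free. Heavy-tailed errors, as discussed in the abstract, would violate the Gaussian assumption behind this reference distribution.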
