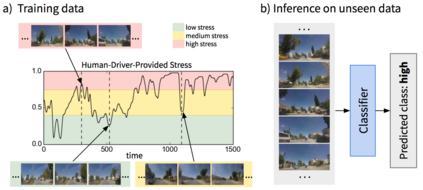

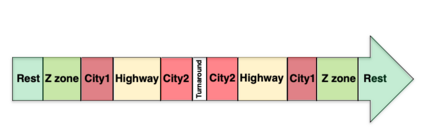

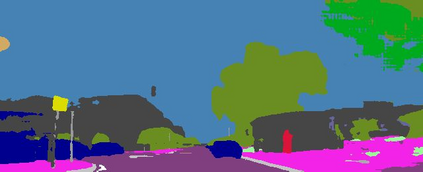

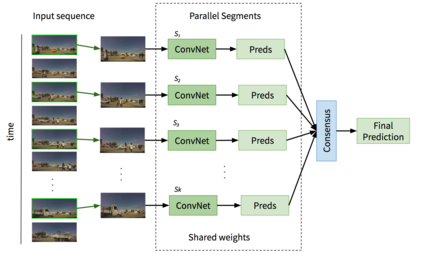

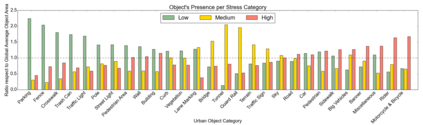

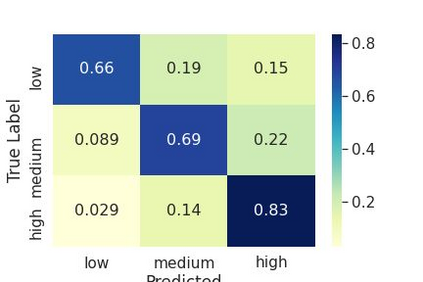

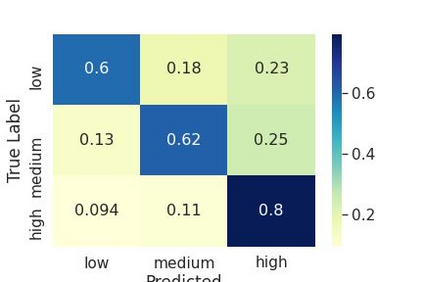

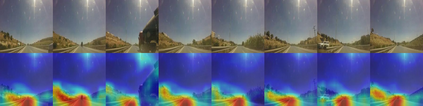

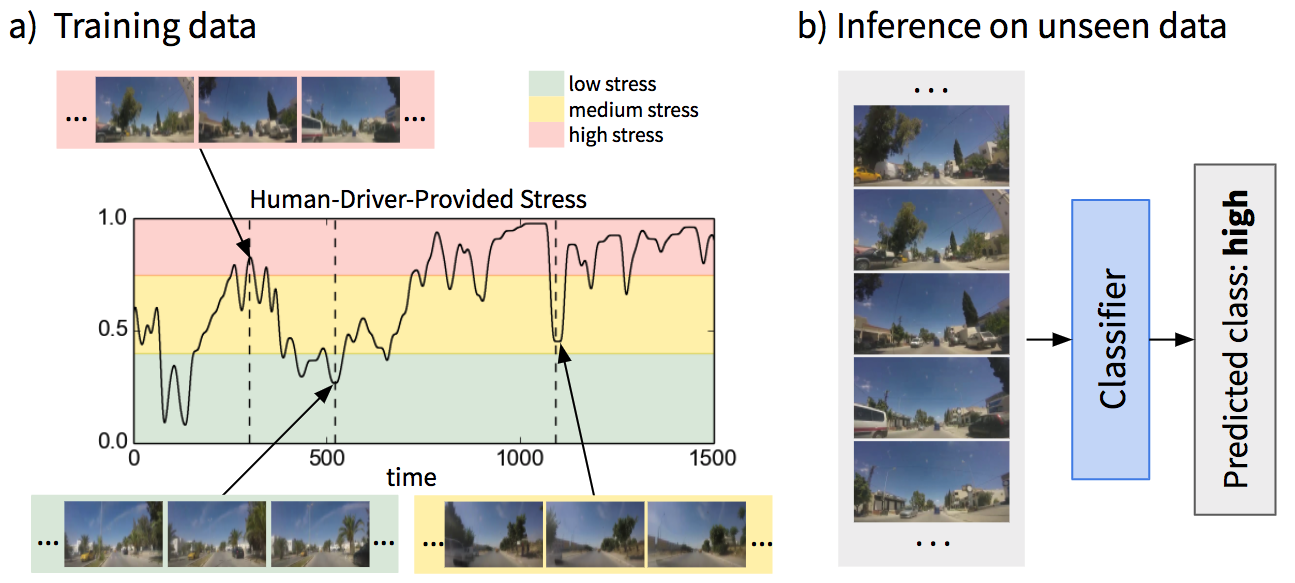

Several studies have shown the relevance of biosignals in driver stress recognition. In this work, we examine a less frequently explored question: we develop methods to test whether the visual driving scene can be used to estimate a driver's subjective stress level. For this purpose, we use the AffectiveROAD video recordings and their corresponding stress labels, a continuous stress metric provided by the human drivers. Following the common discretization, we divide the continuous stress values into three classes: low, medium, and high. We design and evaluate three computer vision modeling approaches to classify the driver's stress level: (1) object presence features, computed using automatic scene segmentation; (2) end-to-end image classification; and (3) end-to-end video classification. All three approaches show promising results, suggesting that the drivers' subjective stress can be approximated from the information in the visual scene. Video classification, which processes temporal information together with visual information, obtains the highest accuracy, $0.72$, compared with a random baseline accuracy of $0.33$ when tested on a set of nine drivers.
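The abstract does not state the class thresholds or the segmentation label set, so the following is only an illustrative sketch of the label preparation behind approach (1), not the authors' implementation. It assumes stress values normalized to [0, 1] and splits them into equal-width bins (the thresholds 1/3 and 2/3 are hypothetical). It also derives binary object presence flags from a per-pixel segmentation mask whose integer class IDs are likewise hypothetical, and it shows why guessing uniformly among three classes gives the 0.33 baseline. All function names are illustrative.

```python
from collections import Counter
from typing import Dict, List, Sequence

# Hypothetical thresholds: equal-width bins on a stress value ASSUMED to lie in [0, 1].
# The thresholds used in the study are not given in the abstract.
LOW_MEDIUM = 1.0 / 3.0
MEDIUM_HIGH = 2.0 / 3.0
CLASSES = ("low", "medium", "high")


def discretize_stress(value: float,
                      low_medium: float = LOW_MEDIUM,
                      medium_high: float = MEDIUM_HIGH) -> str:
    """Map a continuous stress value to one of three classes."""
    if value < low_medium:
        return "low"
    if value < medium_high:
        return "medium"
    return "high"


def object_presence_features(mask: Sequence[Sequence[int]],
                             class_ids: Sequence[int],
                             min_fraction: float = 0.0) -> Dict[int, int]:
    """Binary presence flag per semantic class in a segmentation mask.

    A class counts as present when its share of the mask's pixels
    exceeds min_fraction (a hypothetical tuning parameter).
    """
    counts = Counter(pixel for row in mask for pixel in row)
    total = sum(counts.values())
    return {c: int(total > 0 and counts[c] / total > min_fraction) for c in class_ids}


def accuracy(predicted: List[str], actual: List[str]) -> float:
    """Fraction of samples whose predicted class matches the label."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def random_baseline_accuracy(num_classes: int = len(CLASSES)) -> float:
    """Expected accuracy of guessing uniformly at random among the classes."""
    return 1.0 / num_classes


if __name__ == "__main__":
    print([discretize_stress(v) for v in (0.1, 0.5, 0.9)])  # ['low', 'medium', 'high']
    # Hypothetical class IDs, e.g. 0 = road, 1 = car, 2 = sky, 3 = pedestrian.
    mask = [[0, 0, 1], [2, 2, 2]]
    print(object_presence_features(mask, class_ids=[0, 1, 3]))  # {0: 1, 1: 1, 3: 0}
    print(round(random_baseline_accuracy(), 2))  # 0.33
```

In a real pipeline, the presence vectors for each frame would be fed to a classifier that predicts the discretized stress class. Accuracy is then compared against the 1/3 chance level.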
