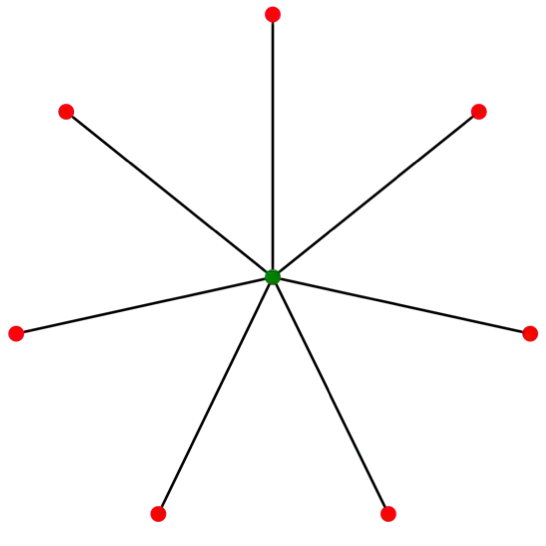

Robustness against adversarial attacks in neural networks is an important research topic in the machine learning community. We observe that one major source of vulnerability in neural networks is overparameterized fully connected layers. In this paper, we propose a new neighborhood preserving layer that can replace these fully connected layers to improve network robustness. We demonstrate a novel neural network architecture that incorporates such layers and can be trained efficiently. We prove theoretically that our models are more robust against distortion because they effectively control the magnitude of gradients. Finally, we show empirically that our network architecture is more robust against state-of-the-art gradient-based attacks, such as the PGD attack, on the benchmark datasets MNIST and CIFAR10.
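For readers unfamiliar with the PGD attack named above, the following is a minimal sketch of an L-infinity projected gradient descent attack. It does not reproduce the architecture or the experiments of the paper: the toy logistic classifier, the function names `loss_and_grad` and `pgd_attack`, and the values of `eps`, `step`, and `n_steps` are all illustrative assumptions. In this toy model the input gradient is `(p - y) * w`, so its size is bounded by the size of the weights, which gives a small-scale picture of why controlling gradient magnitude limits what a gradient-based attack can achieve.

```python
import numpy as np

def loss_and_grad(w, b, x, y):
    """Logistic loss of a toy linear classifier and its gradient w.r.t. the input x.

    Hypothetical stand-in model (not the paper's network); y is a label in {0, 1}.
    """
    z = float(w @ x + b)
    p = 1.0 / (1.0 + np.exp(-z))
    loss = -(y * np.log(p + 1e-12) + (1 - y) * np.log(1 - p + 1e-12))
    grad_x = (p - y) * w  # d(loss)/dx for the logistic loss
    return loss, grad_x

def pgd_attack(w, b, x, y, eps=0.3, step=0.05, n_steps=20):
    """L-infinity PGD: repeated signed gradient ascent on the loss, then projection."""
    x_adv = x.copy()
    for _ in range(n_steps):
        _, g = loss_and_grad(w, b, x_adv, y)
        x_adv = x_adv + step * np.sign(g)          # ascent step on the loss
        x_adv = np.clip(x_adv, x - eps, x + eps)   # project onto the eps-ball around x
        x_adv = np.clip(x_adv, 0.0, 1.0)           # stay in an assumed [0, 1] input range
    return x_adv

# Illustrative values only
w = np.array([1.0, -2.0, 0.5])
b = 0.0
x = np.array([0.5, 0.5, 0.5])
y = 1

x_adv = pgd_attack(w, b, x, y)
clean_loss, _ = loss_and_grad(w, b, x, y)
adv_loss, _ = loss_and_grad(w, b, x_adv, y)
print("clean loss:", clean_loss, "adversarial loss:", adv_loss)
```

The perturbation never leaves the `eps`-ball around the original input, so the attack's strength depends on how quickly the loss can change inside that ball, which is governed by the gradient magnitude.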
