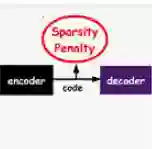

Learning rich data representations from unlabeled data is a key challenge in applying deep learning algorithms to downstream supervised tasks. Several variants of variational autoencoders (VAEs) have been proposed to learn compact data representations by encoding high-dimensional data in a lower-dimensional space. Two main classes of VAE methods can be distinguished by the meta-priors enforced during representation learning. The first class derives a continuous encoding by assuming a static prior distribution in the latent space. The second class instead learns a discrete latent representation using vector quantization (VQ) with a codebook. However, both classes face challenges that can lead to suboptimal image reconstruction: the first suffers from posterior collapse, whereas the second suffers from codebook collapse. To address these challenges, we introduce a new VAE variant, termed SC-VAE (sparse coding-based VAE), which integrates sparse coding into the variational autoencoder framework. Instead of learning a continuous or discrete latent representation, the proposed method learns a sparse data representation: a linear combination of a small number of learned atoms. The sparse coding problem is solved using a learnable version of the iterative shrinkage-thresholding algorithm (ISTA). Experiments on two image datasets show that our model achieves better image reconstruction than state-of-the-art methods. Moreover, the learned sparse code vectors allow us to perform downstream tasks such as coarse image segmentation by clustering image patches.
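The abstract states that the sparse codes come from a learnable version of ISTA but does not spell out the solver. As a hedged illustration (not the SC-VAE implementation), the pure-Python sketch below writes plain ISTA for the lasso problem `min_z 0.5*||x - D z||^2 + lam*||z||_1` in the unrolled form popularized by LISTA (Gregor & LeCun, 2010). Each step computes `z <- soft(W_e x + S z, theta)` with `W_e = D^T / L`, `S = I - D^T D / L` and `theta = lam / L`, which is algebraically identical to a gradient step followed by soft-thresholding. The helper names (`init_lista_params`, `lista_forward`, `lasso_objective`), the toy dictionary, and the choice `L = ||D||_F^2` (a safe upper bound on the largest eigenvalue of `D^T D`) are assumptions made for illustration.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # row-major; D has shape (dim, n_atoms), one atom per column


def matvec(A: Matrix, v: Vector) -> Vector:
    """Matrix-vector product A @ v."""
    return [sum(a * b for a, b in zip(row, v)) for row in A]


def transpose(A: Matrix) -> Matrix:
    return [list(col) for col in zip(*A)]


def soft_threshold(v: Vector, theta: float) -> Vector:
    """Element-wise shrinkage: sign(x) * max(|x| - theta, 0)."""
    return [(1.0 if x > 0 else -1.0) * max(abs(x) - theta, 0.0) for x in v]


def init_lista_params(D: Matrix, lam: float) -> Tuple[Matrix, Matrix, float]:
    """Initialise (W_e, S, theta) so that the unrolled loop is exactly plain ISTA.

    A learnable (LISTA-style) variant would treat all three as trainable
    parameters; that training step is not shown here.
    """
    Dt = transpose(D)  # shape (n_atoms, dim): each row is one atom
    # Step-size constant: ||D||_F^2 upper-bounds the largest eigenvalue of D^T D.
    L = sum(d * d for row in D for d in row)
    W_e = [[d / L for d in row] for row in Dt]
    n = len(Dt)
    S = [[(1.0 if i == j else 0.0) - sum(a * b for a, b in zip(Dt[i], Dt[j])) / L
          for j in range(n)] for i in range(n)]
    return W_e, S, lam / L


def lista_forward(x: Vector, W_e: Matrix, S: Matrix, theta: float, n_iters: int) -> Vector:
    """Run n_iters unrolled steps z <- soft(W_e x + S z, theta), starting from z = 0."""
    b = matvec(W_e, x)  # computed once, reused every iteration
    z = [0.0] * len(b)
    for _ in range(n_iters):
        z = soft_threshold([bi + si for bi, si in zip(b, matvec(S, z))], theta)
    return z


def lasso_objective(x: Vector, D: Matrix, z: Vector, lam: float) -> float:
    """0.5 * ||x - D z||^2 + lam * ||z||_1, the quantity ISTA decreases."""
    r = [xi - di for xi, di in zip(x, matvec(D, z))]
    return 0.5 * sum(v * v for v in r) + lam * sum(abs(v) for v in z)


if __name__ == "__main__":
    D = [[1.0, 0.0], [0.0, 1.0]]  # toy orthonormal dictionary (2 atoms in 2-D)
    W_e, S, theta = init_lista_params(D, lam=0.1)
    z = lista_forward([3.0, 0.05], W_e, S, theta, n_iters=100)
    print([round(v, 4) for v in z])  # → [2.9, 0.0]
```

With an orthonormal dictionary the lasso solution is just the soft-thresholded input, so the small second coefficient is driven exactly to zero: this is the sparsity the abstract refers to. Initialising `W_e`, `S` and `theta` from `D` means an untrained network already behaves like ISTA; training them end to end is what would make the solver "learnable" and let a small, fixed number of iterations produce a usable code. How SC-VAE parameterizes and trains its solver is not specified in this abstract.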
