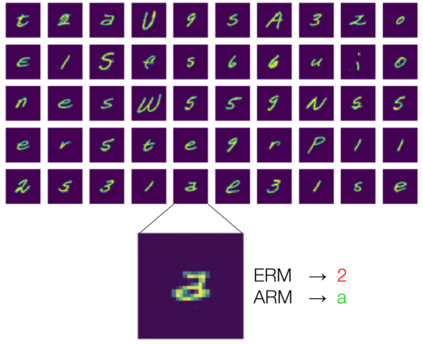

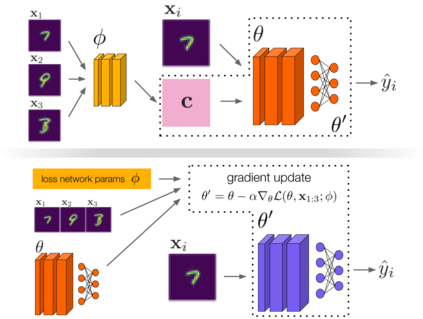

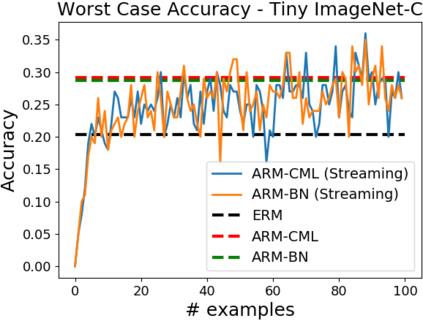

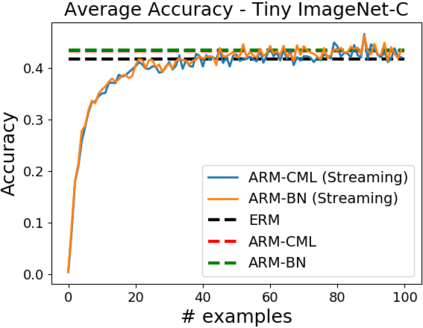

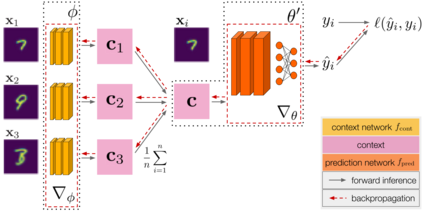

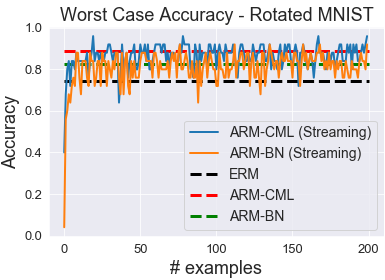

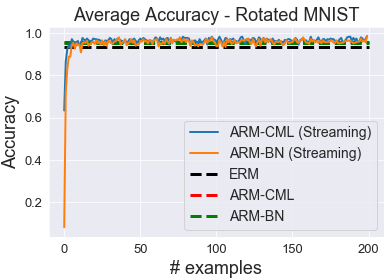

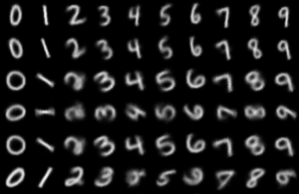

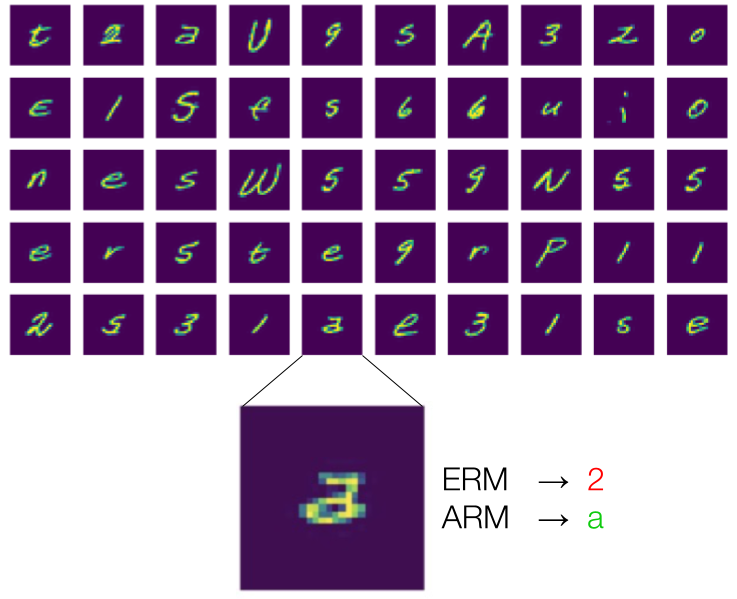

A fundamental assumption of most machine learning algorithms is that the training and test data are drawn from the same underlying distribution. However, this assumption is violated in almost all practical applications: machine learning systems are regularly tested under distribution shift, due to changing temporal correlations, atypical end users, or other factors. In this work, we consider the problem setting of domain generalization, where the training data are structured into domains and there may be multiple test-time shifts, corresponding to new domains or domain distributions. Most prior methods aim to learn a single robust model or invariant feature space that performs well on all domains. In contrast, we aim to learn models that adapt to domain shift at test time using unlabeled test points. Our primary contribution is to introduce the framework of adaptive risk minimization (ARM), in which models are directly optimized for effective adaptation to shift by learning to adapt on the training domains. Compared to prior methods for robustness, invariance, and adaptation, ARM methods provide gains of 1-4% in test accuracy on a number of image classification problems exhibiting domain shift.
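To make the "learn to adapt on the training domains" idea concrete, here is a deliberately minimal sketch under stated assumptions; it is not the method or code from this work. It assumes a toy 1-D problem (hypothetical helper `make_domain_batch`) in which each domain shifts the inputs by an unknown offset, and it assumes the simplest possible unlabeled adaptation step: centering each batch with its own mean (hypothetical helper `adapt`), similar in spirit to re-estimating normalization statistics at test time. During training, every batch is drawn from a single training domain and passed through `adapt` before the loss is computed, so the classifier is optimized for the inputs it will see after adaptation. At test time the same step is applied to a batch of unlabeled points from a new domain.

```python
import math
import random


def make_domain_batch(rng, shift, n):
    """Hypothetical toy domain: label y in {0, 1}, x = (2y - 1) + shift + noise."""
    xs, ys = [], []
    for _ in range(n):
        y = rng.randint(0, 1)
        xs.append((2 * y - 1) + shift + rng.gauss(0.0, 0.3))
        ys.append(y)
    return xs, ys


def adapt(xs):
    """Assumed adaptation step: uses only unlabeled inputs (centers by batch mean)."""
    mu = sum(xs) / len(xs)
    return [x - mu for x in xs]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train(domain_shifts, adaptive, steps=500, batch=50, lr=0.5, seed=0):
    """Logistic regression trained on per-domain batches, optionally through adapt()."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    for _ in range(steps):
        shift = rng.choice(domain_shifts)  # each batch comes from ONE training domain
        xs, ys = make_domain_batch(rng, shift, batch)
        if adaptive:
            xs = adapt(xs)  # adapt before the loss, exactly as at test time
        gw = gb = 0.0
        for x, y in zip(xs, ys):
            p = sigmoid(w * x + b)
            gw += (p - y) * x  # gradient of the log loss w.r.t. w
            gb += p - y        # gradient of the log loss w.r.t. b
        w -= lr * gw / batch
        b -= lr * gb / batch
    return w, b


def accuracy(w, b, shift, adaptive, n=2000, seed=1):
    """Evaluate on one batch from a (possibly unseen) domain; labels are used only for scoring."""
    rng = random.Random(seed)
    xs, ys = make_domain_batch(rng, shift, n)
    if adaptive:
        xs = adapt(xs)
    correct = sum((w * x + b > 0) == (y == 1) for x, y in zip(xs, ys))
    return correct / n


if __name__ == "__main__":
    train_shifts = [-1.0, 0.0, 1.0]
    w_a, b_a = train(train_shifts, adaptive=True)
    w_n, b_n = train(train_shifts, adaptive=False)
    print("adaptive, unseen shift 3:", accuracy(w_a, b_a, 3.0, adaptive=True))
    print("non-adaptive, unseen shift 3:", accuracy(w_n, b_n, 3.0, adaptive=False))
```

Trained on offsets {-1, 0, 1} and evaluated on an unseen offset of 3, the non-adaptive classifier should drop to roughly chance, while the adaptive one should stay close to perfect, because centering removes the shift using only unlabeled inputs. In this sketch the adaptation rule is fixed by hand and only the classifier is learned through it, which is a simplifying assumption.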

翻译:大多数机器学习算法的基本假设是,培训和测试数据来自相同的基本分布。然而,几乎所有实际应用都违反了这一假设:由于时间相关性的变化、非典型终端用户或其他因素,机器学习系统在分配转移时定期测试;在这项工作中,我们考虑域的概括化问题设置,即培训数据按领域编排,并可能有与新领域或域分布相对应的多重测试时间变化。大多数先前的方法都旨在学习一个在所有领域运行良好的单一强势模型或无差异特征空间。相比之下,我们的目的是学习在测试时使用未贴标签的测试点适应域转移的模式。我们的主要贡献是引入适应风险最小化框架(ARM),在这个框架中,模型直接优化,以便通过学习适应培训领域的适应而进行有效调整。与先前的稳健性、易变和适应方法相比,ARM方法为显示领域转移的若干图像分类问题提供了1%-4%的测试精度。