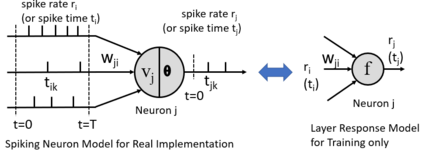

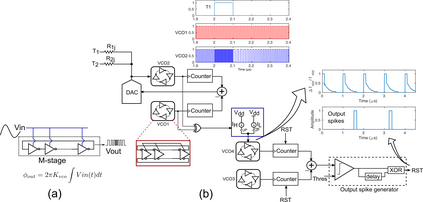

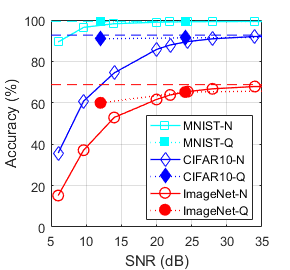

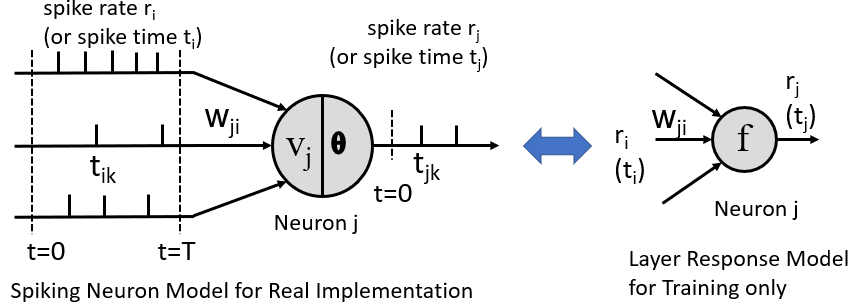

The spiking neural network (SNN) is interesting both theoretically and practically because of its strongly bio-inspired nature and potentially outstanding energy efficiency. Unfortunately, its development has fallen far behind that of the conventional deep neural network (DNN), mainly because SNNs are difficult to train and lack widely accepted hardware experiment platforms. In this paper, we show that a deep temporal-coded SNN can be trained easily and directly on the benchmark datasets CIFAR10 and ImageNet, with testing accuracy within 1% of a DNN of equivalent size and architecture. Training becomes similar to that of a DNN thanks to a closed-form solution to the spiking waveform dynamics. Because SNNs must ultimately run on practical neuromorphic hardware, we train the deep SNN with weights quantized to 8, 4, and 2 bits and with weights perturbed by random noise, demonstrating its robustness for practical applications. In addition, we develop a phase-domain signal processing circuit schematic that implements our spiking neuron with a 90% gain in energy efficiency over existing work. This paper demonstrates that the temporal-coded deep SNN is feasible for applications requiring both high performance and high energy efficiency.
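
The idea of a closed-form solution to the spiking dynamics can be illustrated with a minimal NumPy sketch. It assumes a non-leaky integrate-and-fire neuron in which an input spike at time t_i with weight w_i adds w_i * (t - t_i) to the membrane potential for t >= t_i; this kernel, the threshold `theta`, and every function name below (`spike_time`, `layer_forward`, `quantize_weights`, `perturb_weights`) are hypothetical choices for illustration and are not necessarily the paper's exact model. Under that assumption, once the set C of inputs that have already arrived is fixed, the potential is linear in t, so the output spike time is t_out = (theta + sum_{i in C} w_i t_i) / sum_{i in C} w_i, valid only if it falls before the next input spike. The symmetric uniform quantizer and the multiplicative Gaussian noise are likewise assumed stand-ins for the 8/4/2-bit and random-noise experiments.

```python
import numpy as np


def spike_time(t_in, w, theta=1.0):
    """Closed-form output spike time of one non-leaky integrate-and-fire neuron.

    Assumed (hypothetical) model: an input spike at t_i with weight w_i adds
    w_i * (t - t_i) to the membrane potential for t >= t_i, so the potential
    is piecewise linear in t. Returns np.inf if the threshold is never reached.
    """
    t_arr = np.asarray(t_in, dtype=float)
    w_arr = np.asarray(w, dtype=float)
    order = np.argsort(t_arr)
    t_sorted, w_sorted = t_arr[order], w_arr[order]
    sum_w, sum_wt = 0.0, 0.0
    for k in range(len(t_sorted)):
        # Add input k to the causal set of spikes that have arrived so far.
        sum_w += w_sorted[k]
        sum_wt += w_sorted[k] * t_sorted[k]
        if sum_w <= 0.0:
            continue  # potential is not rising on this interval: no crossing
        # Solve sum_w * t - sum_wt = theta for t (linear on this interval).
        t_out = (theta + sum_wt) / sum_w
        t_next = t_sorted[k + 1] if k + 1 < len(t_sorted) else np.inf
        if t_sorted[k] <= t_out < t_next:
            return t_out  # first crossing, before the next input arrives
    return np.inf


def layer_forward(t_in, W, theta=1.0):
    """Output spike times of a fully connected layer; W has shape (n_in, n_out)."""
    return np.array([spike_time(t_in, W[:, j], theta) for j in range(W.shape[1])])


def quantize_weights(W, bits):
    """Assumed scheme: symmetric uniform quantization to 2**bits - 1 levels."""
    q_max = 2 ** (bits - 1) - 1  # 127 for 8 bits, 7 for 4 bits, 1 for 2 bits
    scale = np.max(np.abs(W)) / q_max
    if scale == 0:
        return W.copy()
    return np.clip(np.round(W / scale), -q_max, q_max) * scale


def perturb_weights(W, rel_sigma, rng):
    """Assumed noise model: w * (1 + rel_sigma * n) with n ~ N(0, 1)."""
    return W * (1.0 + rel_sigma * rng.standard_normal(W.shape))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    t_in = rng.uniform(0.0, 1.0, size=16)  # temporal code: earlier = stronger input
    W = rng.normal(0.5, 0.5, size=(16, 4))
    print("full precision", layer_forward(t_in, W))
    for bits in (8, 4, 2):
        print(f"{bits}-bit", layer_forward(t_in, quantize_weights(W, bits)))
    print("noisy", layer_forward(t_in, perturb_weights(W, 0.1, rng)))
```

Within a fixed causal set, t_out is a differentiable function of the weights and input spike times, which is the intuition for why training can resemble DNN training; the paper's own training procedure and neuron kernel may differ from this sketch.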

翻译:Spik 神经网络(SNN)在理论上和实践上都非常有趣,因为它具有很强的生物吸入性质和潜在的杰出能源效率。不幸的是,它的开发远远落后于常规的深神经网络(DNN),这主要是因为培训困难和缺乏广泛接受的硬件实验平台。在本文中,我们表明深时间编码的SNN可以在基准数据集CIFAR10和图像网络上很容易和直接接受培训,测试精度在相当于同等大小和结构的DNN的1%之内。培训与DNNN类似,因为对波形波形动态的封闭式解决方案。考虑到SNNN应该安装在实际神经形态硬件中,我们训练深SNNN,将重量分成8、4、2位,并配有随机噪音的重量,以显示其在实际应用中的稳健性。此外,我们还开发了一个阶段-多信号处理电路路路,以实施我们的Spiking神经,其能效比现有工作增加90%。这份文件表明,时间编码深SNNNNN可以高性能应用。