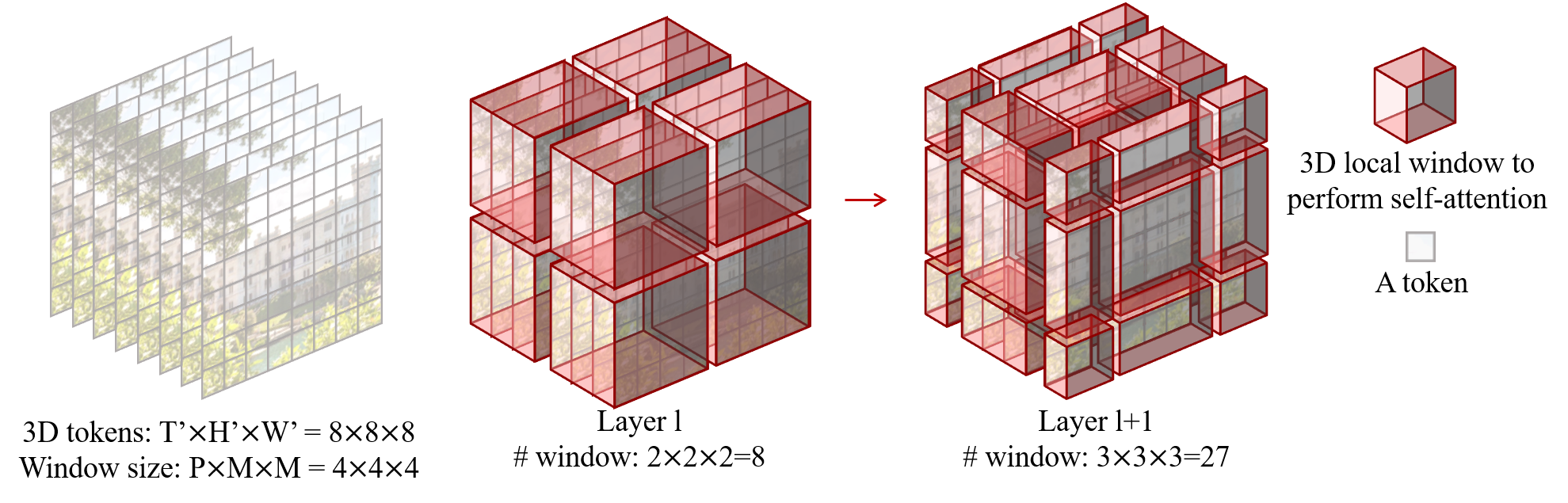

The vision community is witnessing a modeling shift from CNNs to Transformers, where pure Transformer architectures have attained top accuracy on the major video recognition benchmarks. These video models are all built on Transformer layers that globally connect patches across the spatial and temporal dimensions. In this paper, we instead advocate an inductive bias of locality in video Transformers, which leads to a better speed-accuracy trade-off than previous approaches that compute self-attention globally, even with spatial-temporal factorization. The locality of the proposed video architecture is realized by adapting the Swin Transformer, originally designed for the image domain, while continuing to leverage the power of pre-trained image models. Our approach achieves state-of-the-art accuracy on a broad range of video recognition benchmarks, including action recognition (84.9% top-1 accuracy on Kinetics-400 and 86.1% top-1 accuracy on Kinetics-600, with ~20x less pre-training data and a ~3x smaller model) and temporal modeling (69.6% top-1 accuracy on Something-Something v2). The code and models will be made publicly available at https://github.com/SwinTransformer/Video-Swin-Transformer.
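To make the locality argument concrete, the sketch below contrasts global self-attention, where every token of a T x H x W video token grid attends to every other token, with attention restricted to non-overlapping 3D windows. It is a minimal illustration under stated assumptions, not the paper's implementation. The function names and the grid and window sizes used below are hypothetical choices. The sketch omits the shifted-window step, the padding, and the attention computation itself, and only counts query-key pairs.

```python
from itertools import product


def window_partition_3d(T, H, W, wt, wh, ww):
    """Group the token coordinates of a T x H x W video grid into
    non-overlapping 3D windows of size wt x wh x ww.

    Hypothetical helper: assumes each grid dimension is divisible by the
    matching window size (a real model would pad the input instead).
    """
    if T % wt or H % wh or W % ww:
        raise ValueError("grid must be divisible by the window size")
    windows = []
    # Iterate over the corner of every window along time, height and width.
    for t0, h0, w0 in product(range(0, T, wt), range(0, H, wh), range(0, W, ww)):
        # Collect all (t, h, w) coordinates that fall inside this window.
        windows.append([
            (t, h, w)
            for t in range(t0, t0 + wt)
            for h in range(h0, h0 + wh)
            for w in range(w0, w0 + ww)
        ])
    return windows


def attention_pairs_global(T, H, W):
    """Query-key pairs when every token attends to every other token."""
    n = T * H * W
    return n * n


def attention_pairs_local(T, H, W, wt, wh, ww):
    """Query-key pairs when attention is restricted to each 3D window."""
    windows = window_partition_3d(T, H, W, wt, wh, ww)
    return sum(len(win) ** 2 for win in windows)


if __name__ == "__main__":
    # Illustrative sizes only: an 8 x 56 x 56 token grid, 8 x 7 x 7 windows.
    g = attention_pairs_global(8, 56, 56)
    l = attention_pairs_local(8, 56, 56, 8, 7, 7)
    print(g, l, g // l)
```

With these illustrative sizes, the grid holds 25,088 tokens split into 64 windows of 392 tokens each. Global attention needs 629,407,744 query-key pairs, while windowed attention needs 9,834,496, a 64x reduction that equals the number of windows. In general, the cost drops from quadratic in the total token count to linear in it for a fixed window size, which is the kind of saving behind the speed-accuracy trade-off described above.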
