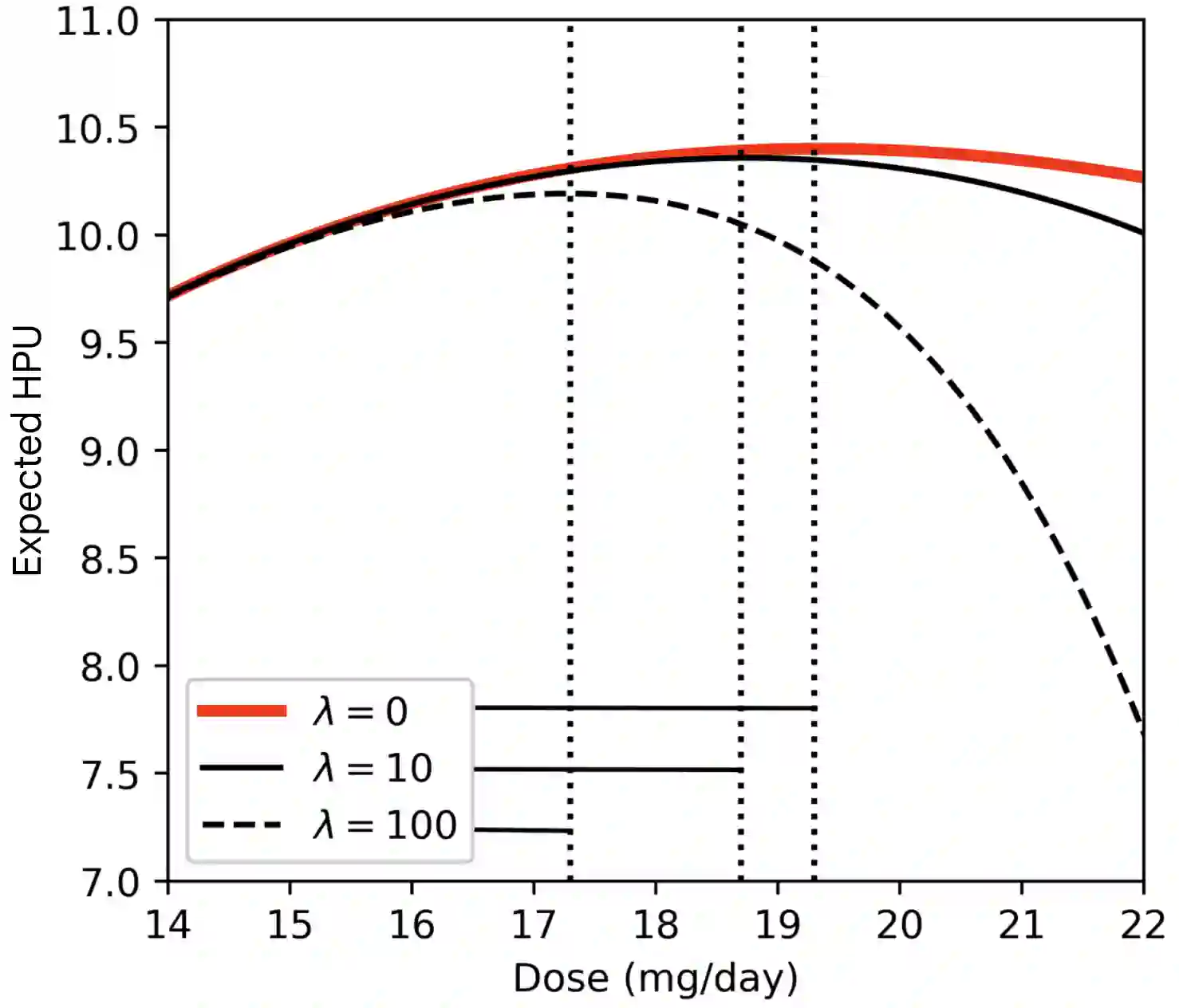

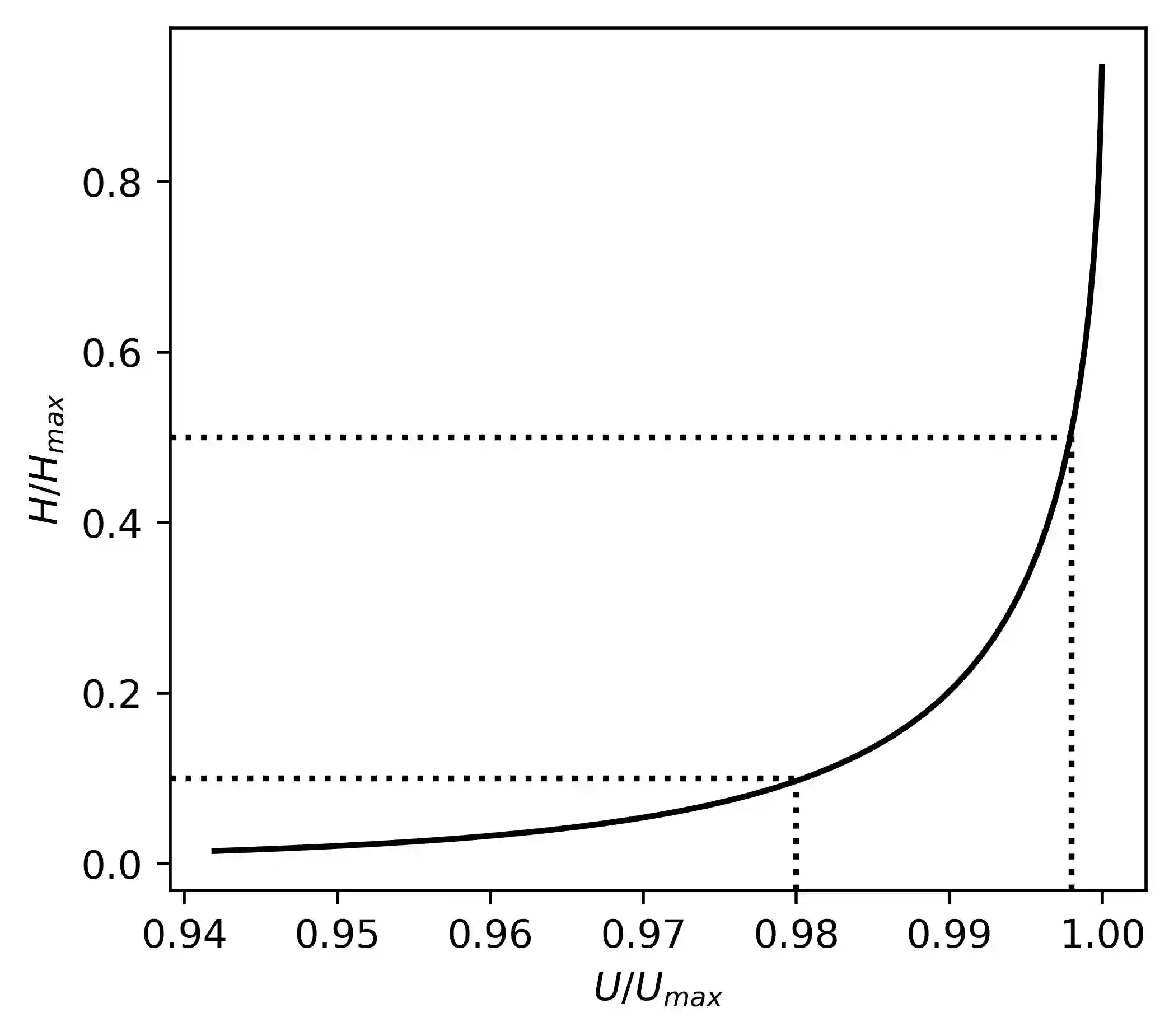

To act safely and ethically in the real world, agents must be able to reason about harm and avoid harmful actions. However, to date there is no statistical method for measuring harm and factoring it into algorithmic decisions. In this paper we propose the first formal definition of harm and benefit using causal models. We show that any factual definition of harm must violate basic intuitions in certain scenarios, and that standard machine learning algorithms that cannot perform counterfactual reasoning are guaranteed to pursue harmful policies following distributional shifts. We use our definition of harm to devise a framework for harm-averse decision making using counterfactual objective functions. We demonstrate this framework on the problem of identifying optimal drug doses using a dose-response model learned from randomized controlled trial data. We find that the standard method of selecting doses using treatment effects results in unnecessarily harmful doses, while our counterfactual approach allows us to identify doses that are significantly less harmful without sacrificing efficacy.
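The contrast between treatment-effect selection and a counterfactual, harm-averse objective can be illustrated with a toy calculation. The sketch below is not taken from the paper. It assumes a hypothetical population of four patient "response types" with made-up probabilities, and it assumes one plausible formalization of harm: the expected amount by which a patient's outcome under the chosen action falls short of what it would have been under a default action (here, no treatment). The names `POPULATION`, `expected_harm`, `harm_averse_value`, and the weight `lam` are all illustrative. Note that such a table of joint counterfactual outcomes is generally not identifiable from trial averages alone; in practice it would come from a causal model with additional assumptions.

```python
from fractions import Fraction as F

# Hypothetical response types (assumed for illustration only):
# (probability, outcome if untreated, outcome under A, outcome under B)
# Outcome 1 = patient recovers, 0 = patient dies.
POPULATION = [
    (F(3, 10), 0, 1, 1),  # dies untreated, cured by either treatment
    (F(2, 10), 0, 0, 1),  # dies untreated, cured only by B
    (F(3, 10), 1, 1, 1),  # recovers whatever is done
    (F(2, 10), 1, 1, 0),  # recovers untreated or on A, but is killed by B
]
ACTIONS = {"none": 1, "A": 2, "B": 3}  # column index of each action's outcome


def expected_outcome(action):
    """Average recovery rate under an action (all a trial average reveals)."""
    col = ACTIONS[action]
    return sum(row[0] * row[col] for row in POPULATION)


def expected_harm(action, default="none"):
    """Expected shortfall relative to the counterfactual default outcome.

    Assumed definition: harm is max(0, Y_default - Y_action) per patient.
    """
    col, base = ACTIONS[action], ACTIONS[default]
    return sum(row[0] * max(0, row[base] - row[col]) for row in POPULATION)


def harm_averse_value(action, lam):
    """Expected outcome penalized by lam times expected harm (assumed form)."""
    return expected_outcome(action) - lam * expected_harm(action)


if __name__ == "__main__":
    for a in ("A", "B"):
        effect = expected_outcome(a) - expected_outcome("none")
        print(a, "effect:", effect, "harm:", expected_harm(a),
              "harm-averse value:", harm_averse_value(a, lam=1))
```

In this toy population both treatments raise the recovery rate by the same amount, so a rule based only on treatment effects cannot tell them apart, yet only treatment B harms patients who would have recovered untreated. Any positive `lam` therefore makes the harm-averse objective prefer A.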
