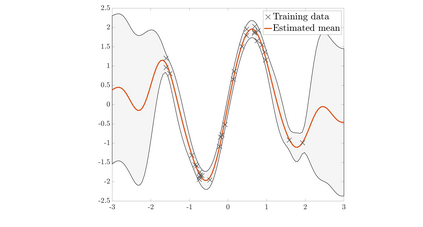

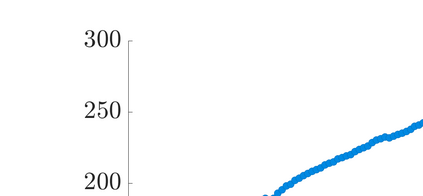

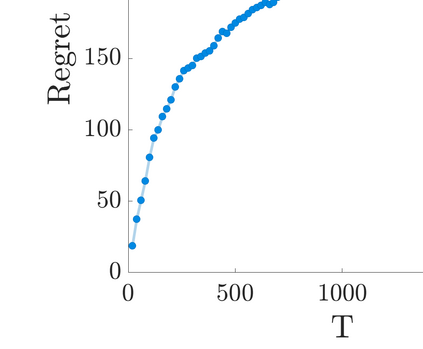

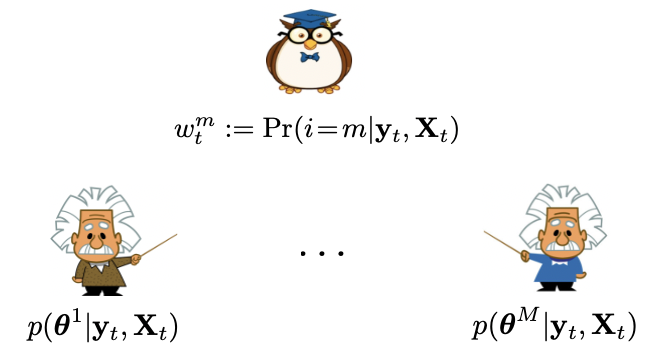

Belonging to the family of Bayesian nonparametrics, Gaussian process (GP) based approaches have well-documented merits not only in learning over a rich class of nonlinear functions, but also in quantifying the associated uncertainty. However, most GP methods rely on a single preselected kernel function, which may fall short in characterizing data samples that arrive sequentially in time-critical applications. To enable *online* kernel adaptation, the present work advocates an incremental ensemble (IE-) GP framework, where an EGP meta-learner employs an *ensemble* of GP learners, each having a unique kernel belonging to a prescribed kernel dictionary. With each GP expert leveraging a random feature-based approximation to perform online prediction and model updates in a *scalable* manner, the EGP meta-learner uses data-adaptive weights to combine the per-expert predictions. Further, IE-GP is generalized to accommodate time-varying functions by modeling structured dynamics both at the EGP meta-learner and within each GP learner. To benchmark the performance of IE-GP and its dynamic variant in the adversarial setting, where the modeling assumptions are violated, rigorous performance analysis is conducted via the notion of regret, as is the norm in online convex optimization. Finally, online unsupervised learning for dimensionality reduction is explored under the IE-GP framework. Synthetic and real data tests demonstrate the effectiveness of the proposed schemes.
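The ensemble mechanism described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation, and it rests on several assumptions that the abstract does not state: the kernel dictionary consists of RBF kernels with different length-scales, inputs are scalar, each expert is a Bayesian linear model over random Fourier features with a unit-variance Gaussian prior, and the meta-learner multiplies each expert's weight by its Gaussian predictive likelihood. The names `RFGPExpert` and `EnsembleGP` are hypothetical.

```python
import math
import random


class RFGPExpert:
    """One GP expert, approximated with random Fourier features (assumed RBF kernel, 1-D input)."""

    def __init__(self, lengthscale, num_freqs=20, noise_var=0.01, seed=0):
        rng = random.Random(seed)
        # Spectral samples of an RBF kernel: v ~ N(0, 1 / lengthscale^2).
        self.freqs = [rng.gauss(0.0, 1.0 / lengthscale) for _ in range(num_freqs)]
        self.noise_var = noise_var
        dim = 2 * num_freqs
        self.mean = [0.0] * dim  # posterior mean of the feature weights
        self.cov = [[1.0 if i == j else 0.0 for j in range(dim)] for i in range(dim)]

    def features(self, x):
        scale = 1.0 / math.sqrt(len(self.freqs))
        return ([scale * math.sin(v * x) for v in self.freqs]
                + [scale * math.cos(v * x) for v in self.freqs])

    def predict(self, x):
        """Return predictive mean and variance, plus cached vectors for the update."""
        phi = self.features(x)
        cov_phi = [sum(c * p for c, p in zip(row, phi)) for row in self.cov]
        mean = sum(m * p for m, p in zip(self.mean, phi))
        var = sum(p * cp for p, cp in zip(phi, cov_phi)) + self.noise_var
        return mean, var, phi, cov_phi

    def update(self, y, mean, var, phi, cov_phi):
        """Rank-one (Kalman-style) posterior update; cost is independent of the sample count."""
        gain = [cp / var for cp in cov_phi]
        err = y - mean
        self.mean = [m + g * err for m, g in zip(self.mean, gain)]
        self.cov = [[c - g * cp for c, cp in zip(row, cov_phi)]
                    for row, g in zip(self.cov, gain)]


class EnsembleGP:
    """Meta-learner that combines expert predictions with data-adaptive weights."""

    def __init__(self, lengthscales):
        self.experts = [RFGPExpert(ls, seed=i) for i, ls in enumerate(lengthscales)]
        self.log_w = [0.0] * len(self.experts)  # uniform prior over experts

    def weights(self):
        top = max(self.log_w)  # subtract the max for numerical stability
        unnorm = [math.exp(lw - top) for lw in self.log_w]
        total = sum(unnorm)
        return [u / total for u in unnorm]

    def step(self, x, y):
        """Predict at x, then reveal y and update both the weights and the experts."""
        w = self.weights()
        preds = [expert.predict(x) for expert in self.experts]
        y_hat = sum(wi * p[0] for wi, p in zip(w, preds))
        for m, (expert, (mean, var, phi, cov_phi)) in enumerate(zip(self.experts, preds)):
            # Assumed weight rule: add the log of the Gaussian predictive likelihood.
            self.log_w[m] += -0.5 * (math.log(2 * math.pi * var) + (y - mean) ** 2 / var)
            expert.update(y, mean, var, phi, cov_phi)
        return y_hat


# Toy stream (synthetic, for illustration only): noisy samples of sin(x).
rng = random.Random(1)
model = EnsembleGP(lengthscales=[0.1, 1.0, 10.0])
for _ in range(100):
    x = rng.uniform(-2.0, 2.0)
    model.step(x, math.sin(x) + 0.1 * rng.gauss(0.0, 1.0))
print("expert weights:", [round(w, 3) for w in model.weights()])
```

Because each expert stores only a fixed-size mean vector and covariance matrix, the per-sample cost does not grow with the number of samples seen, which is the scalability benefit the abstract attributes to the random feature approximation. The dynamic variant and the regret analysis are beyond the scope of this sketch.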
