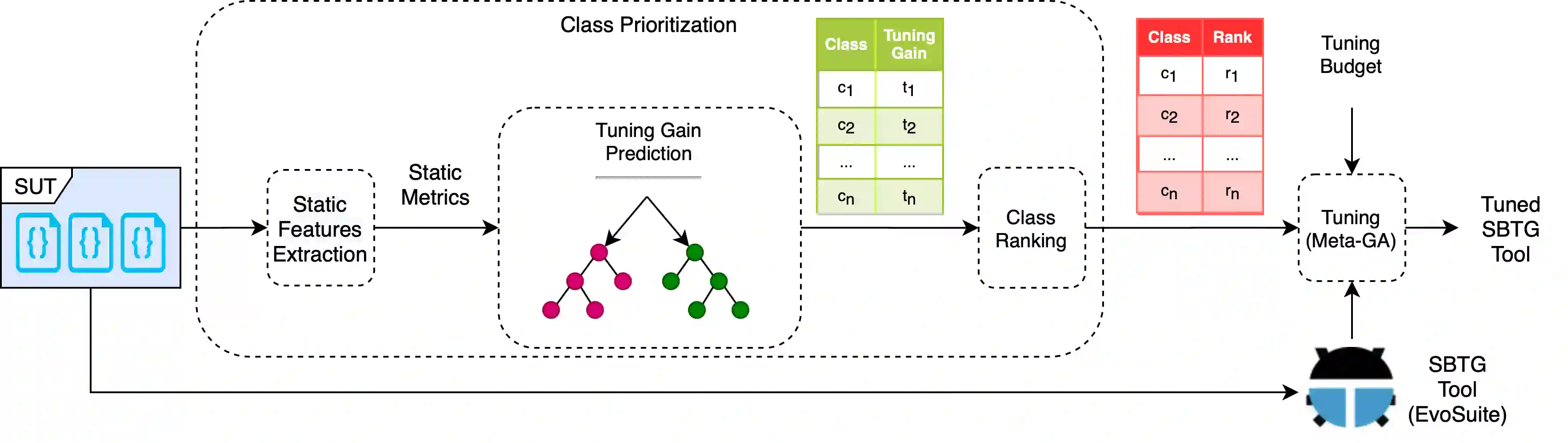

Search-based test case generation, the application of meta-heuristic search to generating test cases, has been studied extensively in recent literature. Because the performance of meta-heuristic search methods is, in theory, highly dependent on their hyper-parameters, hyper-parameter tuning in this domain needs to be studied. In this paper, we propose a new metric ("Tuning Gain"), which estimates how cost-effective it is to tune a particular class. We then predict "Tuning Gain" using static features of source code classes. Finally, we prioritize classes for tuning based on the estimated "Tuning Gains" and spend the tuning budget only on the highly ranked classes. To evaluate our approach, we exhaustively analyze 1,200 hyper-parameter configurations of a well-known search-based test generation tool (EvoSuite) for 250 classes of 19 projects from benchmarks such as SF110 and the SBST2018 tool competition. We use a tuning approach called Meta-GA and compare the tuning results with and without the proposed class prioritization. The results show that, for a low tuning budget, prioritizing classes outperforms the alternatives in terms of extra covered branches (10 times more than traditional global tuning). In addition, we report the impact on the final results of different aspects of our approach, such as search space size, tuning budget, tuning algorithm, and the number of classes to tune.
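The prioritization step described in the abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the abstract does not define how "Tuning Gain" is computed or predicted, so the sketch takes already-predicted gains as input. The function names `prioritize_classes` and `allocate_budget`, the `top_k` cutoff, and the even split of the budget are all assumptions made for illustration.

```python
from typing import Dict, List


def prioritize_classes(predicted_gain: Dict[str, float], top_k: int) -> List[str]:
    """Return the top_k class names, highest predicted gain first.

    Ties are broken alphabetically so the ranking is deterministic.
    """
    ranked = sorted(predicted_gain, key=lambda name: (-predicted_gain[name], name))
    return ranked[:top_k]


def allocate_budget(selected: List[str], total_budget: int) -> Dict[str, int]:
    """Split total_budget over the selected classes only.

    An even split is an assumption of this sketch; the abstract only says
    that the budget is spent on the highly ranked classes.
    """
    if not selected:
        return {}
    share, remainder = divmod(total_budget, len(selected))
    # Hand out any leftover units to the highest-ranked classes first.
    return {
        name: share + (1 if i < remainder else 0)
        for i, name in enumerate(selected)
    }


if __name__ == "__main__":
    # Hypothetical predicted gains for three classes (illustrative values).
    gains = {"ClassA": 0.1, "ClassB": 0.7, "ClassC": 0.4}
    chosen = prioritize_classes(gains, top_k=2)
    print(chosen)
    print(allocate_budget(chosen, total_budget=11))
```

In this sketch, classes outside the top `top_k` receive no tuning budget at all and would simply run with default hyper-parameters, which mirrors the idea of spending a small budget only where tuning is expected to pay off.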
