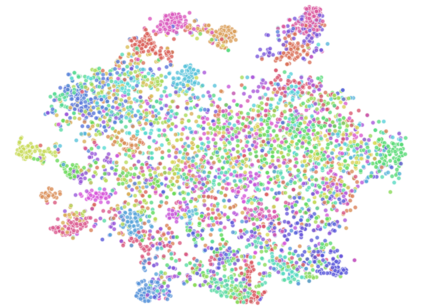

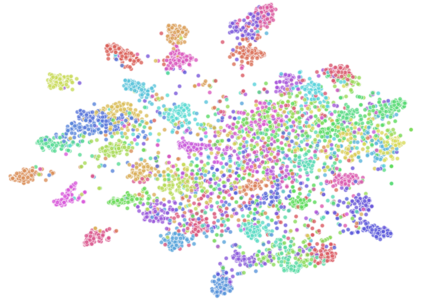

In class-incremental learning, the model is expected to learn new classes continually while retaining knowledge of previous classes. The challenge lies in preserving the model's ability to represent prior classes effectively in the feature space while adapting it to represent incoming new classes. We propose two distillation-based objectives for class-incremental learning that leverage the structure of the feature space to maintain accuracy on previous classes while also enabling the model to learn new ones. In our first objective, termed cross-space clustering (CSC), we use the feature-space structure of the previous model to characterize the directions of optimization that maximally preserve each class: directions that all instances of a specific class should collectively optimize towards, and directions they should collectively optimize away from. Apart from minimizing forgetting, this indirectly encourages the model to cluster all instances of a class in the current feature space and gives rise to a form of herd immunity, allowing all samples of a class to jointly prevent the model from forgetting that class. Our second objective, termed controlled transfer (CT), tackles incremental learning from the understudied perspective of inter-class transfer. CT explicitly approximates the semantic similarities between incrementally arriving classes and prior classes and conditions the current model on them. This allows the model to learn new classes in a way that maximizes positive forward transfer from similar prior classes, thereby increasing plasticity, and minimizes negative backward transfer onto dissimilar prior classes, thereby strengthening stability. We perform extensive experiments on two benchmark datasets, adding our method (CSCCT) on top of three prominent class-incremental learning methods, and observe consistent performance improvements across a variety of experimental settings.
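
The abstract describes both objectives only in words. The following is a minimal NumPy sketch of how they could be expressed as losses, assuming cosine similarity between L2-normalized features, a simple pull/push form for CSC, and class-mean prototypes with a temperature-scaled softmax and a KL divergence for CT. The function names (`csc_loss`, `ct_loss`), the temperature `tau`, the clipping of the push term, and the exact loss forms are hypothetical illustrations, not the authors' equations or code.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Row-wise L2 normalization, so that dot products become cosine similarities."""
    return x / (np.linalg.norm(x, axis=1, keepdims=True) + eps)


def softmax(z, tau):
    """Temperature-scaled, numerically stable row-wise softmax."""
    z = z / tau
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def csc_loss(feat_cur, feat_prev, labels):
    """Cross-space clustering, hypothetical pull/push form.

    feat_cur:  (N, D) batch features from the current model.
    feat_prev: (N, D) features of the same batch from the frozen previous model.
    labels:    (N,) integer class labels.
    """
    # (N, N) cosines between current-space sample i and previous-space sample j.
    cos = l2_normalize(feat_cur) @ l2_normalize(feat_prev).T
    same = labels[:, None] == labels[None, :]
    # Pull each sample toward the previous-space features of its whole class...
    pull = np.where(same, 1.0 - cos, 0.0)
    # ...and push it away from other classes (assumption: clipped at 0, so
    # orthogonality to other classes already incurs no penalty).
    push = np.where(~same, np.clip(cos, 0.0, None), 0.0)
    return float((pull + push).sum() / cos.size)


def class_means(feats, labels, classes):
    """Mean feature vector (prototype) of each class, in the order given."""
    return np.stack([feats[labels == c].mean(axis=0) for c in classes])


def ct_loss(new_cur, new_prev, old_cur, old_prev, old_labels, old_classes, tau=0.1):
    """Controlled transfer, hypothetical KL form.

    new_cur / new_prev: (M, D) new-class samples under the current / previous model.
    old_cur / old_prev: (K, D) stored prior-class exemplars under both models.
    The previous model gives each new sample a similarity distribution over prior
    classes; the current model is asked to reproduce that distribution.
    """
    protos_prev = l2_normalize(class_means(old_prev, old_labels, old_classes))
    protos_cur = l2_normalize(class_means(old_cur, old_labels, old_classes))
    p = softmax(l2_normalize(new_prev) @ protos_prev.T, tau)  # target distribution
    q = softmax(l2_normalize(new_cur) @ protos_cur.T, tau)    # current model's distribution
    kl = np.sum(p * (np.log(p + 1e-12) - np.log(q + 1e-12)), axis=1)  # KL(p || q) per sample
    return float(kl.mean())
```

Both functions only compute loss values; in an actual training loop they would be written in an autodiff framework, with the previous model frozen, and each term would be weighted and added to the loss of the base class-incremental method. The weights, and which samples (new-class data or stored exemplars) each term is applied to, are further assumptions of this sketch.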
