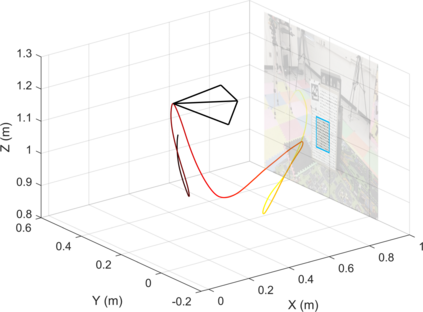

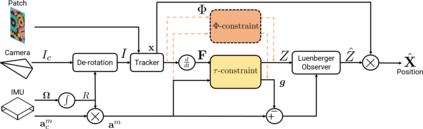

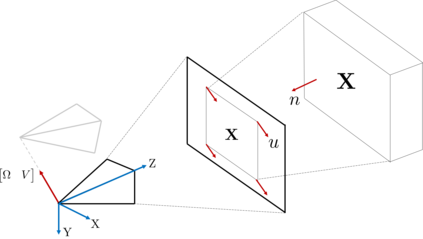

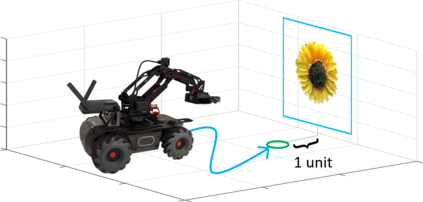

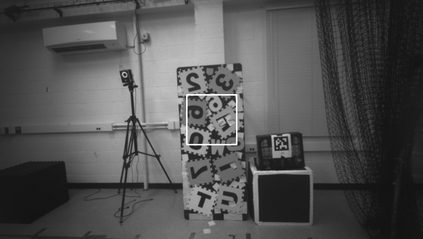

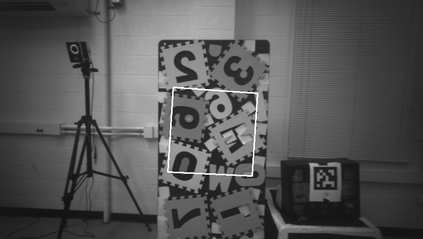

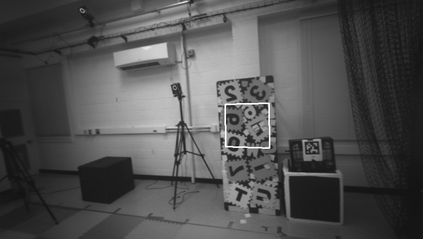

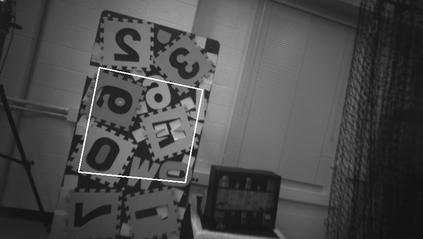

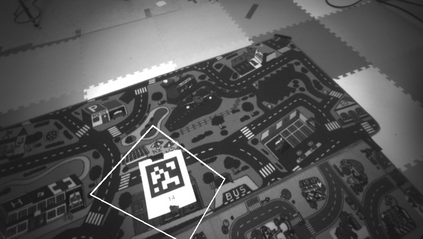

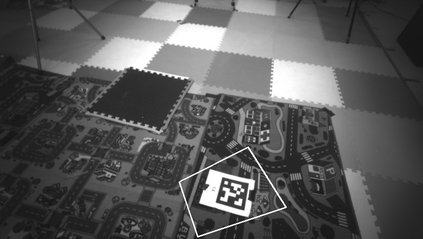

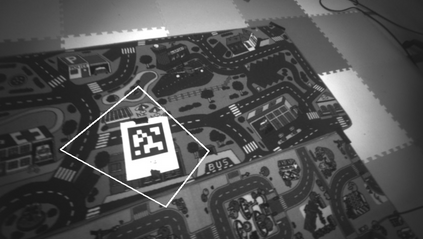

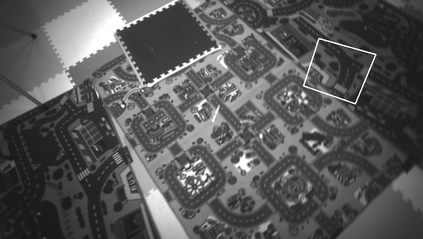

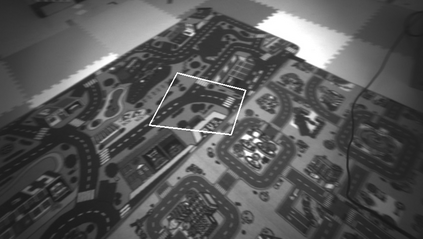

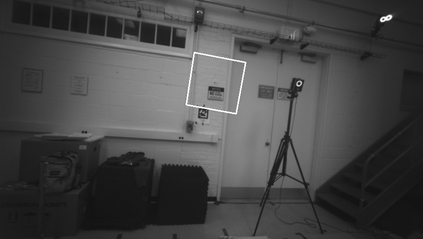

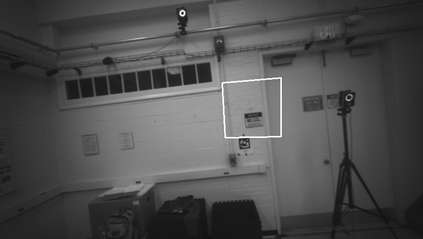

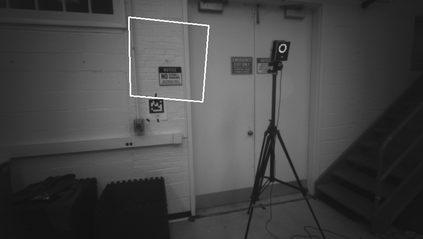

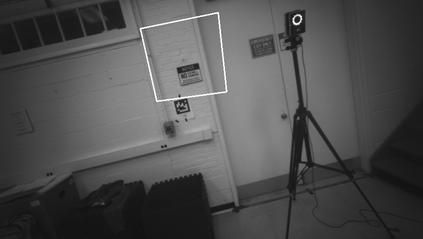

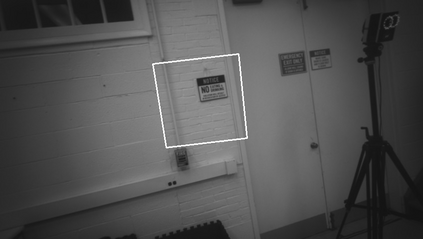

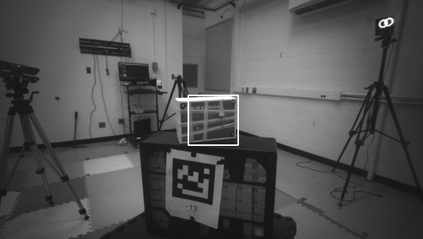

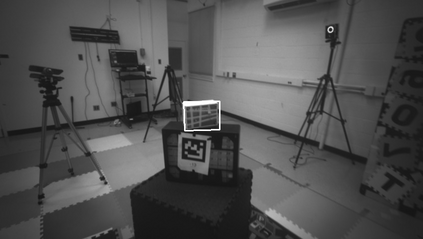

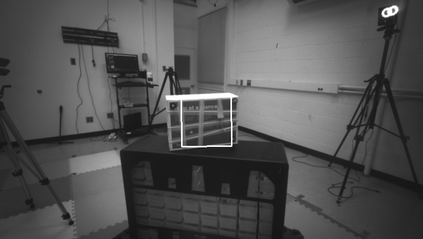

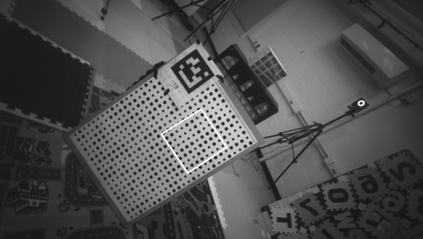

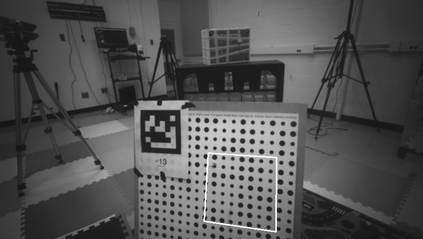

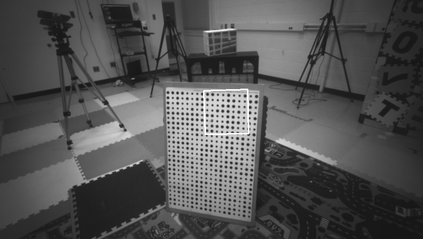

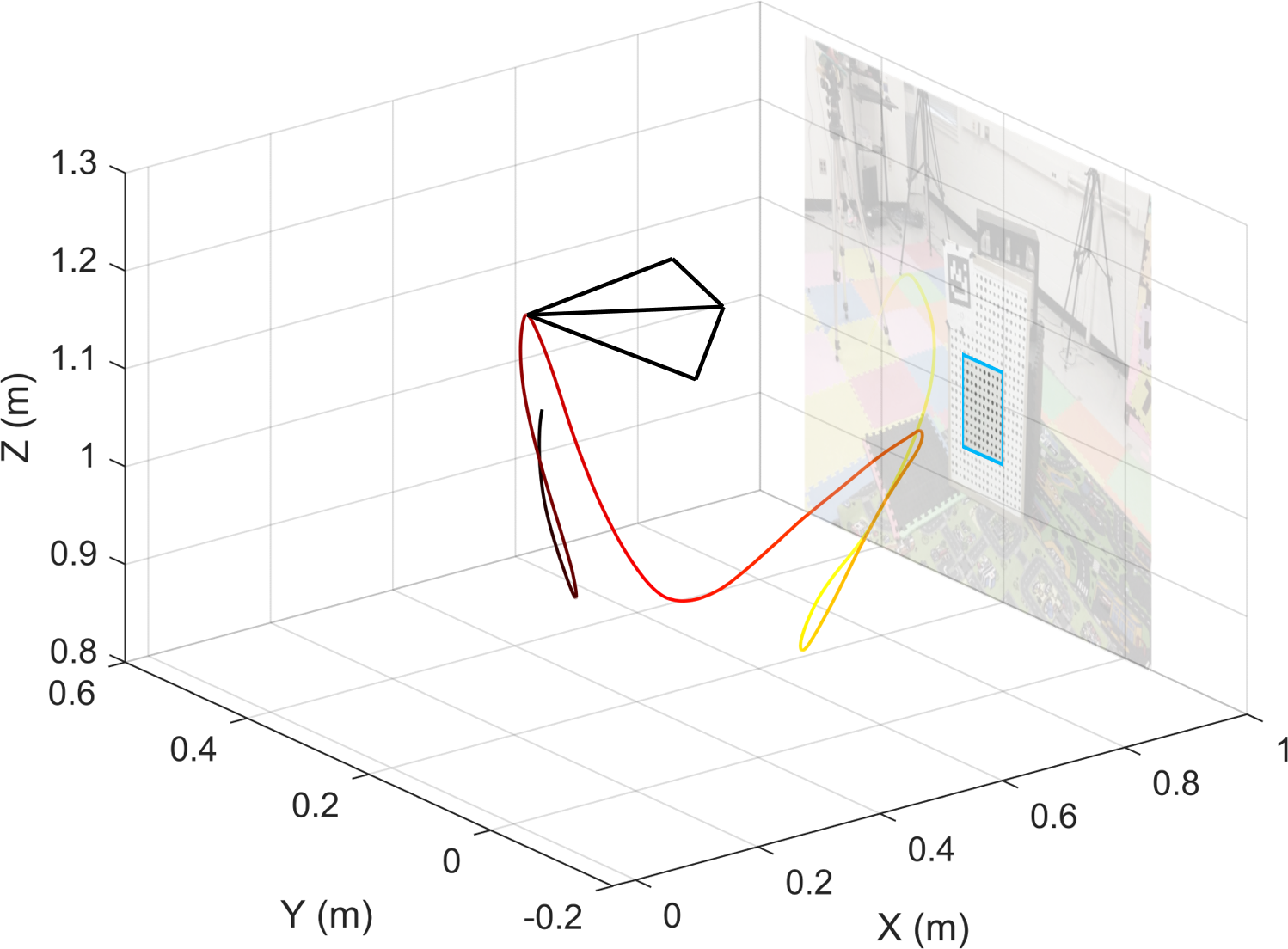

Distance estimation from vision is fundamental for a myriad of robotic applications such as navigation, manipulation, and planning. Inspired by the mammalian visual system, which gazes at specific objects, we develop two novel constraints involving time-to-contact, acceleration, and distance, which we call the $\tau$-constraint and the $\Phi$-constraint. They allow an active (moving) camera to estimate depth efficiently and accurately while using only a small portion of the image. We validate the proposed constraints with two experiments. The first applies both constraints in a trajectory estimation task with a monocular camera and an Inertial Measurement Unit (IMU). Our methods achieve 30-70% lower average trajectory error while running 25$\times$ and 6.2$\times$ faster than the popular Visual-Inertial Odometry methods VINS-Mono and ROVIO, respectively. The second experiment demonstrates that when the constraints are used for feedback with efference copies, the resulting closed-loop system's eigenvalues are invariant to scaling of the applied control signal. We believe these results indicate the potential of the $\tau$- and $\Phi$-constraints as the basis of robust and efficient algorithms for a multitude of robotic applications.
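
To make the link between time-to-contact, acceleration, and distance concrete, the following is a minimal sketch of a textbook relation, not the $\tau$-constraint or $\Phi$-constraint themselves, whose exact form the abstract does not give. It assumes motion along the optical axis with a known constant acceleration (as an IMU might supply) and the sign convention $\tau(t) = -Z(t)/\dot{Z}(t)$, so that $Z(t) + \tau(t)\dot{Z}(t) = 0$. Substituting $Z(t) = Z_0 + V_0 t + \tfrac{1}{2} a t^2$ yields a system that is linear in the unknown initial distance $Z_0$ and velocity $V_0$. The function name `estimate_distance` and all numeric values are hypothetical.

```python
import numpy as np


def estimate_distance(t, tau, accel):
    """Recover initial distance Z0 and velocity V0 from time-to-contact samples.

    Illustrative assumptions (not taken from the paper):
      * motion is along the optical axis only,
      * acceleration `accel` is constant and known,
      * tau(t) = -Z(t) / Zdot(t), i.e. positive while approaching.
    """
    t = np.asarray(t, dtype=float)
    tau = np.asarray(tau, dtype=float)

    dv = accel * t              # velocity change due to acceleration
    dz = 0.5 * accel * t ** 2   # position change due to acceleration

    # Z(t) + tau(t) * Zdot(t) = 0 expands to
    #   Z0 + V0 * (t + tau) = -(dz + tau * dv)
    A = np.column_stack([np.ones_like(t), t + tau])
    b = -(dz + tau * dv)

    (z0, v0), *_ = np.linalg.lstsq(A, b, rcond=None)
    return z0, v0


if __name__ == "__main__":
    # Synthetic ground truth (hypothetical values).
    z0_true, v0_true, accel = 2.0, -0.5, 0.3
    t = np.linspace(0.0, 1.0, 20)
    z = z0_true + v0_true * t + 0.5 * accel * t ** 2
    zdot = v0_true + accel * t
    tau = -z / zdot  # what a vision front end would measure

    print(estimate_distance(t, tau, accel))
```

Note that when the acceleration is zero the right-hand side vanishes and the system becomes homogeneous, so only the ratio $Z_0/V_0$ is determined. This is the usual reason an independent metric cue such as acceleration is needed to turn time-to-contact into distance.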
