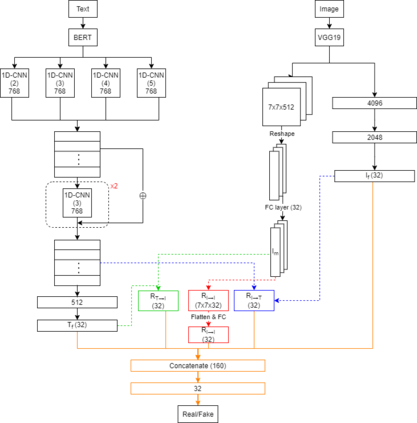

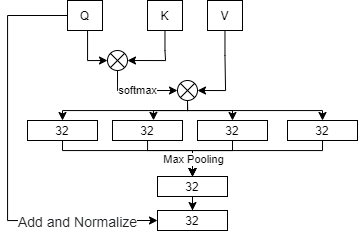

Fake news detection is an important task for improving the credibility of information in the media: fake news spreads on social media every day and is a serious societal concern. Fake news is usually created by manipulating images, text, and videos. In this paper, we present a novel method for detecting fake news by fusing multimodal features derived from textual and visual data. Specifically, we use a pre-trained BERT model to learn text features and a VGG-19 model pre-trained on the ImageNet dataset to extract image features. We propose a scaled dot-product attention mechanism to capture the relationship between text features and visual features. Experimental results show that our approach outperforms the current state-of-the-art method on a public Twitter dataset by 3.1% in accuracy.
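
The attention step named in the abstract can be illustrated with a minimal sketch of standard scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V. The abstract does not say which modality supplies the queries, keys, and values, nor the feature shapes, so everything below is an assumption made for illustration: text features act as queries, image features act as keys and values, random arrays stand in for BERT and VGG-19 outputs, and the projection matrices `W_q`, `W_k`, `W_v` and the shared size `d_model` are hypothetical.

```python
import numpy as np


def scaled_dot_product_attention(query, key, value):
    """Compute softmax(Q K^T / sqrt(d_k)) V and return (output, weights)."""
    d_k = query.shape[-1]
    scores = query @ key.T / np.sqrt(d_k)                 # shape (n_query, n_key)
    scores = scores - scores.max(axis=-1, keepdims=True)  # stabilise the softmax
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ value, weights


rng = np.random.default_rng(0)

# Random stand-ins for extracted features (assumed shapes, not from the paper):
# 12 text tokens with 768-dim features, 49 image regions with 512-dim features.
text_feats = rng.standard_normal((12, 768))
image_feats = rng.standard_normal((49, 512))

# Hypothetical linear projections into a shared space of size d_model.
d_model = 64
W_q = rng.standard_normal((768, d_model)) / np.sqrt(768)
W_k = rng.standard_normal((512, d_model)) / np.sqrt(512)
W_v = rng.standard_normal((512, d_model)) / np.sqrt(512)

# Text queries attend over image regions (an assumed direction of attention).
fused, attn = scaled_dot_product_attention(
    text_feats @ W_q, image_feats @ W_k, image_feats @ W_v
)

print(fused.shape)  # one fused vector per text token
print(attn.shape)   # one weight per (text token, image region) pair
```

Each row of `attn` is a probability distribution over image regions for one text token, so `fused` holds an image-conditioned vector per token. A classifier for the real/fake label would sit on top of such fused features; its design is not described in the abstract and is left out here.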
