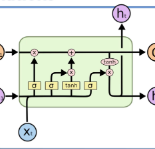

In recent years, there has been increased interest in building predictive models that harness natural language processing and machine learning techniques to detect emotions from various text sources, including social media posts, microblogs, and news articles. Yet deploying such models in real-world sentiment and emotion applications faces challenges, in particular poor out-of-domain generalizability. This is likely due to domain-specific differences (e.g., topics, communicative goals, and annotation schemes) that make transfer between different models of emotion recognition difficult. In this work, we propose approaches for text-based emotion detection that leverage transformer models (BERT and RoBERTa) in combination with Bidirectional Long Short-Term Memory (BiLSTM) networks trained on a comprehensive set of psycholinguistic features. First, we evaluate the in-domain performance of our models on two benchmark datasets: GoEmotions and ISEAR. Second, we conduct transfer learning experiments on six datasets from the Unified Emotion Dataset to evaluate the models' out-of-domain robustness. We find that the proposed hybrid models generalize better to out-of-distribution data than a standard transformer-based approach, while also performing competitively on in-domain data.
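The abstract does not say exactly how the transformer and BiLSTM branches are combined, so the following is only a minimal NumPy sketch of one plausible hybrid design, not the authors' implementation. It assumes (1) the transformer branch is represented by a single pooled text embedding (e.g., a 768-dimensional BERT or RoBERTa [CLS] vector, passed in as a plain array here), (2) the psycholinguistic features form a sequence of per-segment feature vectors that a BiLSTM reads in both directions, and (3) the two representations are fused by concatenation before a softmax classifier. The names `LSTMCell` and `HybridEmotionClassifier`, the feature and hidden sizes, and the random untrained weights are all hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class LSTMCell:
    """Single-direction LSTM (input, forget, candidate, output gates); forward pass only."""

    def __init__(self, input_dim, hidden_dim, rng):
        self.hidden_dim = hidden_dim
        scale = 1.0 / np.sqrt(hidden_dim)
        # Weights for the four gates stacked row-wise: [input, forget, candidate, output].
        self.W = rng.uniform(-scale, scale, (4 * hidden_dim, input_dim))
        self.U = rng.uniform(-scale, scale, (4 * hidden_dim, hidden_dim))
        self.b = np.zeros(4 * hidden_dim)

    def run(self, seq):
        """Read a (T, input_dim) sequence step by step and return the final hidden state."""
        H = self.hidden_dim
        h = np.zeros(H)
        c = np.zeros(H)
        for x_t in seq:
            z = self.W @ x_t + self.U @ h + self.b
            i = sigmoid(z[:H])             # input gate
            f = sigmoid(z[H:2 * H])        # forget gate
            g = np.tanh(z[2 * H:3 * H])    # candidate cell state
            o = sigmoid(z[3 * H:])         # output gate
            c = f * c + i * g
            h = o * np.tanh(c)
        return h


class HybridEmotionClassifier:
    """Hypothetical late-fusion model: transformer embedding + BiLSTM over psycholinguistic features."""

    def __init__(self, text_dim, feat_dim, hidden_dim, n_classes, seed=0):
        rng = np.random.default_rng(seed)
        self.fwd = LSTMCell(feat_dim, hidden_dim, rng)   # reads features left to right
        self.bwd = LSTMCell(feat_dim, hidden_dim, rng)   # reads features right to left
        fused_dim = text_dim + 2 * hidden_dim
        self.W_out = rng.normal(0.0, 0.02, (n_classes, fused_dim))
        self.b_out = np.zeros(n_classes)

    def predict_proba(self, text_emb, feat_seq):
        """Return a probability distribution over emotion classes (weights are untrained)."""
        h_fwd = self.fwd.run(feat_seq)
        h_bwd = self.bwd.run(feat_seq[::-1])
        # Late fusion by concatenation (an assumption; the abstract does not specify it).
        fused = np.concatenate([text_emb, h_fwd, h_bwd])
        logits = self.W_out @ fused + self.b_out
        logits = logits - logits.max()  # subtract max for a numerically stable softmax
        exp = np.exp(logits)
        return exp / exp.sum()


if __name__ == "__main__":
    # Stand-in inputs: a random vector in place of a real [CLS] embedding and
    # 12 segments x 40 hypothetical psycholinguistic features.
    model = HybridEmotionClassifier(text_dim=768, feat_dim=40, hidden_dim=64, n_classes=7)
    rng = np.random.default_rng(1)
    cls_embedding = rng.normal(size=768)
    psych_features = rng.normal(size=(12, 40))
    probs = model.predict_proba(cls_embedding, psych_features)
    print(probs.shape, float(probs.sum()))
```

Here `n_classes=7` matches the seven emotion categories of ISEAR; GoEmotions would need a larger output layer. Concatenation is the simplest fusion choice; attention-based or gated fusion are alternatives, and in a real system the embedding would come from a fine-tuned transformer and every weight would be learned rather than sampled.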

翻译:近年来,人们越来越有兴趣建立预测模型,利用自然语言处理和机器学习技术来探测各种文字来源的情绪,包括社交媒体文章、微博客或新闻文章。然而,在现实世界情绪和情感应用中部署这种模型面临挑战,特别是一般语言特征差,特别是超出常规的差强人意。这很可能是由于具体领域的差异(例如主题、交流目标和注释计划)使得不同情感识别模式之间的转移变得困难。在这项工作中,我们提出了基于文字的情感检测方法,即利用基于文字的变压器模型(BERT和RoBERTA)与经过全面心理语言特征培训的双向长期记忆(BILSTM)网络相结合。首先,我们评估我们内部模型在两个基准数据集(GoEmotion和ISEAR)上的性能。第二,我们对统一情感数据集的六个数据集进行了学习实验,以评价其外向坚固性。我们发现,拟议的混合模型提高了在常规数据转换方面普遍应用的能力,比我们观察了标准数据转换。