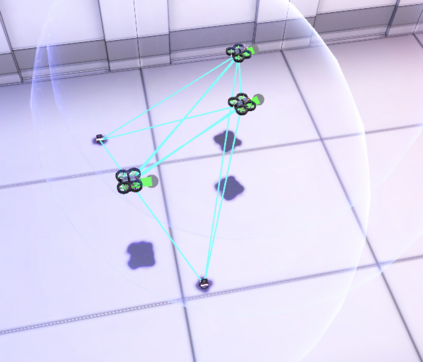

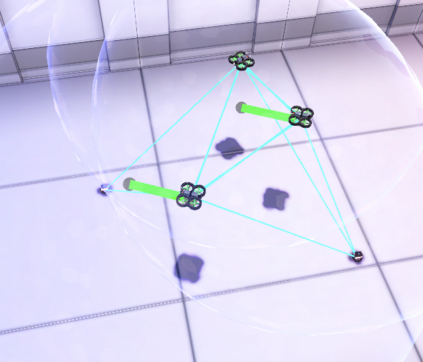

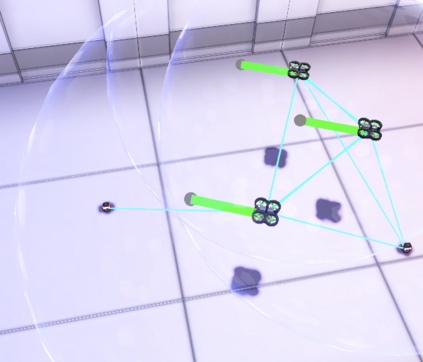

Addressing complex cooperative tasks in safety-critical environments poses significant challenges for multi-agent systems, especially under partial observability. We focus on a dynamic network bridging task, in which agents must learn to maintain a communication path between two moving targets. To ensure safety during training and deployment, we integrate a control-theoretic safety filter that enforces collision avoidance through local setpoint updates. We develop and evaluate multi-agent reinforcement learning with safety-informed message passing, showing that encoding safety filter activations as edge-level features improves coordination. The results suggest that local safety enforcement and decentralized learning can be effectively combined in distributed multi-agent tasks.
