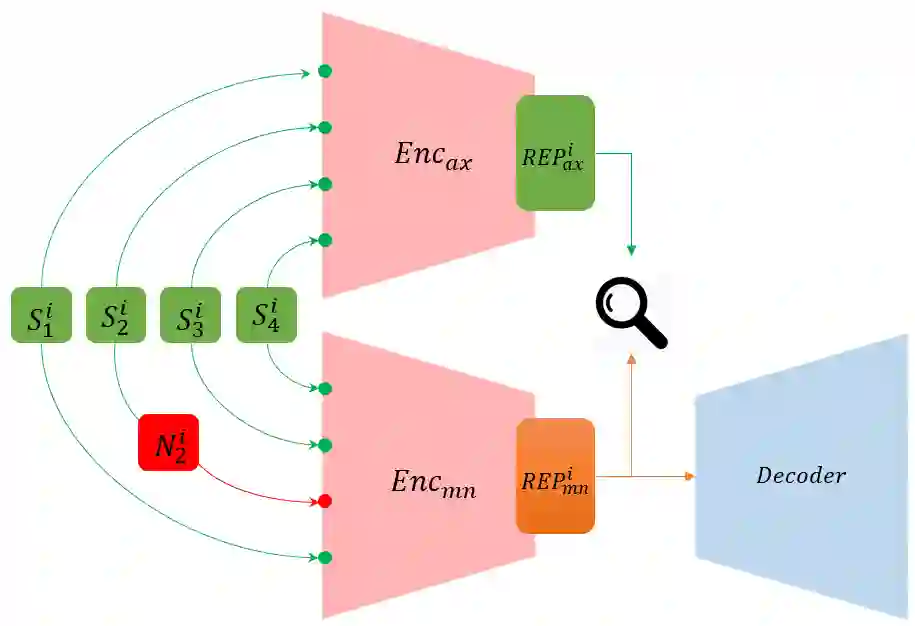

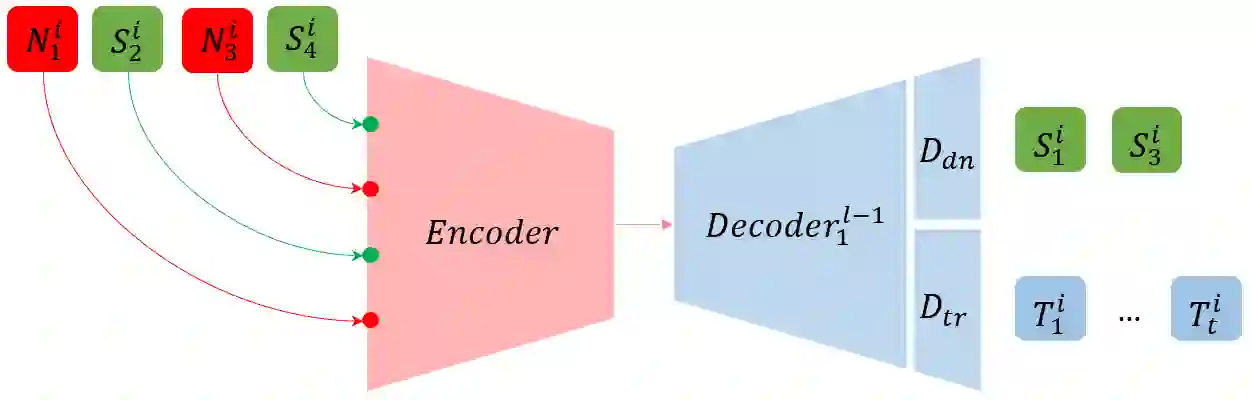

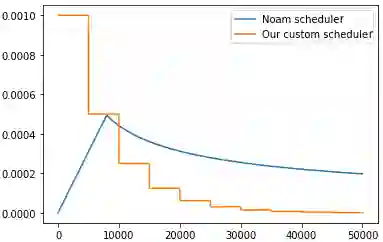

Transformers (Vaswani et al., 2017) have brought a remarkable improvement in the performance of neural machine translation (NMT) systems, but they can be surprisingly vulnerable to noise. In this work, we investigate how noise breaks Transformers and whether there are solutions to deal with such issues. There is a large body of work in the NMT literature analyzing how conventional models behave in the presence of noise, but Transformers are relatively understudied in this context. Motivated by this, we introduce a novel data-driven technique called Target Augmented Fine-tuning (TAFT) to incorporate noise during training. This idea is comparable to the well-known fine-tuning strategy. Moreover, we propose two other novel extensions to the original Transformer, Controlled Denoising (CD) and Dual-Channel Decoding (DCD), which modify the neural architecture as well as the training process to handle noise. One important characteristic of our techniques is that they only impact the training phase and do not impose any overhead at inference time. We evaluated our techniques on English-German translation in both directions and observed that our models have a higher tolerance to noise. More specifically, they show no deterioration in performance when up to 10% of all words in the test set are corrupted by noise.
