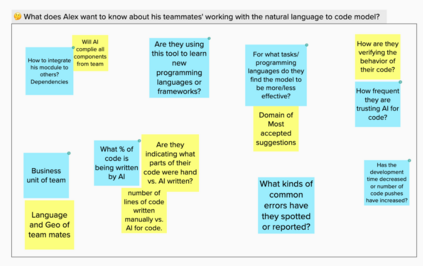

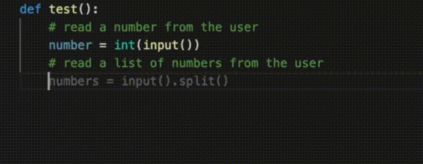

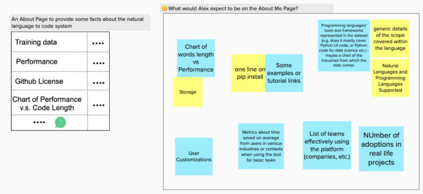

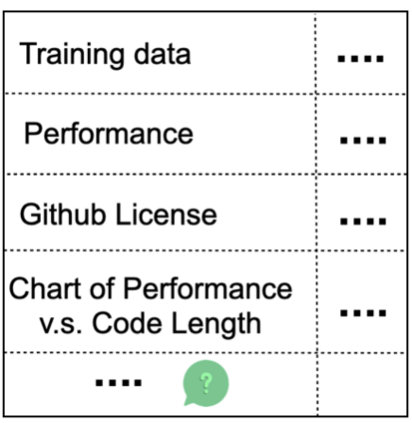

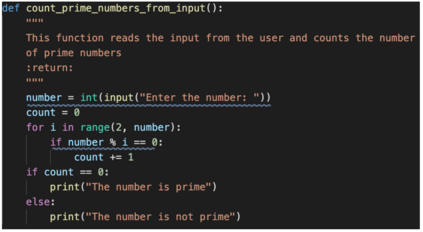

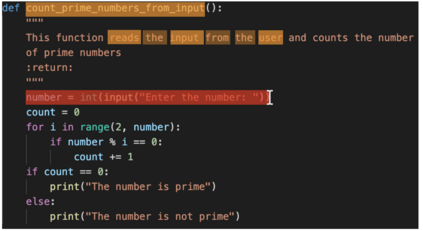

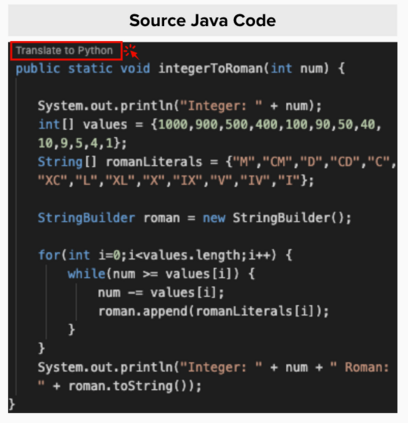

What does it mean for a generative AI model to be explainable? The emergent discipline of explainable AI (XAI) has made great strides in helping people understand discriminative models. Less attention has been paid to generative models that produce artifacts, rather than decisions, as output. Meanwhile, generative AI (GenAI) technologies are maturing and being applied to domains such as software engineering. Using scenario-based design and question-driven XAI design approaches, we explore users' explainability needs for GenAI in three software engineering use cases: natural language to code, code translation, and code auto-completion. We conducted 9 workshops with 43 software engineers in which real examples from state-of-the-art generative AI models were used to elicit users' explainability needs. Drawing from prior work, we also proposed 4 types of XAI features for GenAI for code and gathered additional design ideas from participants. Our work explores explainability needs for GenAI for code and demonstrates how human-centered approaches can drive the technical development of XAI in novel domains.
