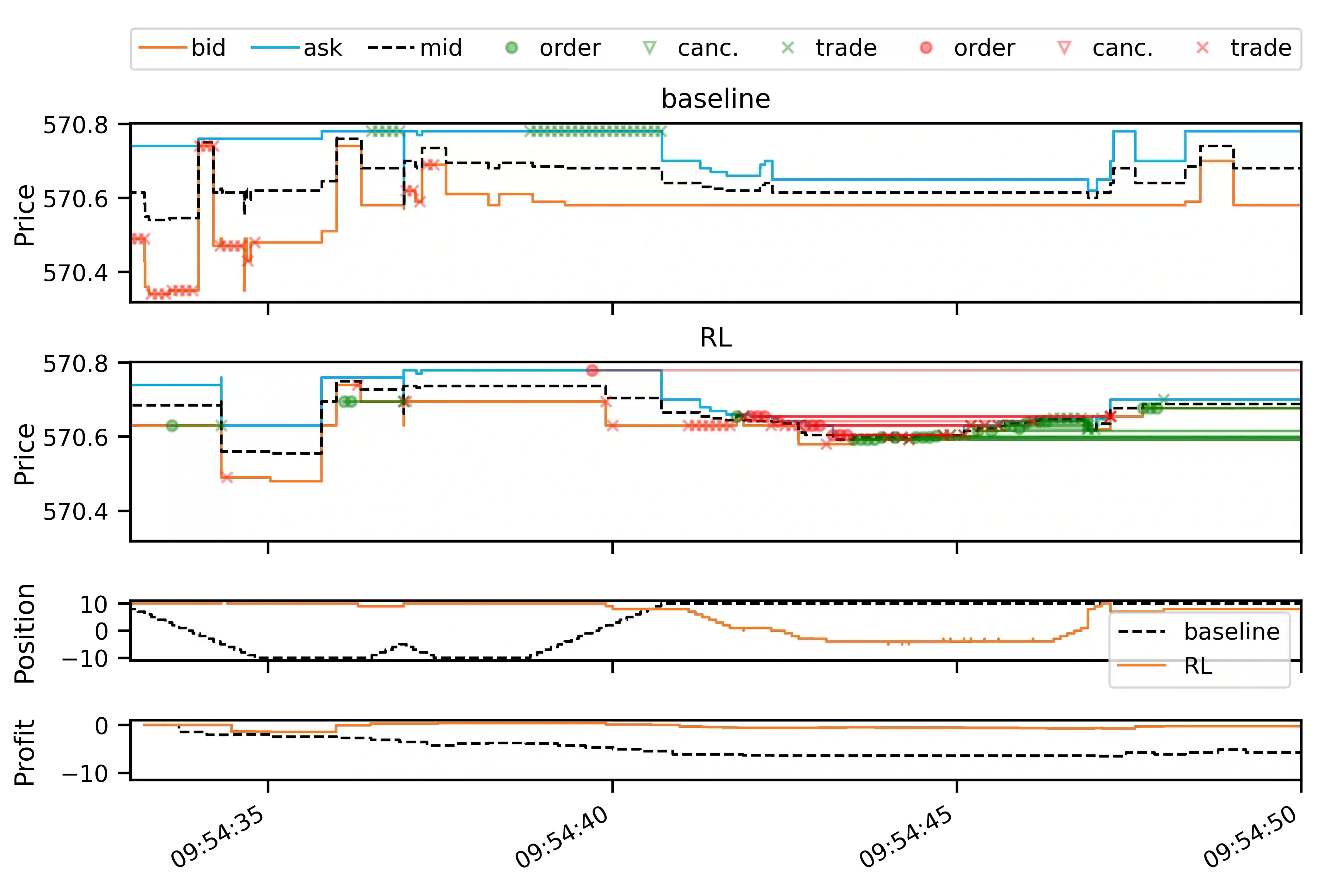

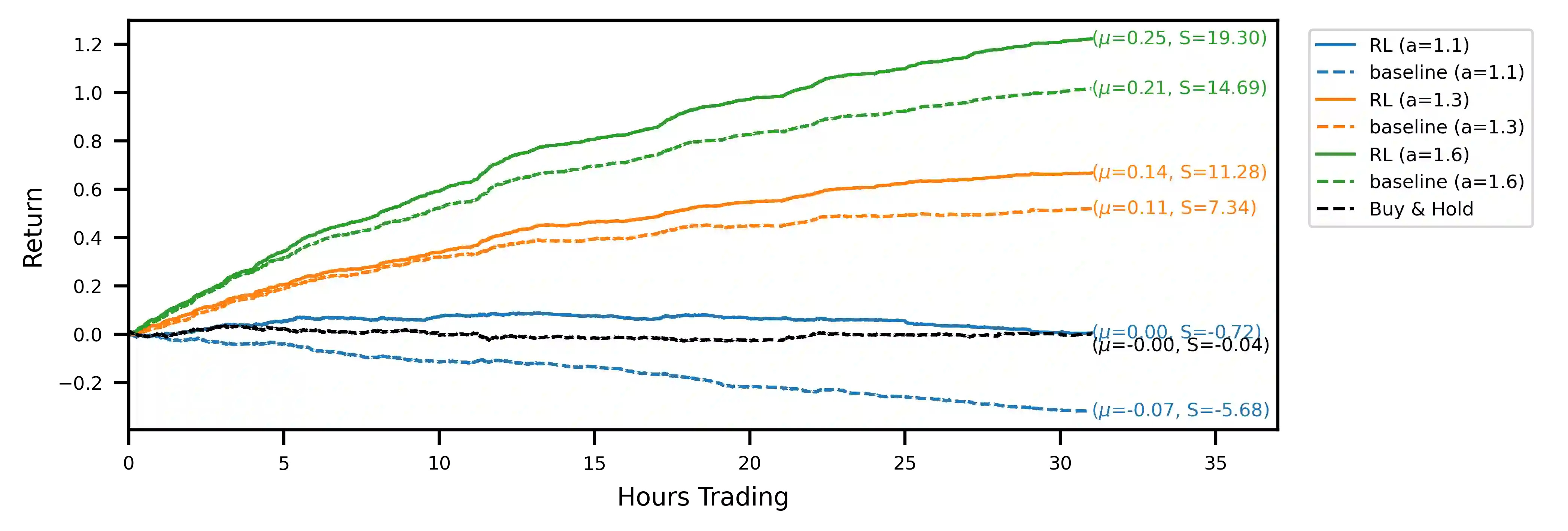

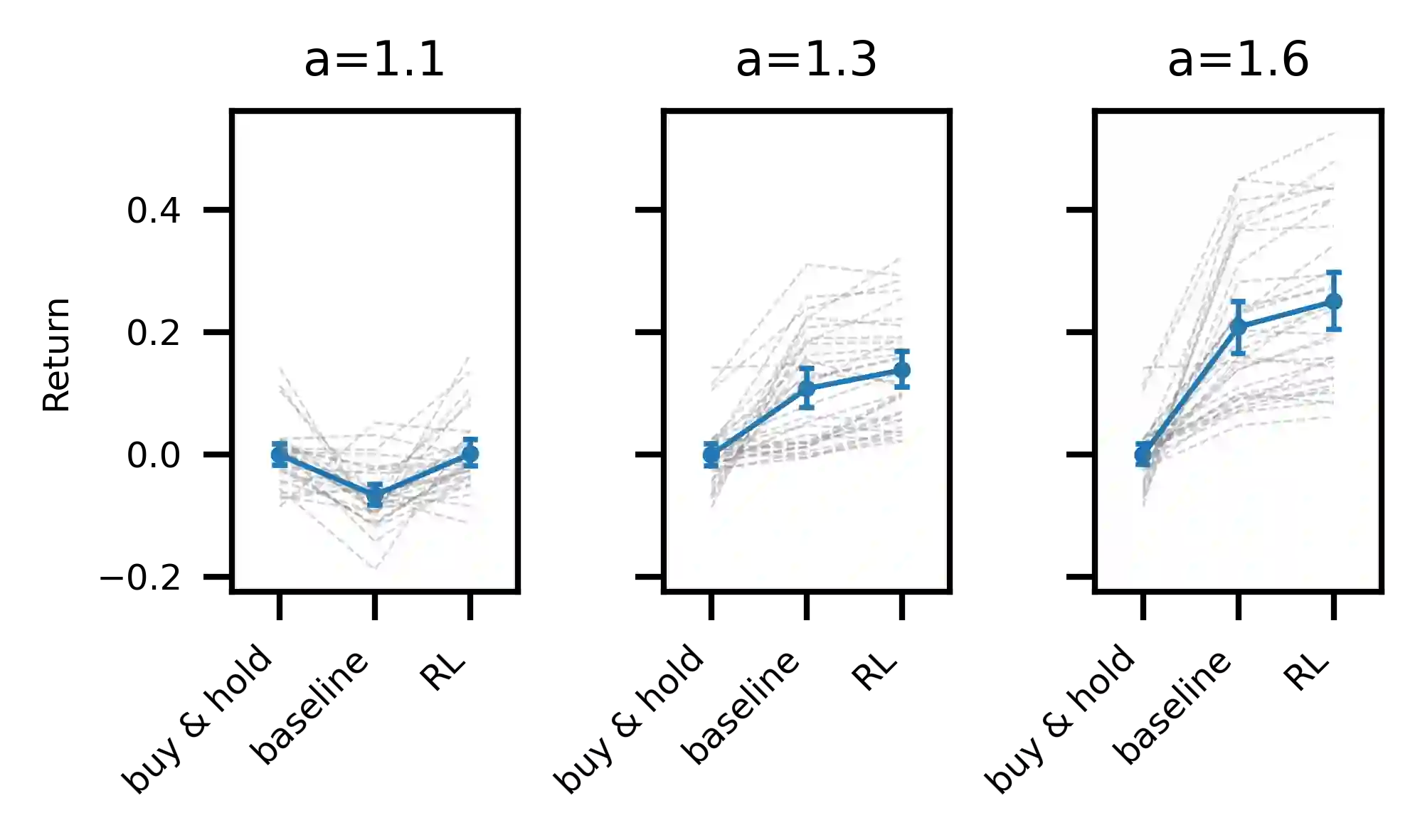

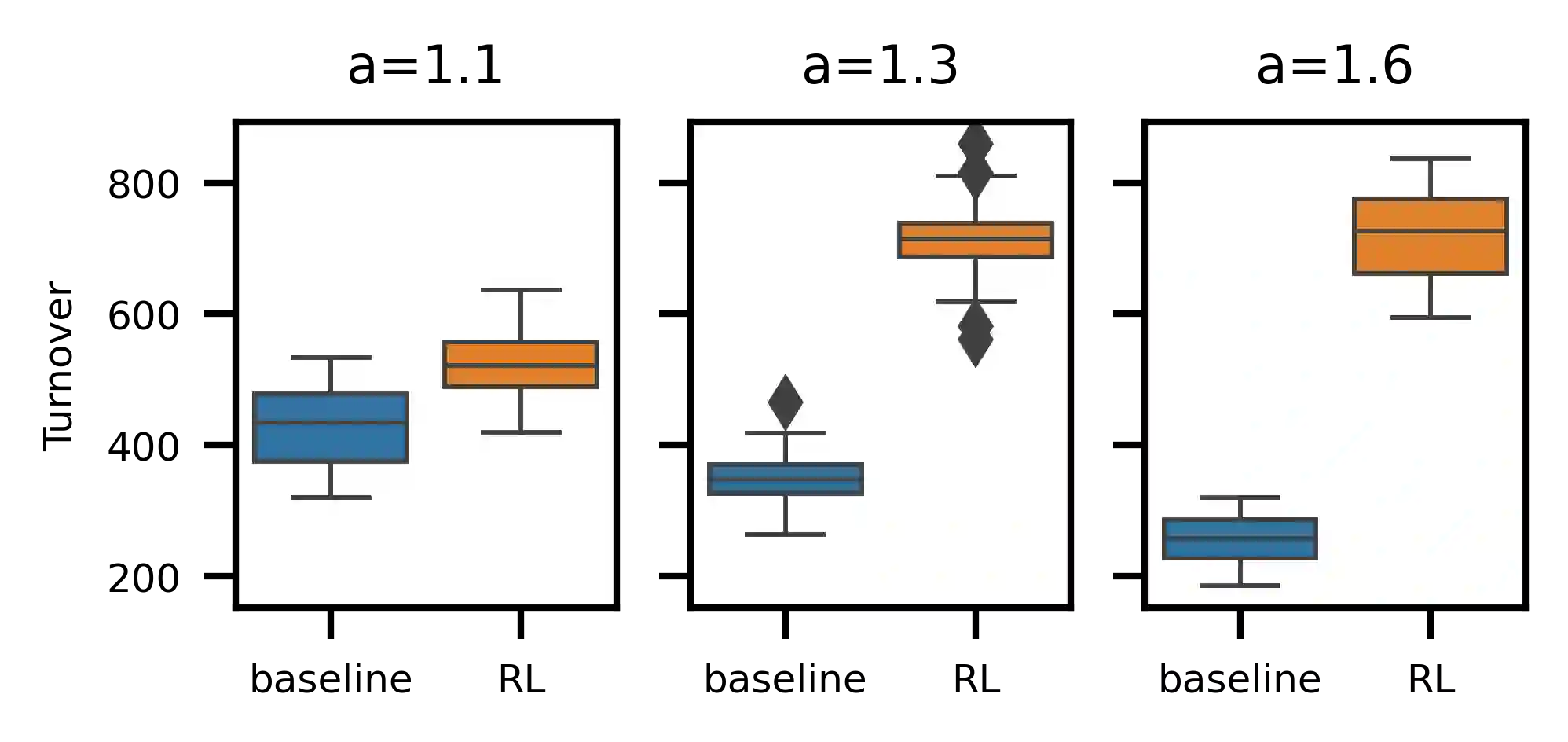

We employ deep reinforcement learning (RL) to train an agent that successfully translates a high-frequency trading signal into a trading strategy that places individual limit orders. Based on the ABIDES limit order book simulator, we build an OpenAI Gym reinforcement learning environment and use it to simulate a realistic trading environment for NASDAQ equities from historical order book messages. To train a trading agent that learns to maximise its trading return in this environment, we use Deep Duelling Double Q-learning with the APEX (asynchronous prioritised experience replay) architecture. The agent observes the current limit order book state, its recent history, and a short-term directional forecast. To investigate the performance of RL for adaptive trading independently of a specific forecasting algorithm, we evaluate our approach using synthetic alpha signals obtained by perturbing forward-looking returns with varying levels of noise. We find that the RL agent learns an effective trading strategy for inventory management and order placement that outperforms a heuristic benchmark trading strategy with access to the same signal.
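The synthetic alpha signal described above can be illustrated with a minimal sketch. It assumes a mid-price series, a fixed forecast horizon `horizon` (in events), additive i.i.d. Gaussian noise of standard deviation `noise_std`, and a hypothetical threshold rule that turns the noisy forecast into a directional signal. All function names and these modelling choices are illustrative assumptions; the paper's exact construction may differ.

```python
import numpy as np


def forward_returns(mid: np.ndarray, horizon: int) -> np.ndarray:
    """Simple return from t to t + horizon; NaN where the future price is unknown."""
    if horizon < 1:
        raise ValueError("horizon must be >= 1")
    mid = np.asarray(mid, dtype=float)
    fwd = np.full(mid.shape, np.nan)
    fwd[:-horizon] = mid[horizon:] / mid[:-horizon] - 1.0
    return fwd


def synthetic_alpha(mid, horizon, noise_std, rng):
    """True forward return plus i.i.d. Gaussian noise (assumed noise model).

    noise_std = 0 gives a perfect-foresight signal; larger values weaken it.
    """
    fwd = forward_returns(mid, horizon)
    return fwd + rng.normal(0.0, noise_std, size=fwd.shape)


def directional_forecast(signal, threshold):
    """Hypothetical discretisation into -1 (down), 0 (flat), +1 (up)."""
    s = np.nan_to_num(np.asarray(signal, dtype=float), nan=0.0)  # unknown -> flat
    return np.where(s > threshold, 1, np.where(s < -threshold, -1, 0))


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    # Toy random-walk mid-price, standing in for prices replayed from order book messages
    mid = 100.0 * np.exp(np.cumsum(rng.normal(0.0, 1e-3, 5_000)))
    true_dir = np.sign(forward_returns(mid, 10))
    for noise_std in (0.0, 1e-3, 1e-2):
        sig = synthetic_alpha(mid, 10, noise_std, rng)
        ok = ~np.isnan(sig)
        hit_rate = np.mean(np.sign(sig[ok]) == true_dir[ok])
        print(f"noise_std={noise_std:g}  directional hit rate={hit_rate:.3f}")
```

Sweeping `noise_std` gives a family of signals whose directional accuracy falls from perfect towards a coin flip. This mirrors how varying the noise level lets the agent be evaluated without committing to a concrete forecasting model.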

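The abstract names Deep Duelling Double Q-learning trained with the APEX (asynchronous prioritised experience replay) architecture. The NumPy sketch below shows the per-batch arithmetic such a learner typically performs, assuming the standard textbook formulations:

- duelling aggregation of value and advantage streams;
- Double Q-learning targets, where the online network selects the next action and the target network evaluates it;
- new priorities from absolute TD errors;
- proportional prioritised sampling with importance-sampling weights.

Function names, the batch layout, and the hyperparameters `gamma`, `alpha`, `beta`, `eps` are illustrative assumptions. Neural networks, n-step returns, and the distributed actor/learner processes of APEX are omitted, so this is not the paper's implementation.

```python
import numpy as np


def dueling_q(value, advantage):
    """Duelling head: Q(s, a) = V(s) + A(s, a) - mean over a' of A(s, a').

    value: shape (batch,); advantage: shape (batch, n_actions).
    """
    return value[:, None] + advantage - advantage.mean(axis=1, keepdims=True)


def double_dqn_targets(rewards, dones, gamma, q_next_online, q_next_target):
    """Double Q-learning bootstrap targets for a batch of transitions."""
    best = np.argmax(q_next_online, axis=1)                    # online net selects
    q_eval = q_next_target[np.arange(best.shape[0]), best]     # target net evaluates
    return rewards + gamma * (1.0 - dones) * q_eval            # no bootstrap if terminal


def td_priorities(q_taken, targets, eps=1e-6):
    """New replay priority = |TD error| + eps, so no transition gets zero probability."""
    return np.abs(targets - q_taken) + eps


def sample_prioritised(priorities, batch_size, alpha, beta, rng):
    """Proportional prioritisation: P(i) = p_i^alpha / sum_k p_k^alpha."""
    scaled = np.asarray(priorities, dtype=float) ** alpha
    probs = scaled / scaled.sum()
    idx = rng.choice(len(probs), size=batch_size, p=probs)
    # Importance-sampling weights, normalised by the batch maximum (a common simplification)
    weights = (len(probs) * probs[idx]) ** (-beta)
    return idx, weights / weights.max()


if __name__ == "__main__":
    q_online = np.array([[0.1, 0.9], [0.3, 0.2]])
    q_target = np.array([[5.0, 2.0], [7.0, 8.0]])
    targets = double_dqn_targets(np.array([1.0, 0.5]), np.array([0.0, 1.0]), 0.9,
                                 q_online, q_target)
    # Row 0: the online net picks action 1, so the target uses 2.0 rather than max 5.0.
    # Row 1 is terminal, so its target is just the reward 0.5.
    print(targets)
```

In the first row, a plain DQN target would bootstrap from the target network's own maximum, 5.0. Double Q-learning instead uses 2.0, the target network's value for the action the online network prefers. This decoupling is what reduces the overestimation bias of max-based targets.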