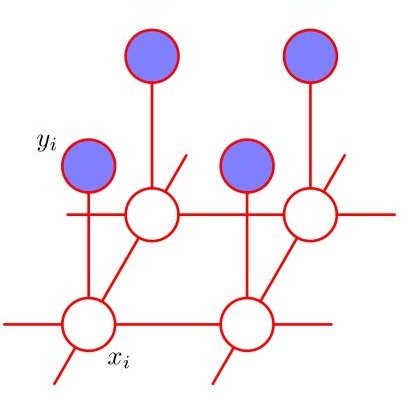

We propose a \emph{collaborative} multi-agent reinforcement learning algorithm, named variational policy propagation (VPP), that learns a \emph{joint} policy through interactions among agents. We prove that, under some mild conditions, the joint policy is a Markov Random Field, which in turn effectively reduces the policy space. We integrate variational inference into the policy as special differentiable layers, so that actions can be sampled efficiently from the Markov Random Field and the overall policy remains differentiable. We evaluate our algorithm on several challenging large-scale tasks and demonstrate that it outperforms previous state-of-the-art methods.
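To make the idea of inference layers concrete, the following is a minimal sketch, not the implementation from the paper. It assumes a pairwise Markov Random Field over discrete per-agent actions and uses synchronous mean-field updates as the variational inference step; the abstract does not say which variational scheme VPP uses. All names (`mean_field_policy`, `unary`, `pairwise`, `adjacency`, `sample_actions`) are hypothetical. Each update is a matrix product followed by a softmax, so the same computation would be differentiable in an autodiff framework; NumPy is used here only for illustration.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def mean_field_policy(unary, pairwise, adjacency, num_iters=10):
    """Approximate per-agent action marginals of a pairwise MRF.

    unary:     (N, A) per-agent action logits (assumed to come from a
               state-conditioned network, not shown here).
    pairwise:  (A, A) log-potential shared by all edges (an assumption).
    adjacency: (N, N) symmetric 0/1 matrix with zero diagonal.
    Returns q: (N, A), each row a distribution over that agent's actions.
    """
    q = softmax(unary)
    for _ in range(num_iters):
        # messages[i, a] = sum_j adjacency[i, j] * sum_b q[j, b] * pairwise[a, b]
        messages = adjacency @ (q @ pairwise.T)
        # One "layer": combine local logits with the neighbours' expected potentials.
        q = softmax(unary + messages)
    return q


def sample_actions(q, rng):
    """Draw one action per agent from the factorized approximation q."""
    return np.array([rng.choice(q.shape[1], p=row) for row in q])


# Hypothetical toy setup: 4 agents on a chain, 3 actions each.
rng = np.random.default_rng(0)
unary = rng.normal(size=(4, 3))
pairwise = np.eye(3)  # assumed potential that rewards neighbours agreeing
adjacency = np.array([[0, 1, 0, 0],
                      [1, 0, 1, 0],
                      [0, 1, 0, 1],
                      [0, 0, 1, 0]], dtype=float)
q = mean_field_policy(unary, pairwise, adjacency)
actions = sample_actions(q, rng)
```

In this sketch the number of iterations plays the role of network depth: each pass propagates information one hop further along the agent graph, and only neighbouring agents exchange messages, which reflects how a graph-structured policy avoids enumerating the full joint action space.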
