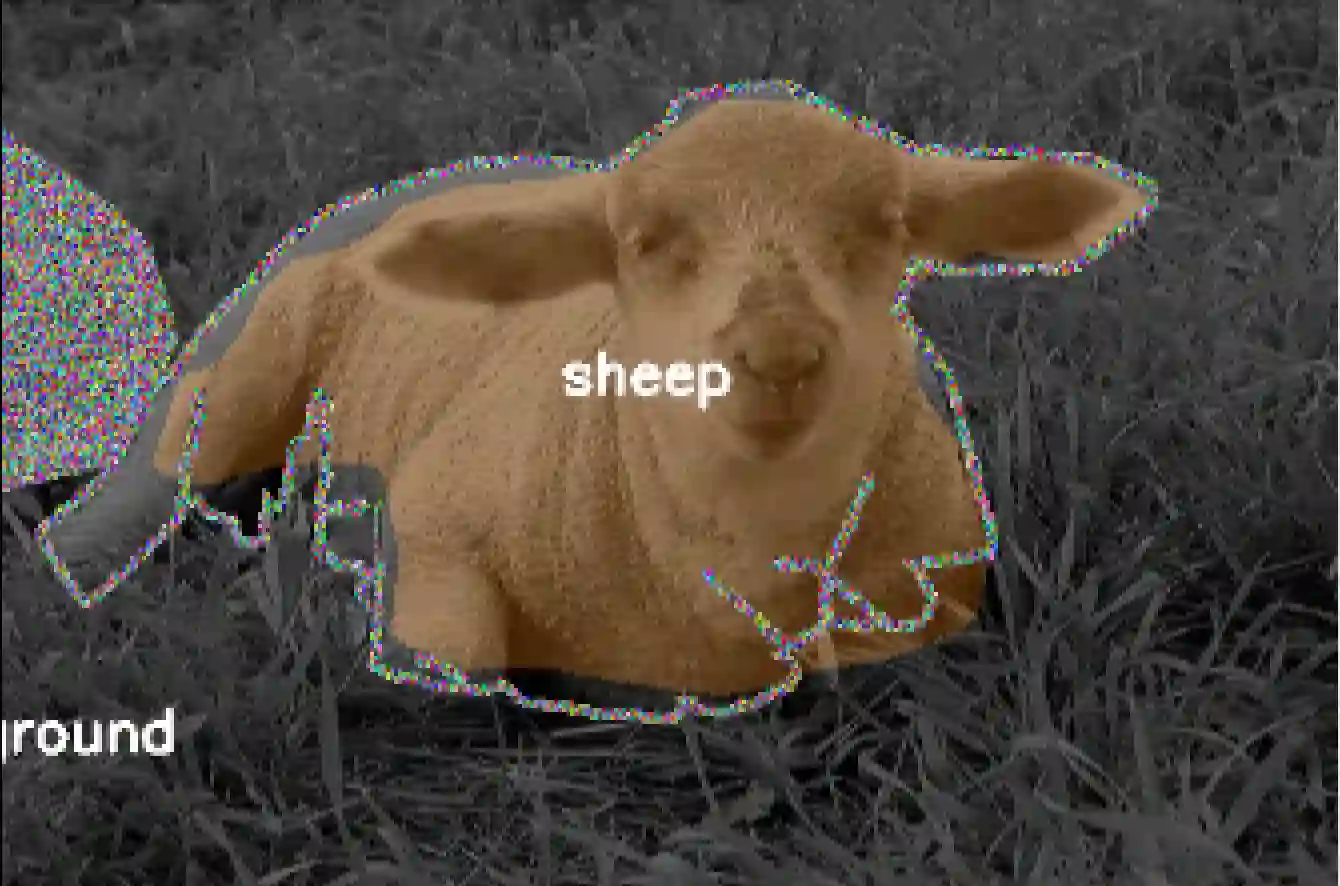

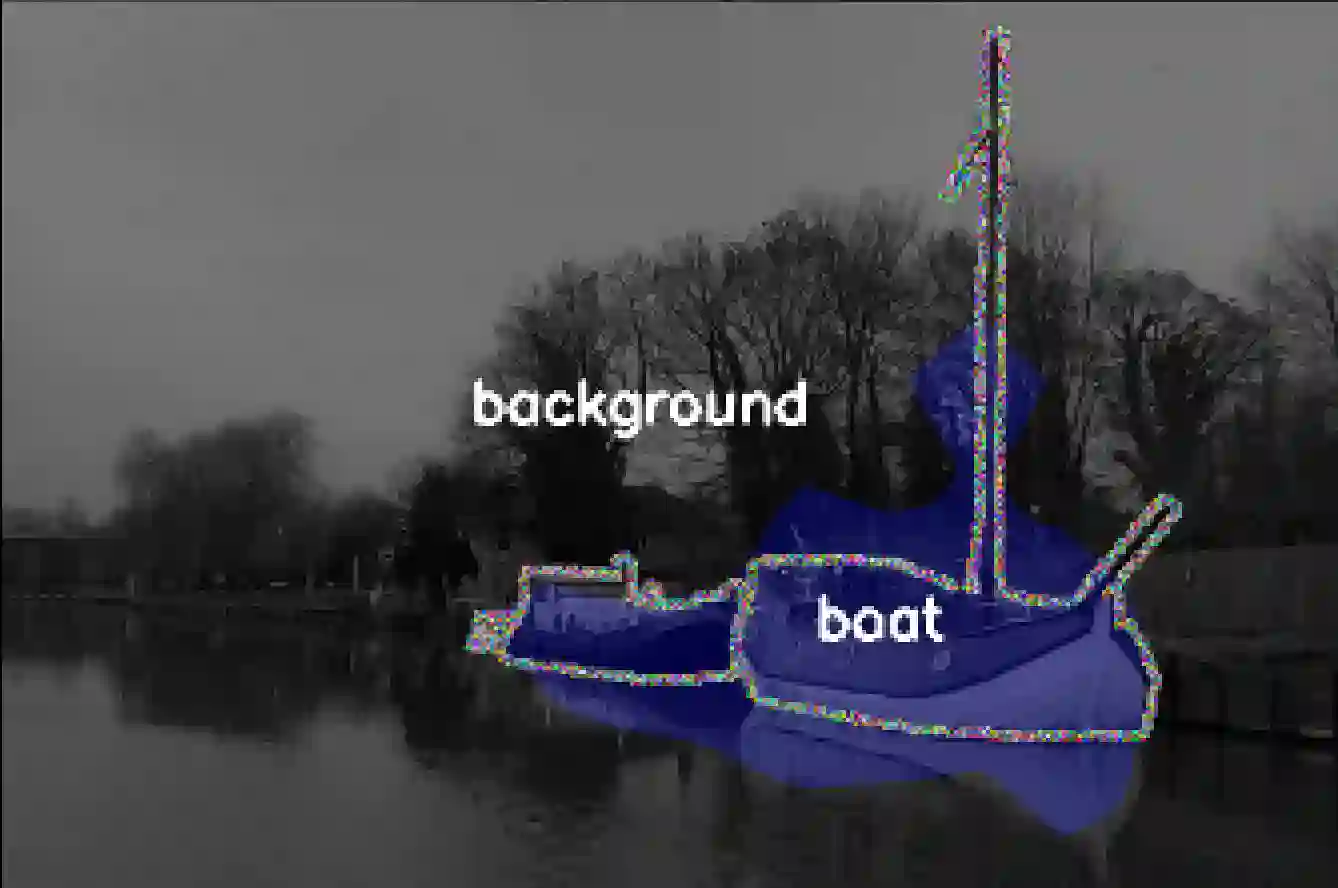

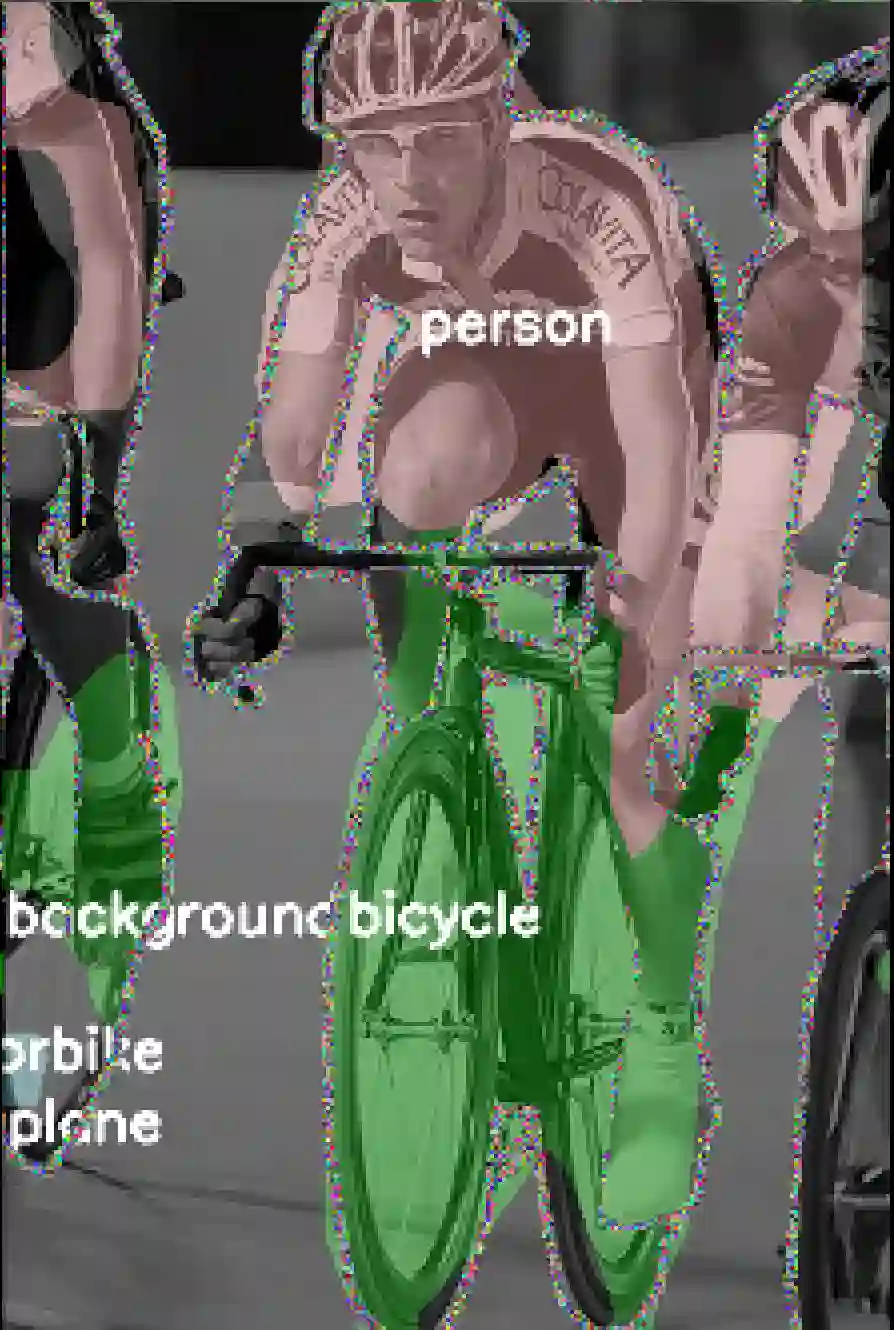

Filter pruning is one of the most effective ways to accelerate and compress convolutional neural networks (CNNs). In this work, we propose a global filter pruning algorithm called Gate Decorator, which transforms a vanilla CNN module by multiplying its output by channel-wise scaling factors, i.e., gates. Setting a scaling factor to zero is equivalent to removing the corresponding filter. We use a Taylor expansion to estimate the change in the loss function caused by setting each scaling factor to zero, and use this estimate for the global filter importance ranking. We then prune the network by removing the unimportant filters. After pruning, we merge the scaling factors back into their original modules, so no special operations or structures are introduced. Moreover, we propose an iterative pruning framework called Tick-Tock to improve pruning accuracy. Extensive experiments demonstrate the effectiveness of our approaches. For example, we achieve the state-of-the-art pruning ratio on ResNet-56 by reducing FLOPs by 70% without noticeable loss in accuracy. For ResNet-50 on ImageNet, our pruned model with a 40% FLOPs reduction outperforms the baseline model by 0.31% in top-1 accuracy. We evaluate on various datasets, including CIFAR-10, CIFAR-100, CUB-200, ImageNet ILSVRC-12, and PASCAL VOC 2011. Code is available at github.com/youzhonghui/gate-decorator-pruning
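
The gate idea described above can be illustrated with a minimal pure-Python sketch. It is not the released implementation: the toy single-layer "network", the squared-error loss, the first-order form of the Taylor estimate, and all names (`channel_outputs`, `gate_importance`, `prune_and_merge`) are assumptions made for illustration. Each channel's response is multiplied by a gate, the loss change from zeroing a gate is estimated as |∂L/∂gate · gate| accumulated over samples, the lowest-scoring channel is removed, and the remaining gates are folded into the weights.

```python
def channel_outputs(weights, x):
    """Un-gated response of each channel (a dot product stands in for a conv filter)."""
    return [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in weights]


def gate_importance(weights, gates, data):
    """First-order Taylor estimate of the loss change from zeroing each gate.

    Assumed toy loss: L = (y - t)^2 with y = sum_c gate_c * a_c, so
    dL/dgate_c = 2 * (y - t) * a_c. The score is |dL/dgate_c * gate_c|,
    accumulated over all samples.
    """
    scores = [0.0] * len(gates)
    for x, t in data:
        a = channel_outputs(weights, x)
        y = sum(g * a_c for g, a_c in zip(gates, a))
        for c, (g, a_c) in enumerate(zip(gates, a)):
            grad = 2.0 * (y - t) * a_c
            scores[c] += abs(grad * g)
    return scores


def prune_and_merge(weights, gates, scores, n_prune):
    """Drop the n_prune lowest-scoring channels, then fold each gate into its weights."""
    order = sorted(range(len(gates)), key=lambda c: scores[c])
    keep = sorted(order[n_prune:])
    merged = [[gates[c] * w_i for w_i in weights[c]] for c in keep]
    return keep, merged


# Hypothetical toy values: three channels, two input features, two samples.
weights = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
gates = [1.0, 0.01, 0.5]
data = [([1.0, 2.0], 1.0), ([2.0, 1.0], 3.0)]

scores = gate_importance(weights, gates, data)
keep, merged = prune_and_merge(weights, gates, scores, n_prune=1)
print("importance scores:", scores)
print("kept channels:", keep)
print("merged weights:", merged)
```

In this toy setting the ranking covers a single layer. In a multi-layer network, the global ranking described above would compare gate scores across all layers at once. After merging, the pruned model contains only ordinary weights, which matches the claim that no special operations or structures remain.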
