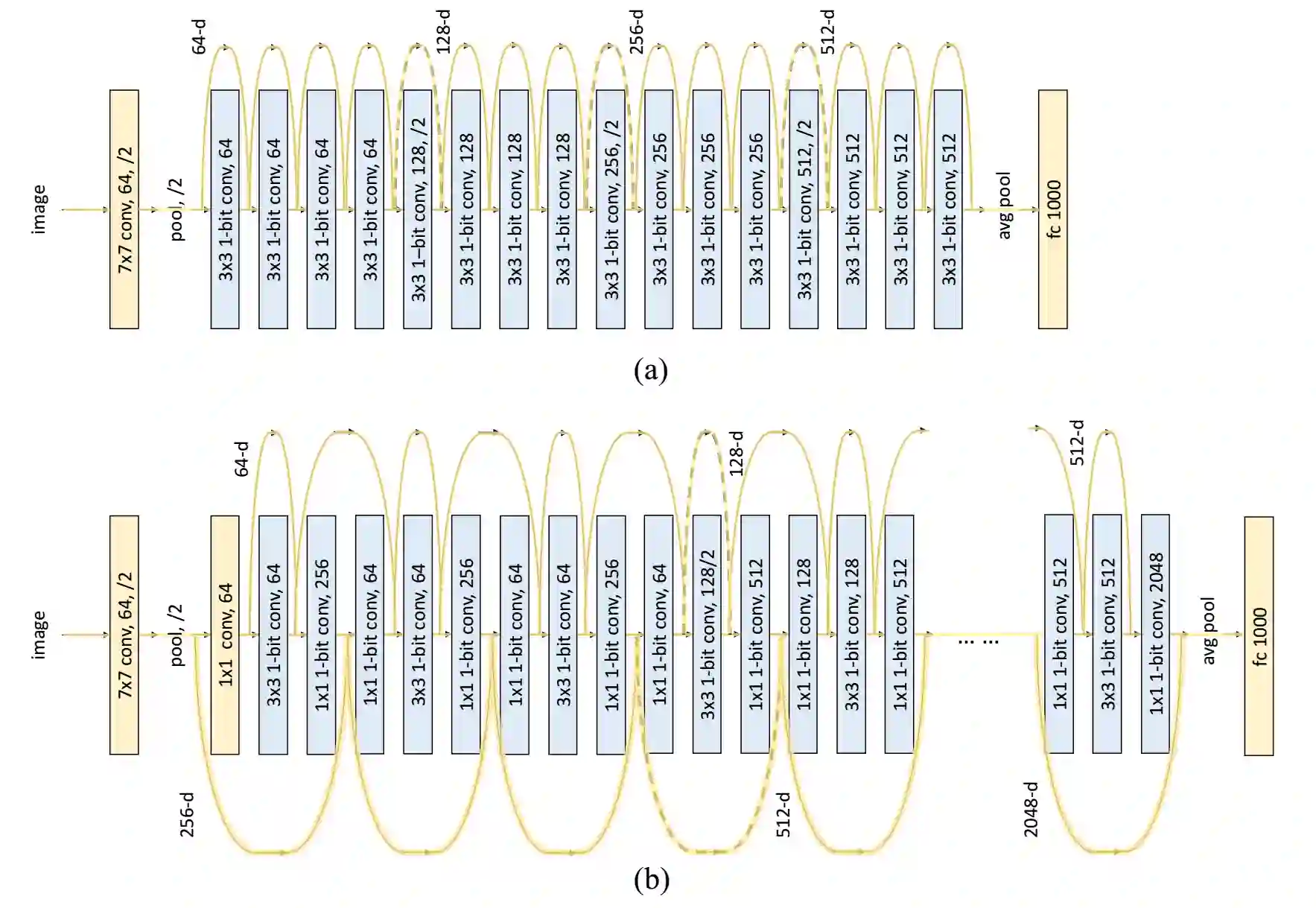

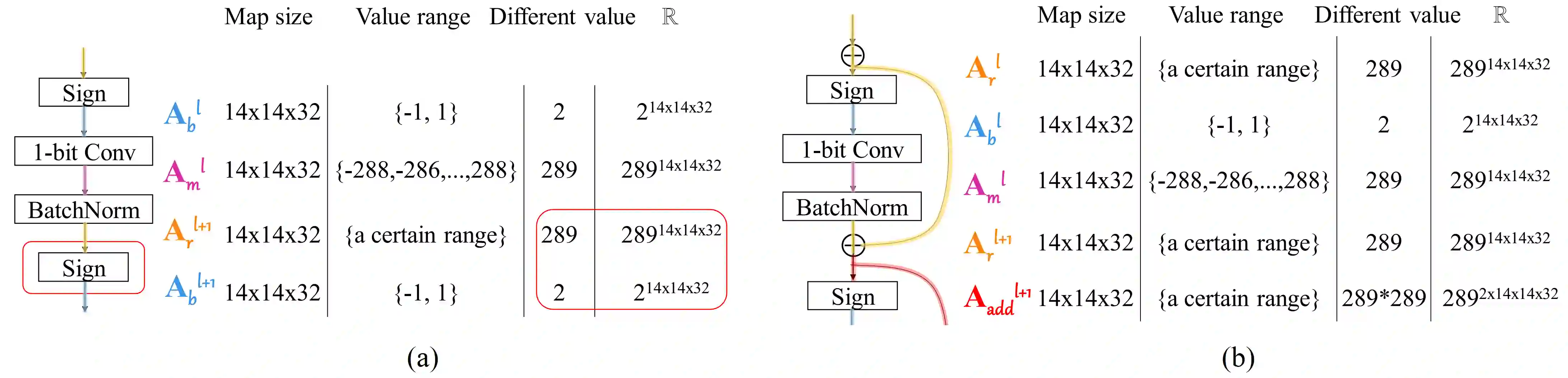

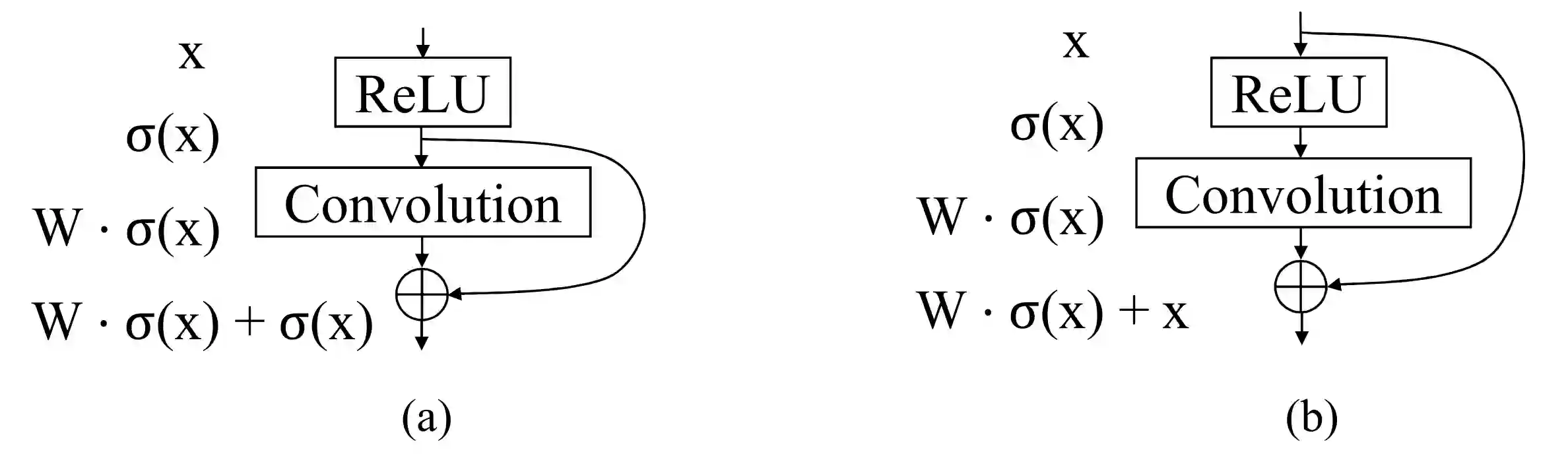

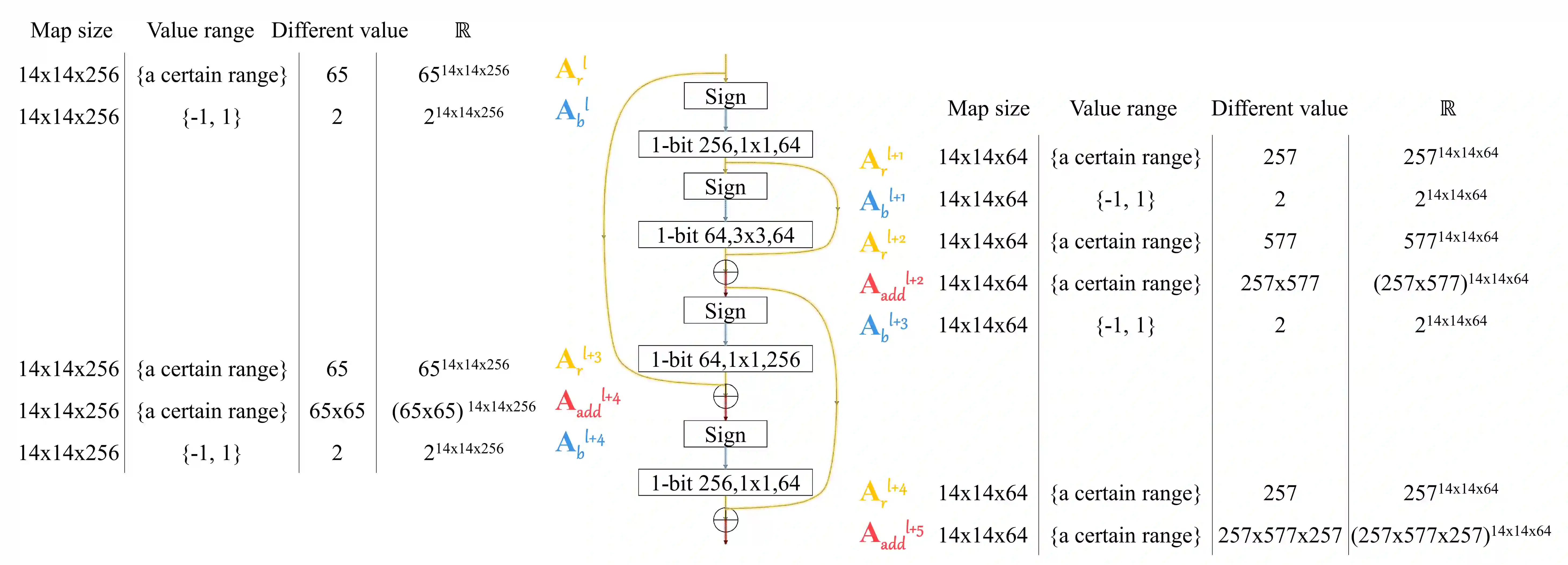

In this paper, we study 1-bit convolutional neural networks (CNNs), in which both the weights and the activations are binary. Although efficient, 1-bit CNNs fall short of real-valued networks because of their limited representational capability and the difficulty of training them. We propose Bi-Real net, together with a novel training algorithm, to tackle these two challenges. To enhance representational capability, we propagate the real-valued activations generated by each 1-bit convolution through a parameter-free shortcut. To address the training difficulty, we propose a training algorithm that uses a tighter approximation to the derivative of the sign function, a magnitude-aware gradient for weight updating, a better initialization method, and a two-step scheme for training a deep network. Experiments on ImageNet show that an 18-layer Bi-Real net trained with the proposed algorithm achieves 56.4% top-1 classification accuracy, 10% higher than state-of-the-art methods (e.g., XNOR-Net), with greater memory savings and lower computational cost. Bi-Real net is also the first to scale 1-bit CNNs up to an ultra-deep network with 152 layers, where it achieves 64.5% top-1 accuracy on ImageNet. On the depth estimation task, a 50-layer Bi-Real net performs comparably to a real-valued network, with an accuracy gap of only 0.3%.
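To make the abstract's ingredients concrete, here is a minimal pure-Python sketch under stated assumptions, not the authors' implementation. It assumes a piecewise-quadratic surrogate `approx_sign` whose derivative is a triangle peaking at 0 (the "tighter approximation" to the sign derivative), a per-output-channel scale `mean(|w|)` for magnitude-aware weight binarization, and a backward rule that scales the upstream gradient by that mean and passes it only where `|w| < 1`. A dense layer stands in for the 1-bit convolution, and batch normalization, spatial structure, initialization, and two-step training are omitted. All function names are hypothetical.

```python
from typing import List


def sign(x: float) -> float:
    """Forward binarization. Mapping 0 to +1 is an assumption of this sketch."""
    return 1.0 if x >= 0.0 else -1.0


def approx_sign(x: float) -> float:
    """Assumed piecewise-quadratic surrogate for sign(x); only its derivative is used."""
    if x < -1.0:
        return -1.0
    if x < 0.0:
        return 2.0 * x + x * x
    if x < 1.0:
        return 2.0 * x - x * x
    return 1.0


def approx_sign_grad(x: float) -> float:
    """Derivative of approx_sign: a triangle peaking at x = 0, zero for |x| >= 1.
    It replaces the true derivative of sign, which is zero almost everywhere."""
    if -1.0 <= x < 0.0:
        return 2.0 + 2.0 * x
    if 0.0 <= x < 1.0:
        return 2.0 - 2.0 * x
    return 0.0


def binarize_row(w_real: List[float]) -> List[float]:
    """Magnitude-aware binarization of one output channel: mean(|w|) * sign(w)."""
    scale = sum(abs(w) for w in w_real) / len(w_real)
    return [scale * sign(w) for w in w_real]


def magnitude_aware_weight_grad(w_real: List[float], grad_wb: List[float]) -> List[float]:
    """Assumed backward rule for the real-valued weights: the gradient on the
    binarized weights is scaled by mean(|w|) and passed only where |w| < 1."""
    scale = sum(abs(w) for w in w_real) / len(w_real)
    return [g * scale * (1.0 if abs(w) < 1.0 else 0.0) for w, g in zip(w_real, grad_wb)]


def binary_linear(a_real: List[float], w_rows: List[List[float]]) -> List[float]:
    """Stand-in for a 1-bit convolution: sign(activations) times binarized weight rows.
    The output is real-valued because each row carries its own scale."""
    a_b = [sign(a) for a in a_real]
    return [sum(x * w for x, w in zip(a_b, binarize_row(row))) for row in w_rows]


def bi_real_block(a_real: List[float], w_rows: List[List[float]]) -> List[float]:
    """Parameter-free shortcut: add the block's real-valued input to the
    real-valued output of the 1-bit layer (batch norm omitted)."""
    if len(w_rows) != len(a_real):
        raise ValueError("shortcut needs as many output channels as input channels")
    out = binary_linear(a_real, w_rows)
    return [o + a for o, a in zip(out, a_real)]
```

Compared with a clip-style straight-through estimator, which passes a constant gradient of 1 on `[-1, 1]`, the assumed `approx_sign_grad` gives larger gradients near 0 and smaller ones near ±1, which is one way to read "tighter approximation". In `bi_real_block`, the real-valued input survives the sign operation through the addition, which is the representational gain the shortcut is meant to provide.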
