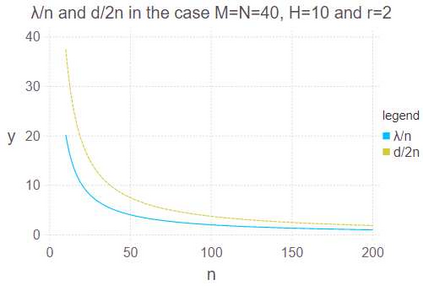

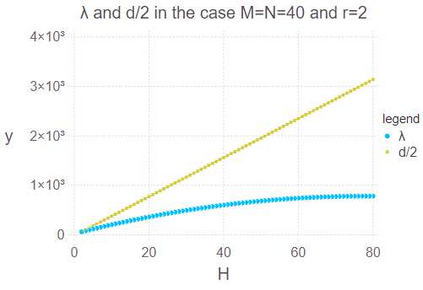

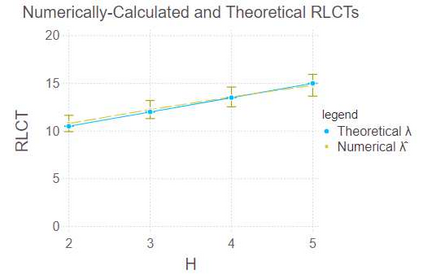

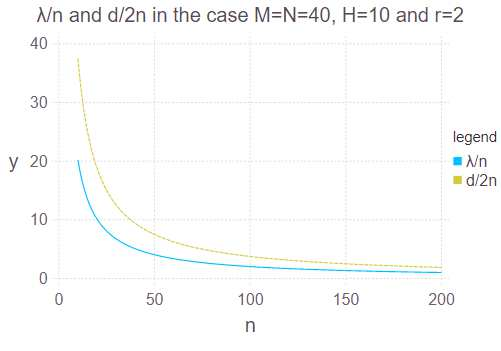

Latent Dirichlet allocation (LDA) extracts essential information from data by means of Bayesian inference. It is applied to knowledge discovery via dimensionality reduction and clustering in many fields. However, its generalization error has not yet been clarified, because it is a singular statistical model: the map from parameters to probability distributions is not one-to-one. In this paper, we give the exact asymptotic forms of its generalization error and marginal likelihood by theoretically analyzing its learning coefficient using algebraic geometry. The theoretical result shows that the Bayesian generalization error of LDA is expressed in terms of that of matrix factorization plus a penalty arising from the simplex restriction on LDA's parameter region. A numerical experiment is consistent with the theoretical result.
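Why LDA is singular can be illustrated with a minimal sketch. It assumes the common view of LDA's parametrization as a product of two column-stochastic matrices: a word-given-topic matrix `A` and a topic-given-document matrix `B`, both constrained to the simplex column by column. The names `A`, `B`, `random_stochastic`, `word_given_doc`, `equivalent_curve`, and the matrix sizes are hypothetical and chosen only for illustration. The code does not compute the learning coefficient. It only shows that relabeling topics, and, more importantly, trading weight between two topics that share a word distribution, leave the word-given-document distribution unchanged.

```python
import numpy as np

# Hypothetical illustration: LDA's word-given-document probabilities viewed as
# P = A @ B with column-stochastic A (M x H) and B (H x N). Sizes are arbitrary.


def random_stochastic(rows, cols, rng):
    """Sample a (rows x cols) matrix whose columns lie on the probability simplex."""
    return rng.dirichlet(np.ones(rows), size=cols).T


def word_given_doc(A, B):
    """P[j, k] = sum_h A[j, h] * B[h, k]; columns of P are word distributions."""
    return A @ B


def equivalent_curve(A, B, ts):
    """Make topics 0 and 1 identical, then split their combined weight as (t, 1 - t).

    Every t in [0, 1] keeps B column-stochastic and yields the same P.
    """
    A_red = A.copy()
    A_red[:, 1] = A_red[:, 0]  # topics 0 and 1 now share one word distribution
    total = B[0] + B[1]  # combined weight of topics 0 and 1 in each document
    curve = []
    for t in ts:
        B_t = B.copy()
        B_t[0] = t * total
        B_t[1] = (1.0 - t) * total
        curve.append(word_given_doc(A_red, B_t))
    return curve


rng = np.random.default_rng(0)
M, H, N = 5, 3, 4  # vocabulary size, number of topics, number of documents
A = random_stochastic(M, H, rng)  # column h: word distribution of topic h
B = random_stochastic(H, N, rng)  # column k: topic proportions of document k
P = word_given_doc(A, B)

# Relabeling topics gives a different parameter but the same distribution.
perm = [2, 0, 1]
P_perm = word_given_doc(A[:, perm], B[perm, :])

curve = equivalent_curve(A, B, ts=[0.0, 0.25, 0.5, 0.9])

print(np.allclose(P.sum(axis=0), 1.0))  # True: each column of P is a distribution
print(np.allclose(P, P_perm))  # True: topic labels are not identifiable
print(all(np.allclose(curve[0], Q) for Q in curve))  # True: many parameters, one P
```

Relabeling yields only finitely many equivalent parameters, whereas the last check exhibits a continuous curve of them. This kind of degeneracy, which appears when the model has more topics than the data require, is what makes the model singular in the sense described above.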

翻译:Drichlet 分配(LDA) 利用Bayesian推论从数据中获取基本信息,用于通过减少维度和在许多领域分组进行知识发现,然而,其一般化错误尚未澄清,因为它是一个单一的统计模型,没有从参数到概率分布的一对一绘图。在本文中,我们通过使用代数几何法对其学习系数进行理论分析,给出其一般化错误和边际可能性的准确无症状形式。理论结果表明,Bayesian 常规化错误表现在矩阵系数化和LDA参数区域简单x限制的处罚中。一个数字实验与理论结果一致。