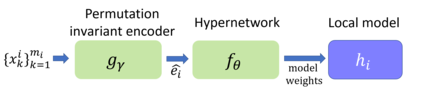

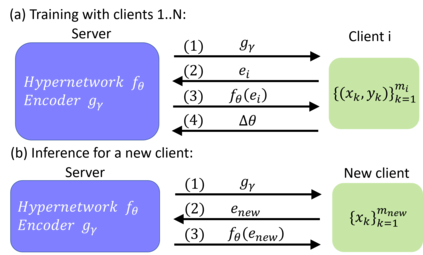

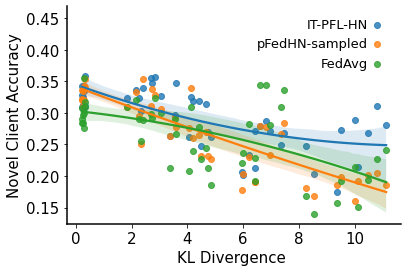

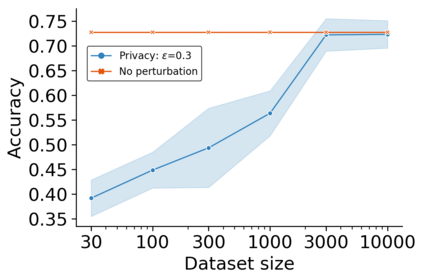

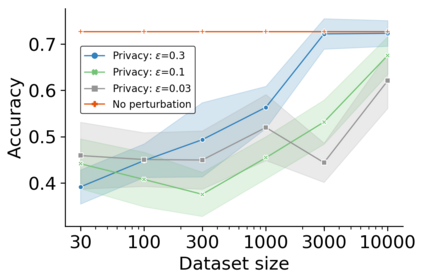

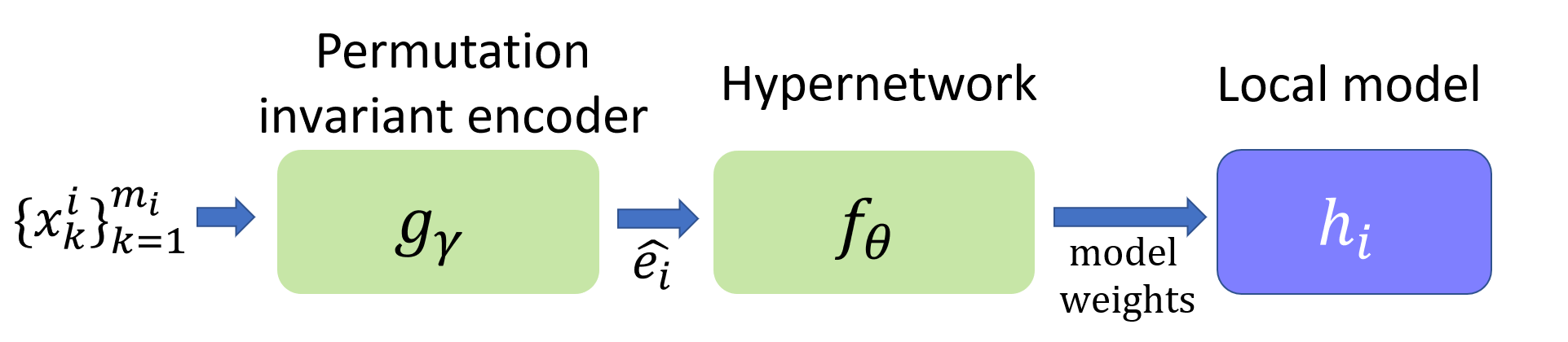

In federated learning (FL), multiple clients collaborate through a central server to learn a shared model while keeping their data decentralized. Personalized federated learning (PFL) extends FL by learning a personalized model for each client. In both FL and PFL, all clients participate in the training process, and their labeled data is used for training. In practice, however, novel clients may wish to join a prediction service after it has been deployed, obtaining predictions for their own unlabeled data. Here, we introduce a new learning setup, Inference-Time Unlabeled PFL (ITU-PFL), in which a system trained on a set of clients must later be applied to novel, unlabeled clients at inference time. We propose a novel approach to this problem, ITUFL-HN, which uses a hypernetwork to produce a new model for the late-to-the-party client. Specifically, we train an encoder network that learns a representation of a client from its unlabeled data. That client representation is fed to a hypernetwork that generates a personalized model for that client. On five benchmark datasets, we find that ITUFL-HN generalizes better than current FL and PFL methods, especially when the novel client has a large domain shift from the training clients. We also analyze the generalization error for novel clients and show, analytically and experimentally, how novel clients can apply differential privacy to their data.
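The encoder-plus-hypernetwork pipeline described above can be illustrated with a small forward-pass sketch in plain Python. It rests on our own simplifying assumptions, not the authors' architecture: the encoder (`ClientEncoder`, a hypothetical name) embeds each unlabeled sample with one tanh layer and mean-pools the results, the hypernetwork (`HyperNetwork`, hypothetical) is a single linear map, and the generated personalized model is a linear classifier. All weights are random placeholders; in the setup described they would be learned on the labeled training clients.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def random_matrix(rows: int, cols: int, rng: random.Random, scale: float = 0.1) -> Matrix:
    """Small Gaussian-initialised matrix (placeholder for trained weights)."""
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product on nested lists."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LinearClassifier:
    """The personalized target model: logits = W x + b."""

    def __init__(self, weights: Matrix, bias: Vector):
        self.weights = weights
        self.bias = bias

    def predict(self, x: Vector) -> int:
        logits = [s + b for s, b in zip(matvec(self.weights, x), self.bias)]
        return max(range(len(logits)), key=logits.__getitem__)


class ClientEncoder:
    """Hypothetical encoder: a set of unlabeled inputs -> one client embedding.

    Each sample passes through a shared tanh layer; the per-sample features
    are then averaged, so the embedding ignores sample order (DeepSets-style).
    """

    def __init__(self, in_dim: int, emb_dim: int, rng: random.Random):
        self.w = random_matrix(emb_dim, in_dim, rng)

    def __call__(self, samples: List[Vector]) -> Vector:
        feats = [[math.tanh(z) for z in matvec(self.w, x)] for x in samples]
        n = len(feats)
        return [sum(f[j] for f in feats) / n for j in range(len(feats[0]))]


class HyperNetwork:
    """Hypothetical hypernetwork: client embedding -> classifier weights."""

    def __init__(self, emb_dim: int, in_dim: int, n_classes: int, rng: random.Random):
        self.in_dim = in_dim
        self.n_classes = n_classes
        n_params = n_classes * (in_dim + 1)  # weight matrix plus bias vector
        self.h = random_matrix(n_params, emb_dim, rng)

    def __call__(self, emb: Vector) -> LinearClassifier:
        flat = matvec(self.h, emb)  # one output per target-model parameter
        k = self.n_classes * self.in_dim
        weights = [flat[r * self.in_dim:(r + 1) * self.in_dim] for r in range(self.n_classes)]
        return LinearClassifier(weights, flat[k:])


rng = random.Random(0)
encoder = ClientEncoder(in_dim=4, emb_dim=3, rng=rng)
hypernet = HyperNetwork(emb_dim=3, in_dim=4, n_classes=2, rng=rng)

# A novel client arrives after deployment with unlabeled data only.
novel_data = [[rng.uniform(-1.0, 1.0) for _ in range(4)] for _ in range(20)]
model = hypernet(encoder(novel_data))  # personalized model, no labels needed
predictions = [model.predict(x) for x in novel_data]
```

Mean pooling makes the client embedding independent of sample order and of how many samples the client holds, which suits a client arriving with an arbitrary unlabeled set. In this sketch, serving a late-to-the-party client takes only one forward pass through the encoder and the hypernetwork.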

翻译:在联邦学习(FL)中,多个客户通过中央服务器合作学习共享模式,同时保持数据分散。个性化联邦学习(PFL)通过每个客户学习个性化模式,进一步扩大FL(FFL),进一步扩展FL。在FL和PFL中,所有客户都参与培训过程并使用其标签数据进行培训。然而,在现实中,新客户可能希望在部署后加入一个预测服务,获得自己未贴标签数据的预测。在这里,我们引入一个新的学习设置,即“推断时间不贴标签的PFL(IT-PFL)”,在这个系统中,对一组客户进行个人化培训的系统需要随后应用到新的非贴标签客户身上。我们提出了解决这一问题的新办法,即UITFL-HN,它使用超网络为晚到晚端客户制作新的模型。具体地说,我们培训一个编码网络,以了解客户在未贴标签数据上的差异。客户的代表性被反馈到一个超级网络,为该客户创建个人化模型。我们评估了五个基准数据化客户,特别是FLAL的大型客户的升级方法,我们发现,我们从一般客户如何从GLAFLB到我们发现一个更精确的客户。