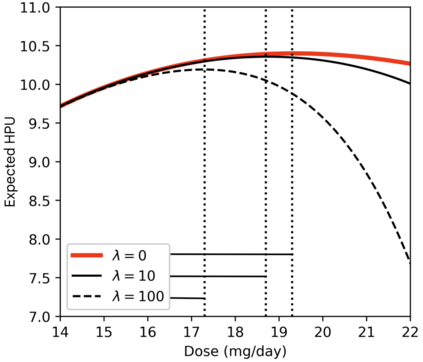

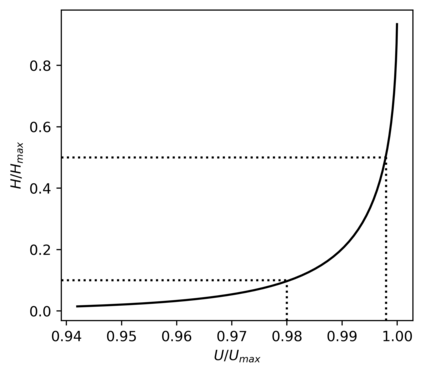

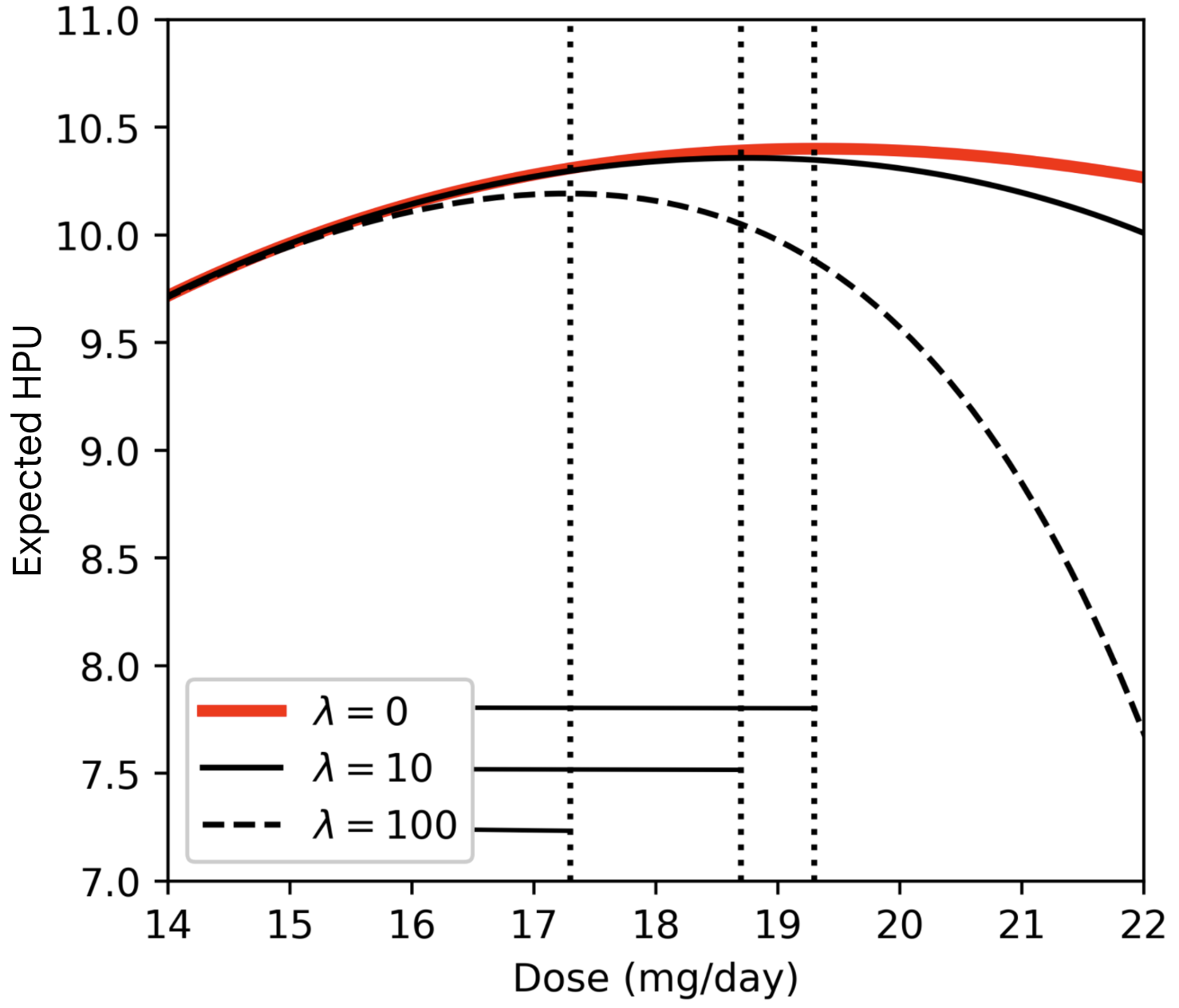

To act safely and ethically in the real world, agents must be able to reason about harm and avoid harmful actions. In this paper, we develop the first statistical definition of harm and a framework for incorporating harm into algorithmic decisions. We argue that harm is fundamentally a counterfactual quantity, and show that standard machine learning algorithms that cannot perform counterfactual reasoning are guaranteed to pursue harmful policies in certain environments. To resolve this, we derive a family of counterfactual objective functions that robustly mitigate harm. We demonstrate our approach with a statistical model for identifying optimal drug doses. While standard algorithms that select doses using causal treatment effects cause significant harm, our counterfactual algorithm identifies doses that are significantly less harmful without sacrificing efficacy. Our results show that counterfactual reasoning is a key ingredient for safe and ethical AI.
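
Why harm counts as counterfactual rather than interventional can be seen in a minimal sketch built on two hypothetical toy structural causal models. These are our own construction for illustration, not the paper's dose-finding model. In both, a shared noise variable `u` fixes each patient's outcome with and without treatment. The two models produce identical recovery rates under each action, and therefore identical average treatment effects, so an algorithm that only sees treatment effects cannot tell them apart. They nonetheless differ in how many patients are harmed. Here harm is assumed to be E[max(0, Y_default - Y_treated)], the probability that a patient who would have recovered untreated fails to recover when treated. This is a simplification chosen for the example, not necessarily the paper's exact definition.

```python
from fractions import Fraction

# Hypothetical toy SCMs: one exogenous noise variable u, uniform over 10
# values, determines a patient's outcome (1 = recovers) under BOTH the
# default action (no treatment) and the treatment. Sharing u across the two
# outcomes is what gives the counterfactual (joint) quantities meaning.
NOISE = range(10)
P_U = Fraction(1, len(NOISE))


def model_a(u):
    """Return (y_default, y_treated); treatment never hurts anyone."""
    return int(u < 5), int(u < 7)


def model_b(u):
    """Return (y_default, y_treated); treatment helps some, hurts others."""
    return int(u < 5), int(u >= 3)


def marginals(model):
    # Interventional view: P(Y_default = 1) and P(Y_treated = 1) separately.
    outcomes = [model(u) for u in NOISE]
    return (sum(P_U * y0 for y0, _ in outcomes),
            sum(P_U * y1 for _, y1 in outcomes))


def average_treatment_effect(model):
    # Interventional quantity: E[Y_treated] - E[Y_default].
    return sum(P_U * (y1 - y0) for y0, y1 in map(model, NOISE))


def expected_harm(model):
    # Counterfactual quantity: E[max(0, Y_default - Y_treated)], which needs
    # the joint distribution of both potential outcomes for the same patient.
    return sum(P_U * max(0, y0 - y1) for y0, y1 in map(model, NOISE))


for name, model in [("A", model_a), ("B", model_b)]:
    print(name, average_treatment_effect(model), expected_harm(model))
# A 1/5 0
# B 1/5 3/10
```

In `model_b` the treatment helps five in ten patients and hurts three in ten. Its net effect therefore matches `model_a`, where treatment helps two in ten and hurts no one. Only the joint distribution of each patient's outcomes under both actions reveals the difference.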
