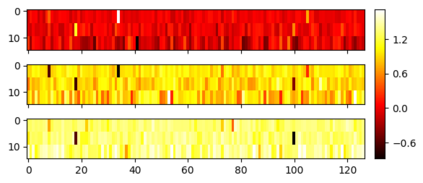

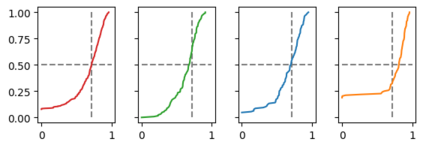

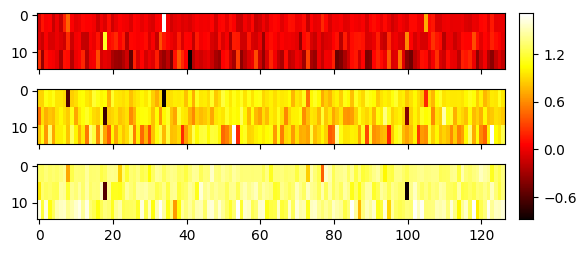

We introduce a framework for reasoning about the meaning captured by the neurons of a trained neural network. To discover this meaning, we train a second model (an observer model) to classify the state of the model it observes (an object model) with respect to attributes of the underlying dataset. We implement and evaluate observer models on a specific set of classification problems, use heat maps to visualize the relevance of each component of an object model to a linear observer model, and use these visualizations to gain insight into how neural networks identify salient characteristics of their inputs. We identify important properties that are captured decisively in trained neural networks; some of these properties are denoted by individual neurons. Finally, we observe that the label proportion of a property denoted by a neuron depends on the neuron's depth within the network; we analyze these dependencies and offer an interpretation of them.
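To make the observer/object setup concrete, here is a minimal sketch in NumPy. It rests on several assumptions not stated in the abstract: the object model is a fixed random ReLU MLP (a stand-in for a trained network), the dataset attribute is the hypothetical label `x[0] > 0`, the linear observer is a logistic regression fit by full-batch gradient descent on standardized activations, and "relevance" is taken to be the normalized absolute observer weight per neuron, grouped by layer so each row can be drawn as one band of a heat map. All function names are hypothetical.

```python
import numpy as np

def object_model_activations(x, weights):
    """Hypothetical object model: a fixed ReLU MLP.
    Returns the activations of every hidden layer, concatenated per input."""
    acts = []
    h = x
    for w in weights:
        h = np.maximum(0.0, h @ w)  # one ReLU layer
        acts.append(h)
    return np.concatenate(acts, axis=1)

def train_linear_observer(acts, labels, lr=0.1, steps=2000):
    """Assumed linear observer: logistic regression on standardized activations."""
    mu = acts.mean(axis=0)
    sd = acts.std(axis=0) + 1e-8  # guards against dead (constant) neurons
    z = (acts - mu) / sd          # standardize so weight magnitudes are comparable
    w = np.zeros(z.shape[1])
    b = 0.0
    for _ in range(steps):
        logits = z @ w + b
        p = 0.5 * (1.0 + np.tanh(0.5 * logits))  # numerically stable sigmoid
        grad = p - labels
        w -= lr * z.T @ grad / len(labels)
        b -= lr * grad.mean()
    acc = float(((z @ w + b > 0) == labels.astype(bool)).mean())
    return w, b, acc

def relevance_heat_map(w, layer_sizes):
    """Normalized |weight| per object-model neuron, split into one row per layer."""
    r = np.abs(w) / np.abs(w).sum()
    return np.split(r, np.cumsum(layer_sizes)[:-1])

rng = np.random.default_rng(0)
layer_sizes = [16, 16, 8]
dims = [4] + layer_sizes
weights = [rng.normal(size=(dims[i], dims[i + 1])) for i in range(len(layer_sizes))]

x = rng.normal(size=(1000, 4))
labels = (x[:, 0] > 0).astype(float)  # hypothetical dataset attribute

acts = object_model_activations(x, weights)
w, b, acc = train_linear_observer(acts, labels)
heat = relevance_heat_map(w, layer_sizes)

print(f"observer training accuracy: {acc:.2f}")
for depth, row in enumerate(heat, start=1):
    print(f"layer {depth}:", np.round(row, 3))
```

Standardizing the activations before fitting is a design choice made here so that weight magnitudes can be compared across neurons with very different activation scales; without it, a heat map of raw weights would partly reflect activation scale rather than relevance.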

翻译:我们引入了神经元在经过培训的神经网络中所捕捉到的含义的推理框架,我们通过培训第二个模型(称为观察模型)来提供发现含义的战略,将所观察到的模型(物体模型)相对于基本数据集属性的状态进行分类,我们在一套具体的分类问题背景下实施和评价观察模型,使用热图来直观物体模型组件在线性观察模型中的关联性,并利用这些直观图来获取神经网络识别其投入特征的方式的洞察力。我们确定了在经过培训的神经网络中决定性地捕捉到的重要属性;其中一些属性由个别神经元来表示。最后,我们观察到,神经元所标明的属性的标签比例取决于网络内神经的深度;我们分析这些依赖性,并提供对这些属性的解释。