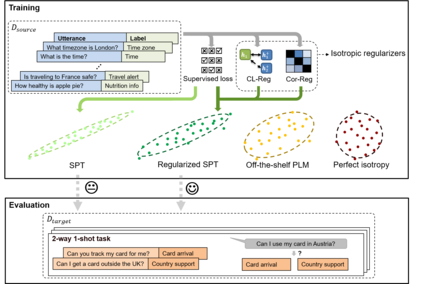

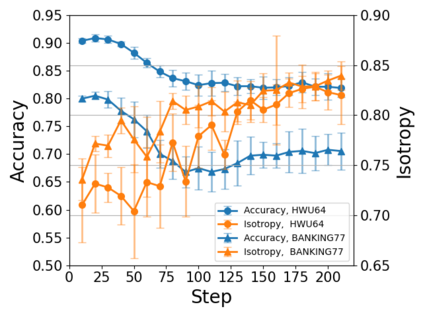

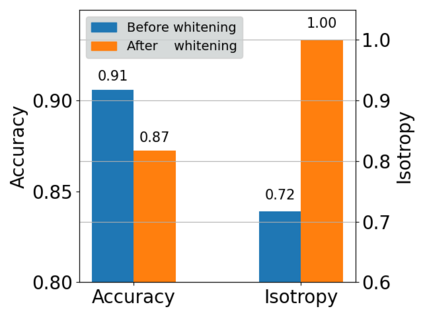

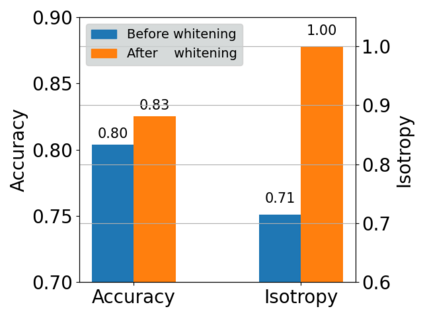

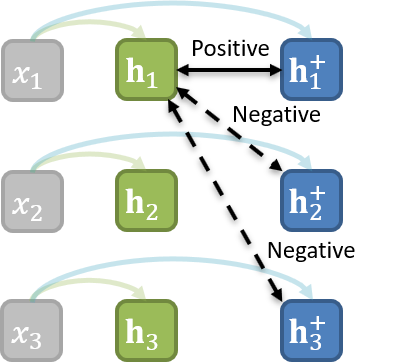

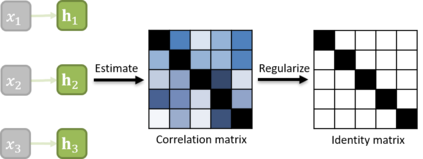

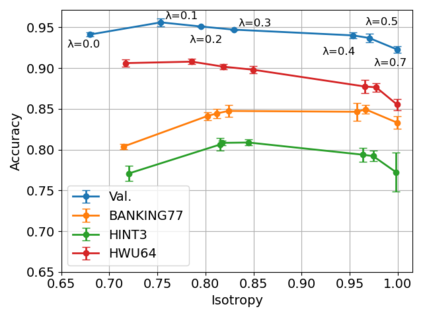

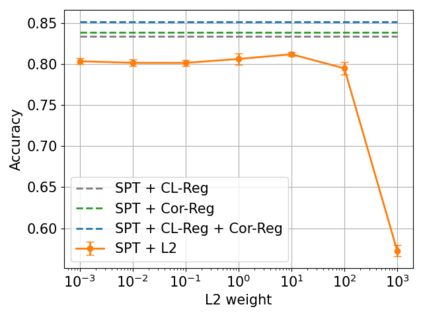

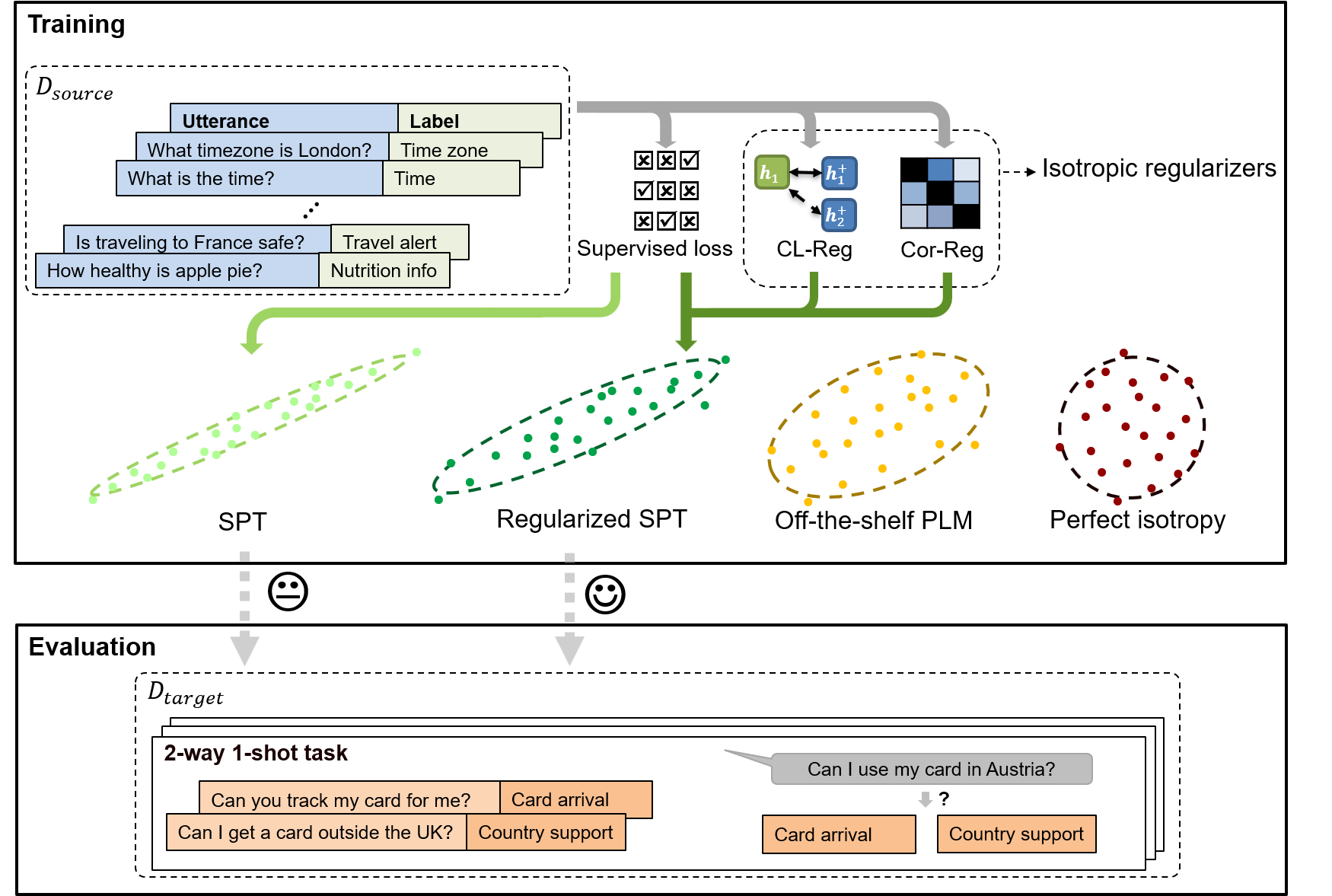

It is challenging to train a good intent classifier for a task-oriented dialogue system with only a few annotations. Recent studies have shown that fine-tuning pre-trained language models in a supervised manner on a small number of labeled utterances from public benchmarks is extremely helpful. However, we find that supervised pre-training yields an anisotropic feature space, which may suppress the expressive power of the semantic representations. Inspired by recent research on isotropization, we propose to improve supervised pre-training by regularizing the feature space towards isotropy. We propose two regularizers, one based on contrastive learning and one based on the correlation matrix, and demonstrate their effectiveness through extensive experiments. Our main finding is that regularizing supervised pre-training with isotropization is a promising way to further improve few-shot intent detection. The source code can be found at https://github.com/fanolabs/isoIntentBert-main.
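
The abstract names the two regularizers without giving their formulas. The pure-Python sketch below shows one plausible form of each, under assumptions that are ours rather than the paper's: the correlation-matrix regularizer (`cor_reg`, a hypothetical name) is taken to be the squared Frobenius distance between the correlation matrix of a batch of features and the identity, and the contrastive regularizer (`contrastive_reg`, also hypothetical) is taken to be a SimCSE-style InfoNCE loss over two views of each utterance. The toy batches stand in for encoder outputs and are not data from the paper.

```python
import math
import random


def correlation_matrix(features):
    """Pearson correlation between the d dimensions of n feature vectors."""
    n, d = len(features), len(features[0])
    means = [sum(f[j] for f in features) / n for j in range(d)]
    centered = [[f[j] - means[j] for j in range(d)] for f in features]
    # Per-dimension standard deviation; the epsilon guards against constant dims.
    stds = [math.sqrt(sum(c[j] ** 2 for c in centered) / n) + 1e-8 for j in range(d)]
    return [[sum(c[a] * c[b] for c in centered) / n / (stds[a] * stds[b])
             for b in range(d)] for a in range(d)]


def cor_reg(features):
    """Assumed correlation-matrix regularizer: squared Frobenius distance
    between the feature correlation matrix and the identity matrix."""
    corr = correlation_matrix(features)
    d = len(corr)
    return sum((corr[a][b] - (1.0 if a == b else 0.0)) ** 2
               for a in range(d) for b in range(d))


def cosine(u, v):
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / (norm + 1e-8)


def contrastive_reg(view_a, view_b, temperature=0.1):
    """Assumed SimCSE-style InfoNCE loss: view_a[i] and view_b[i] are two
    encodings of utterance i (e.g., two dropout passes); the other
    utterances in the batch act as negatives."""
    n = len(view_a)
    total = 0.0
    for i in range(n):
        logits = [cosine(view_a[i], view_b[j]) / temperature for j in range(n)]
        top = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = top + math.log(sum(math.exp(z - top) for z in logits))
        total += log_sum_exp - logits[i]
    return total / n


# Toy batches of 200 four-dimensional "utterance embeddings".
random.seed(0)
# Isotropic: independent dimensions, so the correlation matrix is close to I.
iso = [[random.gauss(0, 1) for _ in range(4)] for _ in range(200)]
# Anisotropic: one shared component dominates every dimension.
aniso = []
for _ in range(200):
    shared = random.gauss(0, 1)
    aniso.append([shared + 0.1 * random.gauss(0, 1) for _ in range(4)])

# The anisotropic batch receives a much larger correlation penalty.
print(round(cor_reg(iso), 3), round(cor_reg(aniso), 3))
# Matching views score a lower loss than mismatched (rotated) views.
batch = iso[:16]
print(contrastive_reg(batch, batch) < contrastive_reg(batch, batch[1:] + batch[:1]))  # prints True
```

In an actual training loop one would presumably compute such terms on encoder outputs with an autodiff framework and add them, weighted by a coefficient, to the supervised cross-entropy loss; plain Python is used here only to keep the sketch self-contained.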

翻译:最近的研究显示,对经过训练的语文模式进行微调,从公共基准中贴上少量标签的语句进行微调是极为有益的,然而,我们发现,受监督的训练前会产生一种厌食性特征空间,这可能会抑制语义表达的表达力。受最近在节制化方面的研究的启发,我们提议通过将特征空间正规化为无氮化来改进受监督的预培训。我们提议了两个基于对比性学习和相关性矩阵的规范化者,并通过广泛的实验来展示其有效性。我们的主要发现是,有希望对受监督的预培训进行常规化,采用无节制化,以进一步改进微粒意图检测的性能。源代码可以在https://github.com/fanolabs/isoIntentBert-main上找到。