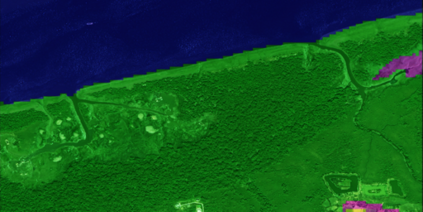

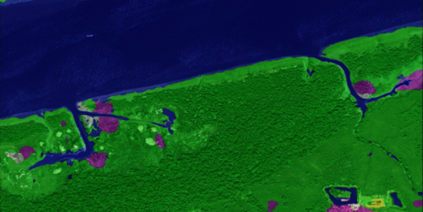

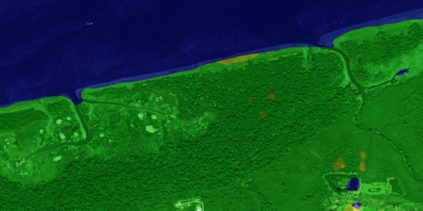

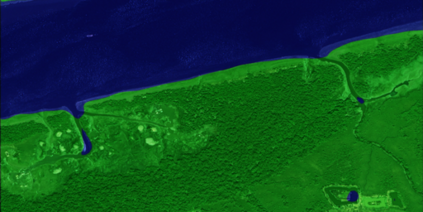

The objective of this work is to segment high-resolution images without exceeding GPU memory limits or losing fine details in the output segmentation map. The memory constraint means that we must either downsample the large image or divide it into local patches that are processed separately. However, the former approach loses fine details, while the latter can be ambiguous because each patch lacks the global picture. In this work, we present MagNet, a multi-scale framework that resolves local ambiguity by looking at the image at multiple magnification levels. MagNet has multiple processing stages, where each stage corresponds to a magnification level, and the output of one stage is fed into the next for coarse-to-fine information propagation. Each stage analyzes the image at a higher resolution than the previous stage, recovering details lost in the lossy downsampling step, and the segmentation output is progressively refined through the processing stages. Experiments on three high-resolution datasets of urban views, aerial scenes, and medical images show that MagNet consistently outperforms state-of-the-art methods by a significant margin.
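
The coarse-to-fine flow described above can be illustrated with a minimal NumPy sketch. It is not the MagNet implementation: `segment_patch` is a hypothetical thresholding stand-in for a segmentation network, `refine` is a placeholder fusion rule (a plain average) rather than the paper's refinement step, and the patch size and scale factors are arbitrary assumptions. The sketch only shows the control flow: each stage works on a higher-resolution view than the last, processes it in fixed-size patches so per-call memory stays bounded, and combines each patch with the upsampled output of the previous stage.

```python
import numpy as np

def downsample(img, factor):
    """Average-pool a 2-D array by an integer factor (lossy)."""
    h, w = img.shape
    return img.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))

def upsample(arr, factor):
    """Nearest-neighbour upsampling by an integer factor."""
    return np.repeat(np.repeat(arr, factor, axis=0), factor, axis=1)

def segment_patch(patch):
    """Hypothetical stand-in for a segmentation network: threshold at 0.5."""
    return (patch > 0.5).astype(np.float32)

def refine(coarse, local):
    """Placeholder fusion rule (plain average); an assumption, not the paper's module."""
    return 0.5 * coarse + 0.5 * local

def coarse_to_fine(image, patch=64, scales=(4, 2, 1)):
    """Run one stage per scale, from the most downsampled view to full resolution.

    Assumes image sides are divisible by every factor in `scales`, and that
    `scales` is decreasing with each factor dividing the previous one.
    Every call to segment_patch sees at most patch x patch pixels.
    """
    pred, prev_factor = None, None
    for factor in scales:
        view = downsample(image, factor) if factor > 1 else image
        # Bring the previous stage's output up to this stage's resolution.
        prior = None if pred is None else upsample(pred, prev_factor // factor)
        out = np.empty(view.shape, dtype=np.float32)
        for y in range(0, view.shape[0], patch):
            for x in range(0, view.shape[1], patch):
                local = segment_patch(view[y:y + patch, x:x + patch])
                if prior is not None:
                    local = refine(prior[y:y + patch, x:x + patch], local)
                out[y:y + patch, x:x + patch] = local
        pred, prev_factor = out, factor
    return pred
```

Calling `coarse_to_fine` on a 256×256 array with the default settings runs three stages on 64×64, 128×128, and 256×256 views and returns a full-resolution map; the first stage sees the whole downsampled image as a single patch, which is what gives later patches their global context.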
