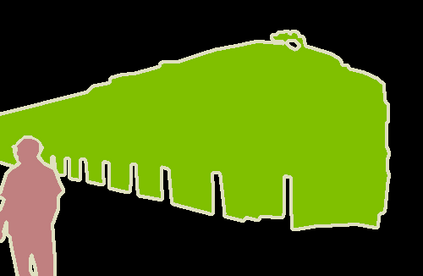

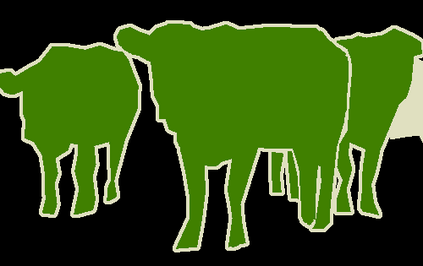

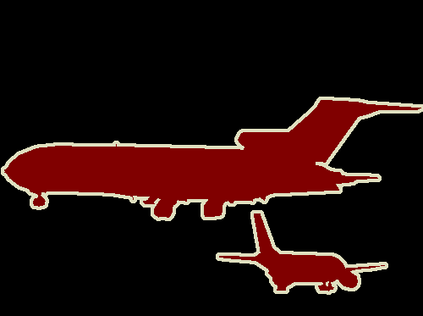

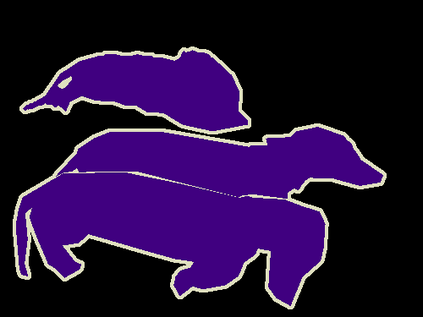

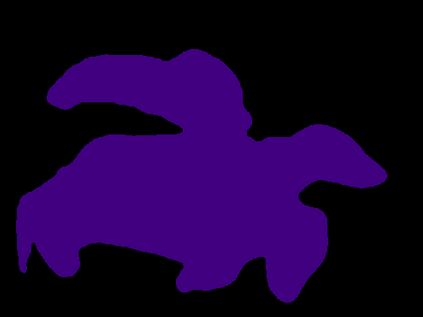

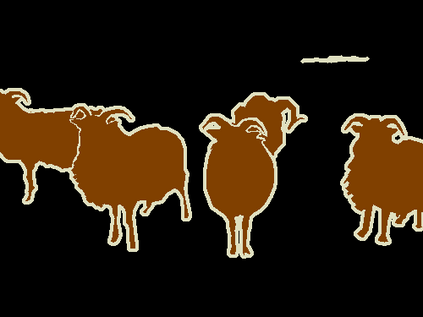

Acquiring sufficient ground-truth supervision to train deep visual models has long been a bottleneck, owing to the data-hungry nature of deep learning. The problem is exacerbated in structured prediction tasks such as semantic segmentation, which require pixel-level annotations. This work addresses weakly supervised semantic segmentation (WSSS), with the goal of bridging the gap between image-level annotations and pixel-level segmentation. We formulate WSSS as a novel group-wise learning task that explicitly models semantic dependencies within a group of images to estimate more reliable pseudo ground-truths, which can then be used to train more accurate segmentation models. In particular, we devise a graph neural network (GNN) for group-wise semantic mining, wherein input images are represented as graph nodes and the underlying relations between each pair of images are characterized by an efficient co-attention mechanism. Moreover, to prevent the model from attending excessively to only the semantics shared across images, we further propose a graph dropout layer, encouraging the model to learn more accurate and complete object responses. The whole network is end-to-end trainable, with iterative message passing propagating interaction cues across the images to progressively improve performance. Experiments on the popular PASCAL VOC 2012 and COCO benchmarks show that our model yields state-of-the-art performance. Our code is available at: https://github.com/Lixy1997/Group-WSSS.
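To make the group-wise mining idea more concrete, below is a minimal pure-Python sketch of co-attention message passing over a fully connected graph of images. It is an illustration under stated assumptions, not the authors' implementation (the repository linked above is the reference for that). Each node holds a feature map flattened to N positions × C channels. The message from image j to image i uses a bilinear affinity S = F_i W F_jᵀ, a row-wise softmax, and attention pooling of F_j. The function names `co_attention_message` and `message_passing`, the residual mean-aggregation update, and the `drop_p` parameter are all hypothetical choices. In particular, `drop_p` randomly drops whole incoming messages and is only a stand-in; it is not the graph dropout layer described in the abstract.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over a single list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def co_attention_message(f_i, f_j, w):
    """Hypothetical co-attention message from image j to image i.

    f_i: N x C features of image i, f_j: M x C features of image j,
    w: C x C bilinear weight. Returns an N x C message aligned with image i.
    """
    f_j_t = [list(col) for col in zip(*f_j)]    # C x M
    s = matmul(matmul(f_i, w), f_j_t)           # N x M affinity S = F_i W F_j^T
    attn = [softmax(row) for row in s]          # each position of i attends over j
    return matmul(attn, f_j)                    # attention-pooled features of j


def message_passing(feats, w, steps=2, drop_p=0.0, rng=None):
    """Synchronous message passing on a fully connected image graph.

    drop_p is an assumed edge-dropping stand-in, NOT the paper's graph dropout.
    """
    rng = rng or random.Random(0)
    h = [[row[:] for row in f] for f in feats]  # copy input node states
    for _ in range(steps):
        new_h = []
        for i, h_i in enumerate(h):
            msgs = [
                co_attention_message(h_i, h_j, w)
                for j, h_j in enumerate(h)
                if j != i and rng.random() >= drop_p
            ]
            if not msgs:                        # every incoming edge was dropped
                new_h.append(h_i)
                continue
            n_pos, n_ch = len(h_i), len(h_i[0])
            # Mean over incoming messages, then a residual update of the node state.
            agg = [[sum(m[n][c] for m in msgs) / len(msgs) for c in range(n_ch)]
                   for n in range(n_pos)]
            new_h.append([[h_i[n][c] + agg[n][c] for c in range(n_ch)]
                          for n in range(n_pos)])
        h = new_h
    return h


if __name__ == "__main__":
    # Three toy "images" with different numbers of positions and C = 2 channels.
    feats = [
        [[1.0, 0.0], [0.0, 1.0]],
        [[0.5, 0.5], [1.0, 0.0], [0.0, 0.0]],
        [[0.2, 0.8]],
    ]
    w = [[1.0, 0.0], [0.0, 1.0]]
    out = message_passing(feats, w, steps=2)
    print([len(h) for h in out])  # [2, 3, 1]
```

Because each image attends over another image's positions, the images in a group may differ in spatial size while every node keeps its own shape. A real model would replace the lists with batched tensor operations and learn `w` end to end.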

翻译:多年来,由于深层学习的数据-饥饿性质,为培养深层视觉模型而需要的足够的地面真相监督一直是一个瓶颈。在一些结构化的预测任务中,这种情况更加恶化,例如语义分割,这需要像素级的注释。这项工作涉及监管不力的语义分割(WSSS),目的是缩小图像级别说明和像素级分解之间的差距。我们把SSS设计成一个新颖的小组式学习任务,明确模拟一组图像中的语义依赖性,以估计更可靠的假的地面真相,可用于培训更准确的分解模型。特别是,我们设计了一个图形神经网络(GNNN),用于群度的语义开采,其中输入图像以图解节点表示,一对图像之间的内在关系以高效的共留机制为特征。此外,为了防止模型过于关注通用的语义结构,我们进一步提议了一个图形丢弃层,鼓励模型学习更准确和完整的对象分解模型,用于培训更准确的土壤分解模型。我们设计了一个图文系的图像,我们整个网络的图像将逐步升级到2012年版本。