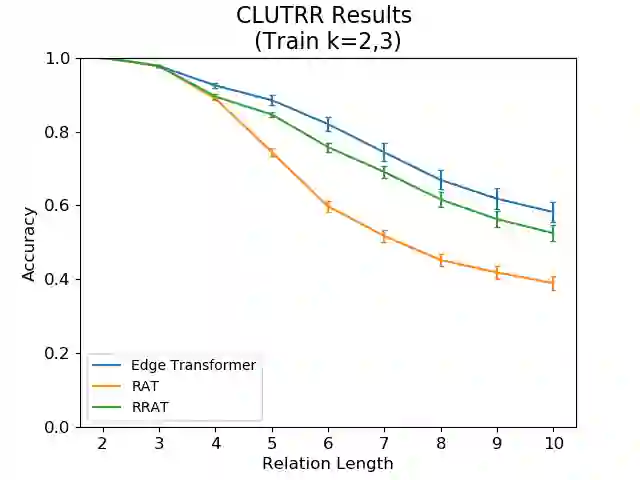

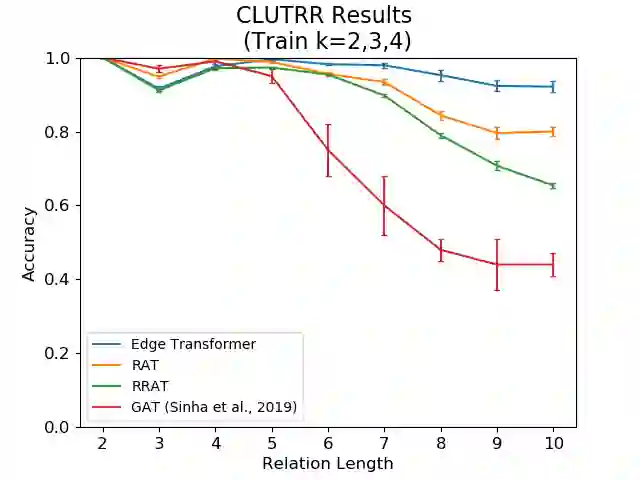

Recent research suggests that systematic generalization in natural language understanding remains a challenge for state-of-the-art neural models such as Transformers and Graph Neural Networks. To tackle this challenge, we propose Edge Transformer, a new model that draws inspiration from both Transformers and rule-based symbolic AI. The first key idea in Edge Transformer is to associate vector states with every edge, that is, with every pair of input nodes, rather than only with every node, as is done in the Transformer model. The second major innovation is a triangular attention mechanism that updates edge representations in a way inspired by unification in logic programming. We evaluate Edge Transformer on compositional generalization benchmarks in relational reasoning, semantic parsing, and dependency parsing. In all three settings, Edge Transformer outperforms Relation-aware, Universal, and classical Transformer baselines.
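
The abstract does not spell out the triangular update, so the following is only a rough illustration: a minimal single-head sketch in plain Python, assuming that the state of edge (i, j) is updated by attending over every intermediate node l, scoring a query from edge (i, l) against a key from edge (l, j), and mixing values from those two edges by an elementwise product (loosely analogous to chaining two relations R1(i, l) and R2(l, j) in a rule body). The names `triangular_attention`, `Wq`, `Wk`, `Wv1`, and `Wv2` are hypothetical, and the sketch omits anything a full layer would likely add, such as multiple heads, output projections, residual connections, normalization, and feed-forward sublayers.

```python
import math
import random


def matvec(W, x):
    """Multiply a d x d matrix (list of rows) by a length-d vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def triangular_attention(X, Wq, Wk, Wv1, Wv2):
    """One single-head triangular attention step over edge states (sketch).

    X[i][j] is the d-dimensional state of edge (i, j): n input nodes give
    n * n edge states, instead of n node states as in a standard Transformer.
    """
    n = len(X)
    d = len(X[0][0])

    def project(W):
        # Apply a (hypothetical) projection matrix W to every edge state.
        return [[matvec(W, X[i][j]) for j in range(n)] for i in range(n)]

    Q, K, V1, V2 = project(Wq), project(Wk), project(Wv1), project(Wv2)
    out = [[None] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            # Score each intermediate node l by matching edge (i, l)
            # against edge (l, j), scaled by sqrt(d) as in dot-product attention.
            scores = [
                sum(q * k for q, k in zip(Q[i][l], K[l][j])) / math.sqrt(d)
                for l in range(n)
            ]
            weights = softmax(scores)
            # New state of (i, j): attention-weighted sum over l of the
            # elementwise product of values from edges (i, l) and (l, j).
            out[i][j] = [
                sum(weights[l] * V1[i][l][c] * V2[l][j][c] for l in range(n))
                for c in range(d)
            ]
    return out


# Usage: random edge states for n = 3 nodes with d = 4 features per edge.
random.seed(0)
n, d = 3, 4


def rand_vector(size):
    return [random.gauss(0.0, 1.0) for _ in range(size)]


X = [[rand_vector(d) for _ in range(n)] for _ in range(n)]
Wq, Wk, Wv1, Wv2 = ([rand_vector(d) for _ in range(d)] for _ in range(4))
Y = triangular_attention(X, Wq, Wk, Wv1, Wv2)
print(len(Y), len(Y[0]), len(Y[0][0]))  # 3 3 4
```

Because each of the n² edges attends over n intermediate nodes, one such update costs O(n³) score computations, compared with O(n²) for node-level self-attention; this is the price of keeping a separate state for every pair of nodes.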
