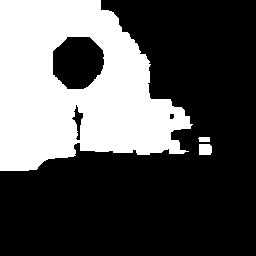

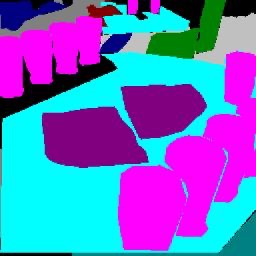

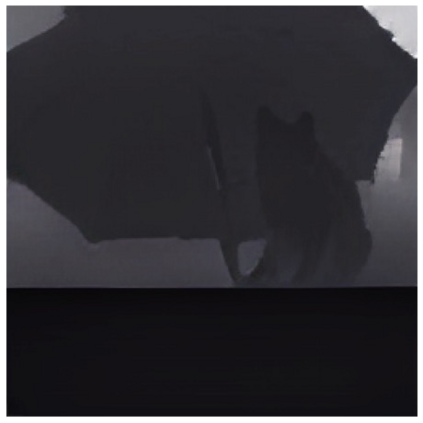

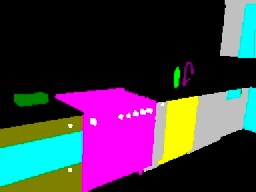

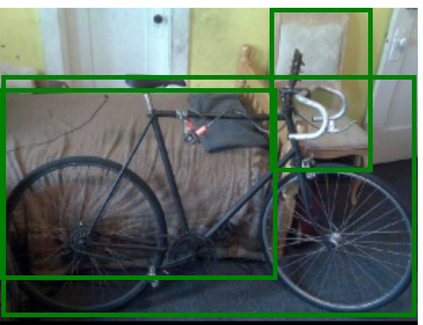

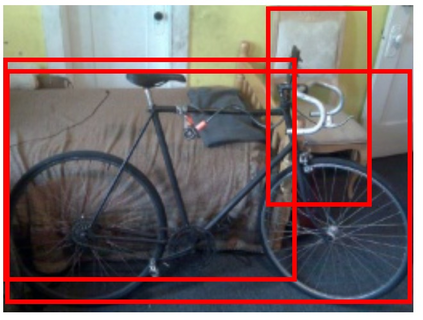

We propose Unified-IO, a model that performs a wide range of AI tasks, from classical computer vision tasks, including pose estimation, object detection, depth estimation, and image generation, through vision-and-language tasks such as region captioning and referring expression comprehension, to natural language processing tasks such as question answering and paraphrasing. Building a single unified model for so many tasks is challenging because the inputs and outputs of each task are heterogeneous: RGB images, per-pixel maps, binary masks, bounding boxes, and language. We achieve this unification by homogenizing every supported input and output into a sequence of discrete vocabulary tokens. This common representation across all tasks lets us train a single transformer-based architecture jointly on over 80 diverse datasets in the vision and language fields. Unified-IO is the first model capable of performing all 7 tasks on the GRIT benchmark, and it produces strong results across 16 diverse benchmarks, including NYUv2-Depth, ImageNet, VQA2.0, OK-VQA, Swig, VizWizGround, BoolQ, and SciTail, with no task- or benchmark-specific fine-tuning. Demos for Unified-IO are available at https://unified-io.allenai.org.
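
To make the idea of a shared discrete vocabulary more concrete, the following is a minimal sketch of how one structured output, a bounding box, might be turned into tokens that sit alongside ordinary text tokens. The abstract does not specify the tokenization scheme, so everything here is an illustrative assumption rather than the authors' implementation: the bin count `NUM_BINS`, the `<loc_i>` token format, the whitespace text tokenizer, and the function names `box_to_tokens`, `tokens_to_box`, and `encode_example` are all hypothetical.

```python
from typing import List, Sequence, Tuple

# Assumption: number of quantization bins per coordinate (illustrative value).
NUM_BINS = 1000


def box_to_tokens(box: Sequence[float],
                  image_size: Tuple[int, int],
                  num_bins: int = NUM_BINS) -> List[str]:
    """Quantize an (x1, y1, x2, y2) pixel box into four discrete location tokens."""
    x1, y1, x2, y2 = box
    width, height = image_size
    tokens = []
    for value, extent in ((x1, width), (y1, height), (x2, width), (y2, height)):
        # Normalize to [0, 1], then map to an integer bin index in [0, num_bins - 1].
        frac = min(max(value / extent, 0.0), 1.0)
        idx = min(int(frac * num_bins), num_bins - 1)
        tokens.append(f"<loc_{idx}>")  # hypothetical token format
    return tokens


def tokens_to_box(tokens: Sequence[str],
                  image_size: Tuple[int, int],
                  num_bins: int = NUM_BINS) -> Tuple[float, ...]:
    """Map four location tokens back to approximate pixel coordinates (bin centers)."""
    width, height = image_size
    extents = (width, height, width, height)
    coords = []
    for token, extent in zip(tokens, extents):
        idx = int(token[len("<loc_"):-1])
        coords.append((idx + 0.5) / num_bins * extent)
    return tuple(coords)


def encode_example(prompt: str,
                   box: Sequence[float],
                   image_size: Tuple[int, int]) -> List[str]:
    """Build one flat token sequence: text tokens followed by location tokens."""
    # Assumption: a whitespace tokenizer stands in for a real subword tokenizer.
    return prompt.lower().split() + box_to_tokens(box, image_size)
```

Calling `encode_example("Locate the dog", (160, 120, 320, 240), (640, 480))` yields a single list mixing word tokens and location tokens, which is the property that lets one sequence model consume or emit both. Decoding with `tokens_to_box` recovers each coordinate to within one bin width, so the choice of bin count trades vocabulary size against localization precision.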
