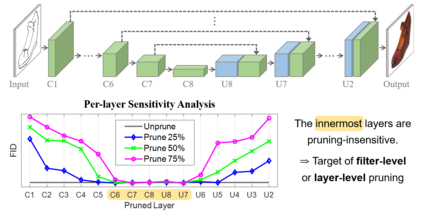

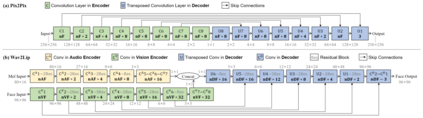

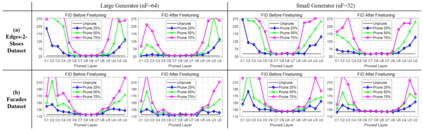

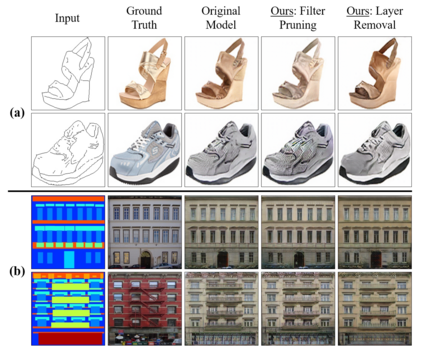

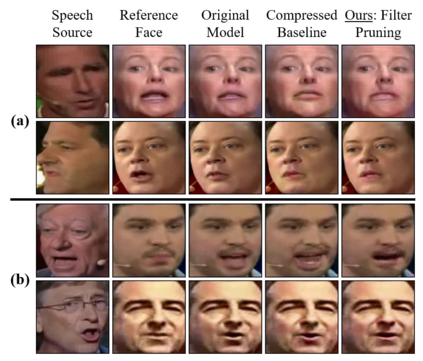

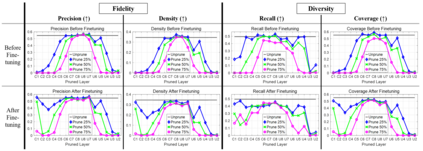

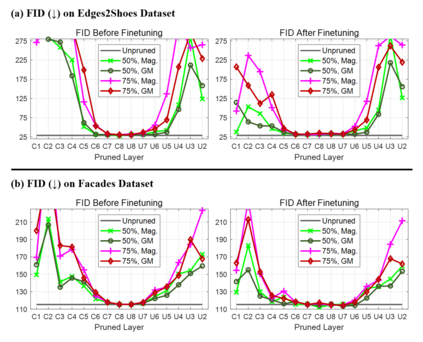

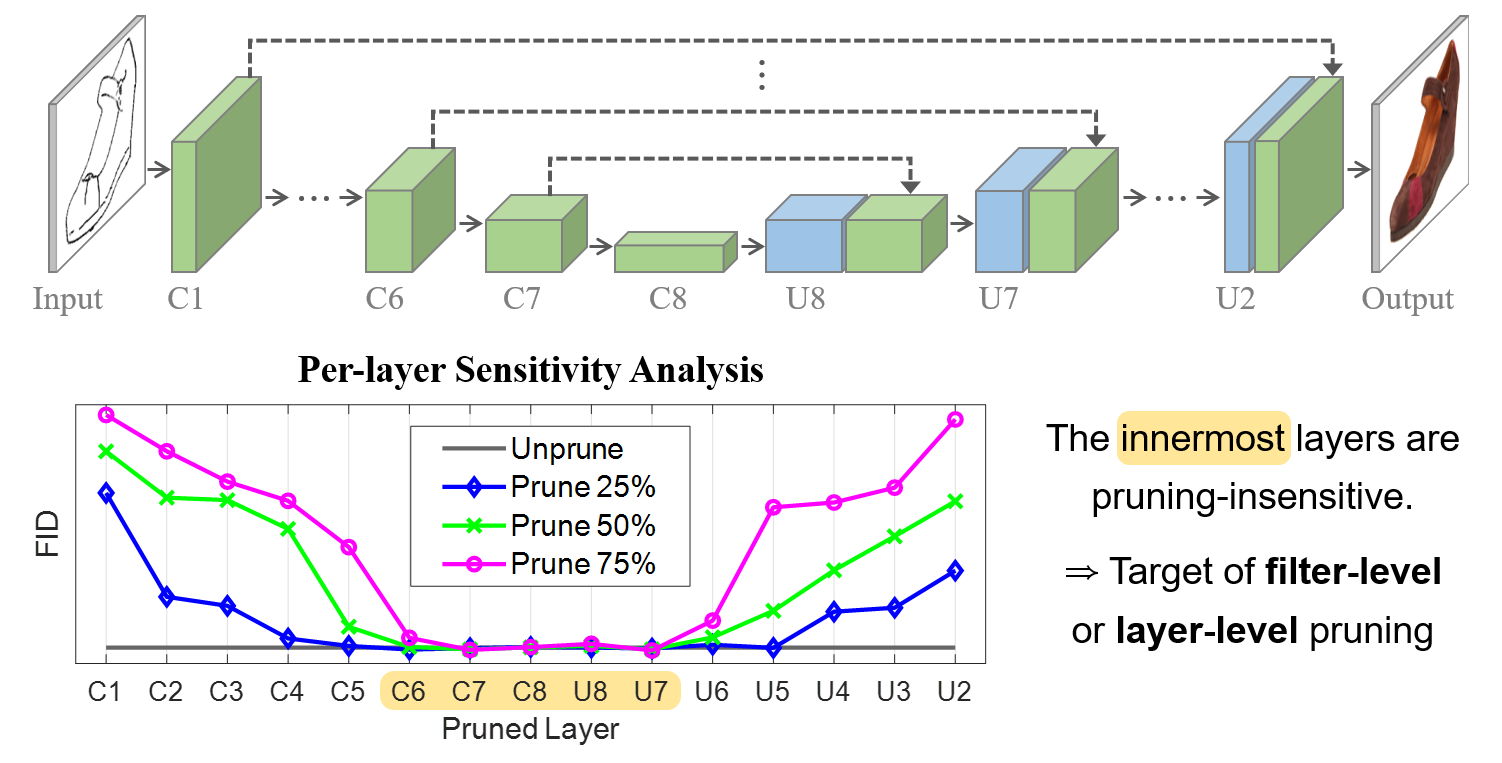

Pruning effectively compresses overparameterized models. Although pruning methods have succeeded for discriminative models, applying them to generative models has received relatively little attention. This study applies structured pruning to the U-Net generators of conditional GANs. A per-layer sensitivity analysis confirms that the innermost layers near the bottleneck contain many unnecessary filters, which can be substantially pruned. Based on this observation, we either prune these filters from multiple inner layers or propose alternative architectures that eliminate those layers entirely. We evaluate our approach with Pix2Pix for image-to-image translation and Wav2Lip for speech-driven talking face generation. Our method outperforms global pruning baselines, demonstrating the importance of choosing where to prune in U-Net generators.
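
To make the idea of pruning only the inner layers concrete, here is a minimal sketch. It is an illustration under stated assumptions, not the procedure used in this study: the abstract does not name a filter-ranking criterion, so the sketch assumes L1-norm magnitude ranking, and the names `l1_norm`, `prune_layer`, and `inner_layer_keep_ratios` are hypothetical. Encoder layers are indexed from outermost (0) to innermost, and skip connections and decoder layers are ignored for brevity.

```python
from typing import List, Sequence

Filter = Sequence[float]  # one convolution filter, flattened to its weights


def l1_norm(filt: Filter) -> float:
    """Sum of absolute weights; an assumed importance score for a filter."""
    return sum(abs(w) for w in filt)


def prune_layer(filters: List[Filter], keep_ratio: float) -> List[int]:
    """Return the sorted indices of the filters to keep in one layer."""
    n_keep = max(1, int(len(filters) * keep_ratio))  # always keep at least one
    ranked = sorted(
        range(len(filters)),
        key=lambda i: l1_norm(filters[i]),
        reverse=True,  # largest L1 norm first
    )
    return sorted(ranked[:n_keep])


def inner_layer_keep_ratios(
    n_layers: int, n_inner: int, inner_keep: float
) -> List[float]:
    """Keep every filter in outer layers; prune only the n_inner innermost."""
    return [
        inner_keep if i >= n_layers - n_inner else 1.0
        for i in range(n_layers)
    ]


# Toy example: a 4-layer encoder in which only the 2 innermost layers are pruned.
ratios = inner_layer_keep_ratios(n_layers=4, n_inner=2, inner_keep=0.5)
toy_layer = [[0.1, -0.1], [2.0, 1.0], [0.0, 0.0], [-3.0, 0.5]]
kept_per_layer = [prune_layer(toy_layer, r) for r in ratios]
```

By contrast, a global pruning baseline would rank filters from all layers together against a single threshold; the sketch instead fixes where pruning happens and ranks filters only within each selected layer.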
