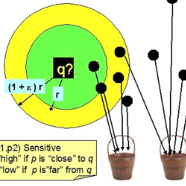

Large machine learning models have achieved unprecedented performance on a wide range of tasks and have become the go-to technique. However, deploying these compute- and memory-hungry models in resource-constrained environments poses new challenges. In this work, we propose Representer Sketch, a mathematically provable, concise set of count arrays that approximates the inference procedure using simple hashing computations and aggregations. Representer Sketch builds on the well-known Representer Theorem from the kernel literature (hence the name) and provides a generic, fundamental alternative for efficient inference that goes beyond popular approaches such as quantization, iterative pruning, and knowledge distillation. A neural network function is transformed into its weighted kernel density representation, which our sketching algorithm can estimate very efficiently. Empirically, we show that Representer Sketch achieves up to a 114x reduction in storage and a 59x reduction in computational complexity without any drop in accuracy.
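The core idea, estimating a weighted kernel density f(q) = Σᵢ αᵢ k(q, xᵢ) with hashing into count arrays, can be illustrated with a minimal sketch. This is not the authors' construction: it assumes SimHash (signed random projections) as the hash family, so the implied kernel is the collision probability k(x, y) = (1 − θ(x, y)/π)^K for K hash bits, and it follows a generic repeated-count-array scheme. Each insertion adds αᵢ to the bucket xᵢ hashes to in every row, and a query averages the buckets q lands in, which is an unbiased estimate of f(q). The class name, parameters, support points, and weights below are hypothetical placeholders, not values derived from a trained network.

```python
import math
import random


class SimHashKDESketch:
    """Hypothetical count-array sketch estimating f(q) = sum_i alpha_i * k(q, x_i),
    where k is the SimHash collision probability (1 - theta/pi) ** num_bits."""

    def __init__(self, dim, num_bits=4, num_rows=200, seed=0):
        rng = random.Random(seed)
        # One independent set of num_bits random hyperplanes per row.
        self.planes = [
            [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_bits)]
            for _ in range(num_rows)
        ]
        # One count array with 2**num_bits buckets per row.
        self.counts = [[0.0] * (2 ** num_bits) for _ in range(num_rows)]

    def _bucket(self, row, x):
        # Concatenate the sign bits of x against this row's hyperplanes.
        index = 0
        for w in self.planes[row]:
            dot = sum(wi * xi for wi, xi in zip(w, x))
            index = (index << 1) | (1 if dot >= 0 else 0)
        return index

    def add(self, x, alpha):
        # Add the weight alpha to the bucket x hashes to in every row.
        for row in range(len(self.counts)):
            self.counts[row][self._bucket(row, x)] += alpha

    def query(self, q):
        # Average q's bucket across rows: an unbiased estimate of f(q).
        total = sum(self.counts[row][self._bucket(row, q)]
                    for row in range(len(self.counts)))
        return total / len(self.counts)


def simhash_kernel(x, y, num_bits):
    # Exact collision probability of num_bits independent SimHash bits.
    dot = sum(a * b for a, b in zip(x, y))
    norm = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in y))
    cos = max(-1.0, min(1.0, dot / norm))
    return (1.0 - math.acos(cos) / math.pi) ** num_bits


# Usage with random placeholder support points x_i and weights alpha_i.
rng = random.Random(1)
dim, num_bits = 8, 4
points = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(50)]
alphas = [rng.uniform(-1.0, 1.0) for _ in range(50)]
sketch = SimHashKDESketch(dim, num_bits=num_bits, num_rows=1000, seed=7)
for x, a in zip(points, alphas):
    sketch.add(x, a)
q = [rng.gauss(0.0, 1.0) for _ in range(dim)]
exact = sum(a * simhash_kernel(q, x, num_bits) for x, a in zip(points, alphas))
print("exact:", exact, "sketch estimate:", sketch.query(q))
```

Note that the stored state is only the count arrays (rows × 2^K floats) plus the hash parameters, independent of how many weighted points were inserted, and a query costs one hash evaluation and lookup per row. Increasing `num_rows` reduces the variance of the estimate.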
