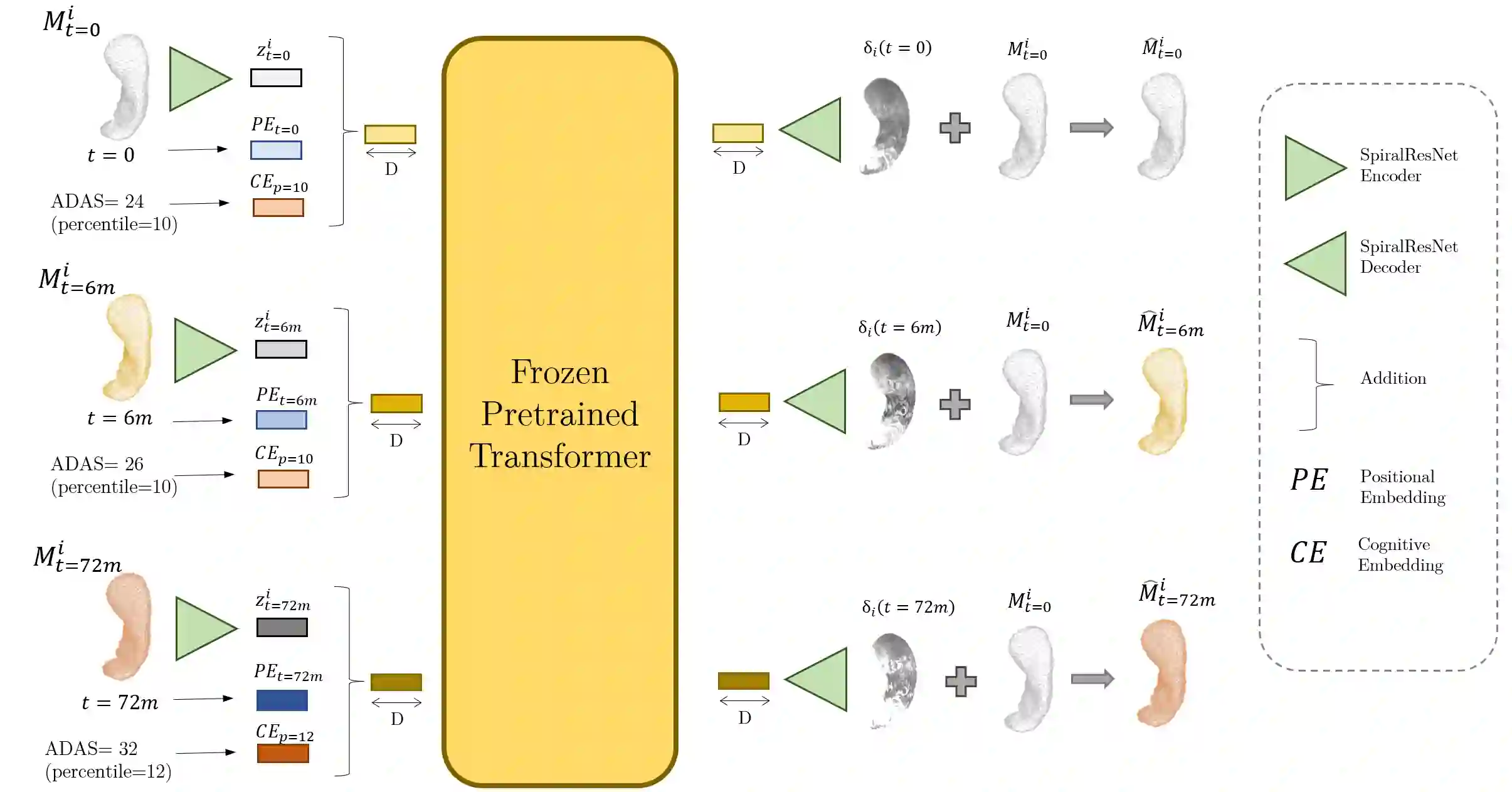

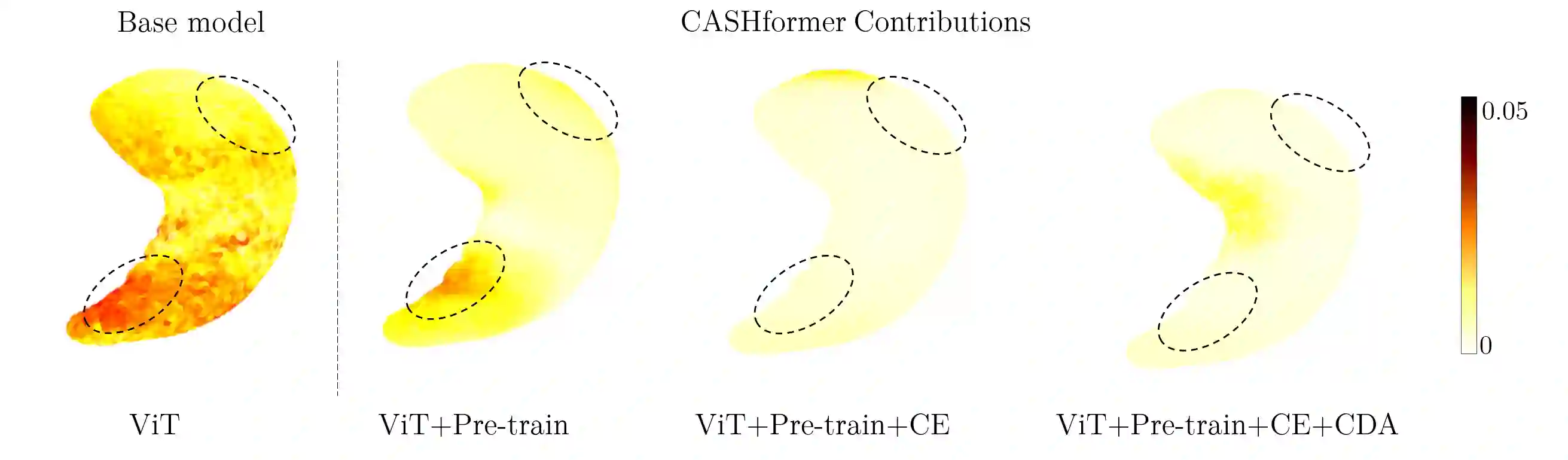

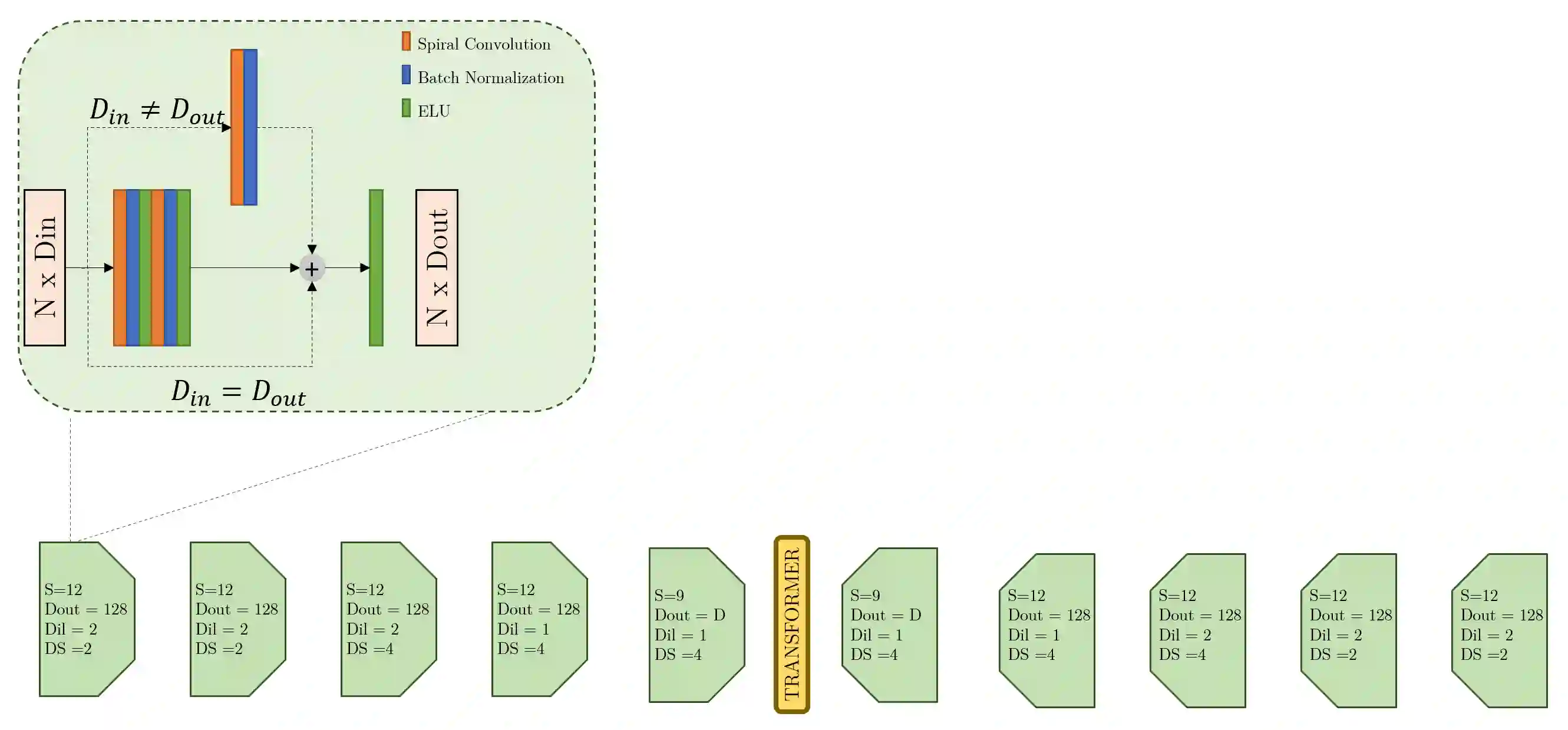

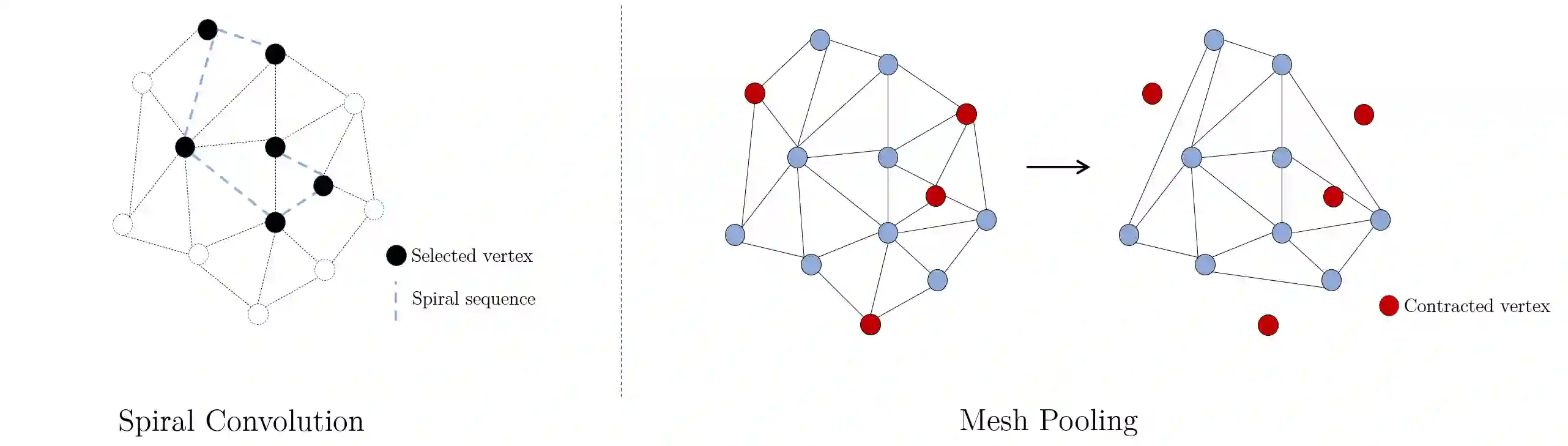

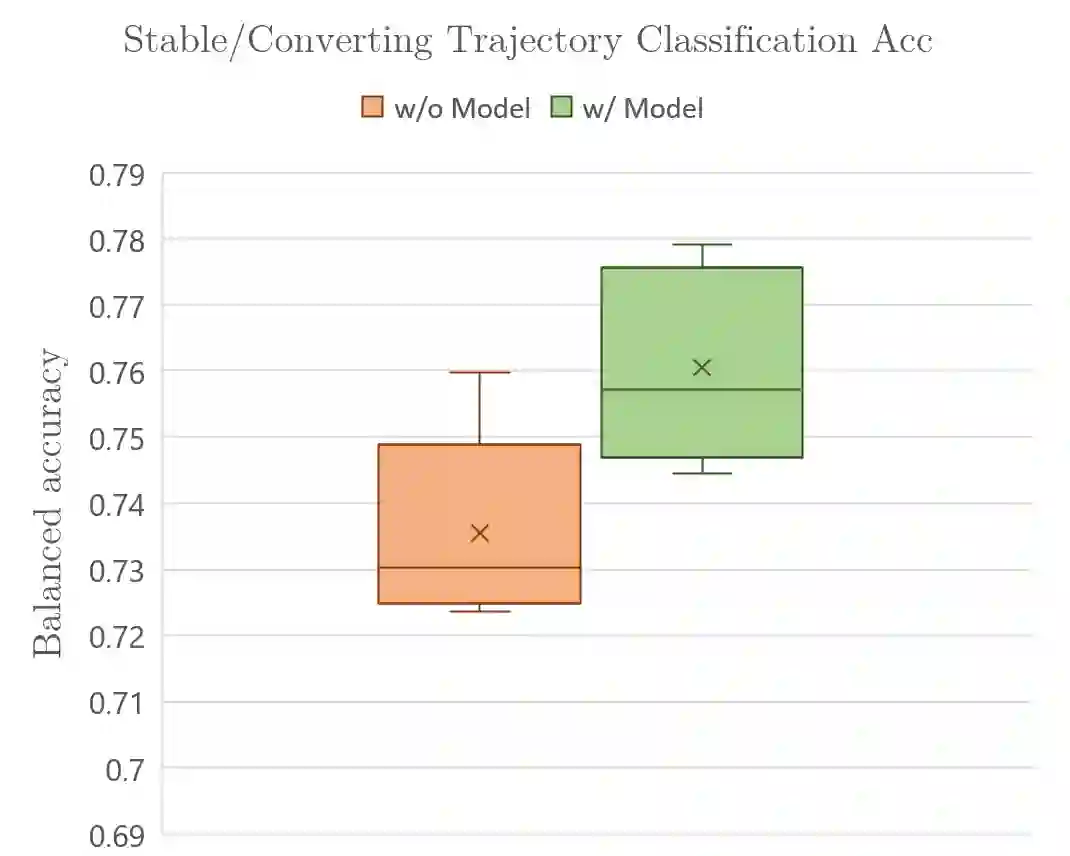

Modeling temporal changes in subcortical structures is crucial for a better understanding of the progression of Alzheimer's disease (AD). Because they adapt flexibly to heterogeneous sequence lengths, mesh-based transformer architectures have previously been proposed for predicting hippocampus deformations over time. However, a main limitation of transformers is their large number of trainable parameters, which makes them very challenging to apply to small datasets. In addition, current methods do not include relevant non-image information that can help identify AD-related patterns in the progression. To this end, we introduce CASHformer, a transformer-based framework for modeling longitudinal shape trajectories in AD. CASHformer builds on the idea of pre-trained transformers as universal compute engines, which generalize across a wide range of tasks when most layers are frozen during fine-tuning. This reduces the number of trainable parameters by over 90% with respect to the original model and therefore enables the application of large models to small datasets without overfitting. In addition, CASHformer models cognitive decline to reveal AD atrophy patterns in the temporal sequence. Our results show that CASHformer reduces the reconstruction error by 73% compared to previously proposed methods. Moreover, the accuracy of detecting patients progressing to AD increases by 3% when missing longitudinal shape data are imputed.
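To give a feel for why freezing most layers shrinks the trainable parameter count so sharply, the following is a minimal sketch that counts parameters in a generic transformer encoder. It is an illustration under stated assumptions, not CASHformer's actual configuration: the sizes in `EncoderConfig` are hypothetical, and the choice to keep only layer normalization and the input and output projections trainable follows the general frozen pre-trained transformer idea, since the abstract does not state which layers are frozen. Positional embeddings are omitted for brevity.

```python
from dataclasses import dataclass


@dataclass
class EncoderConfig:
    # Hypothetical sizes chosen for illustration; not CASHformer's real configuration.
    d_model: int = 768
    d_ff: int = 3072
    n_layers: int = 12
    d_in: int = 64    # assumed input feature size per token
    d_out: int = 64   # assumed output feature size per token


def linear_params(n_in: int, n_out: int) -> int:
    """Weights plus biases of a dense layer."""
    return n_in * n_out + n_out


def count_params(cfg: EncoderConfig) -> dict:
    """Split the parameter count into frozen and trainable parts."""
    d = cfg.d_model
    # Query, key, value and output projections of self-attention.
    attention = 4 * linear_params(d, d)
    # Two dense layers of the position-wise feed-forward block.
    feed_forward = linear_params(d, cfg.d_ff) + linear_params(cfg.d_ff, d)
    # Two layer norms per encoder layer, each with a scale and a shift vector.
    layer_norm = 2 * (2 * d)

    # Assumption: attention and feed-forward weights stay frozen.
    frozen = cfg.n_layers * (attention + feed_forward)
    # Assumption: layer norms and the input/output projections are fine-tuned.
    trainable = (
        cfg.n_layers * layer_norm
        + linear_params(cfg.d_in, d)
        + linear_params(d, cfg.d_out)
    )
    return {"frozen": frozen, "trainable": trainable, "total": frozen + trainable}


def trainable_reduction(cfg: EncoderConfig) -> float:
    """Fraction by which freezing reduces the trainable parameter count."""
    counts = count_params(cfg)
    return 1.0 - counts["trainable"] / counts["total"]


if __name__ == "__main__":
    cfg = EncoderConfig()
    print(count_params(cfg))
    print(f"reduction in trainable parameters: {trainable_reduction(cfg):.1%}")
```

With these assumed sizes, the attention and feed-forward weights dominate the total, so the reduction comfortably exceeds 90%. The exact value depends entirely on the hypothetical configuration and should not be read as the figure reported for CASHformer.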
