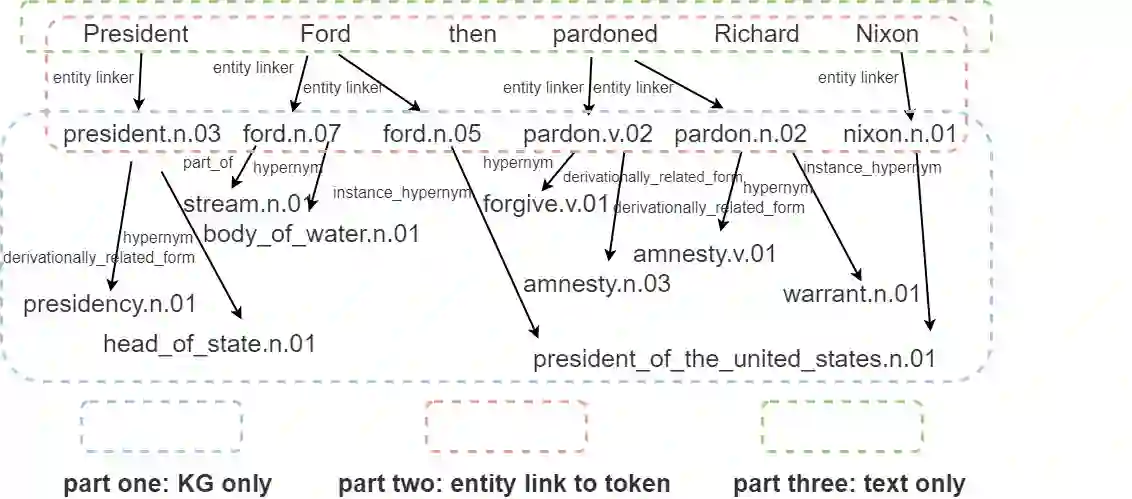

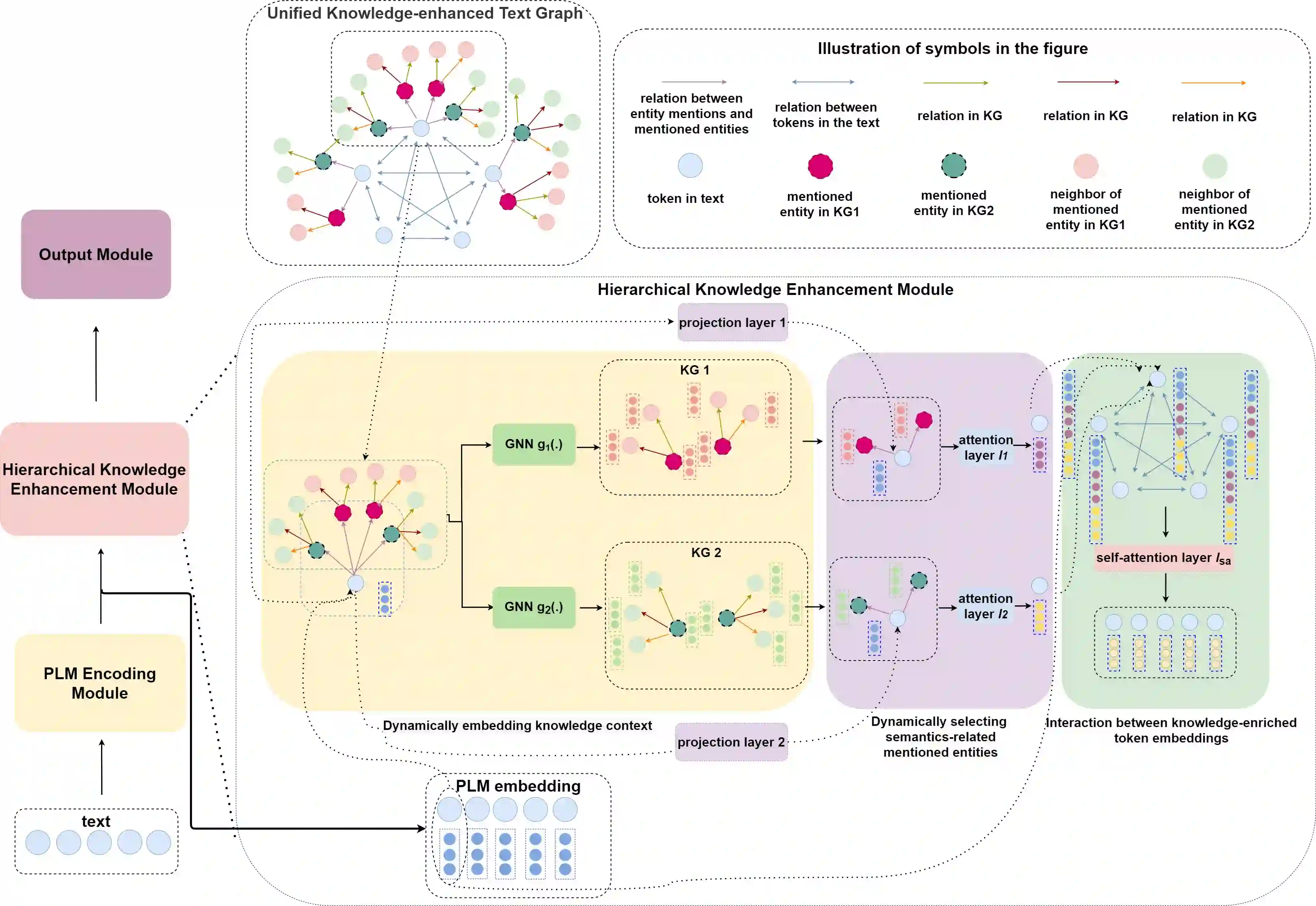

Incorporating factual knowledge into pre-trained language models (PLMs) such as BERT is an emerging trend in recent NLP studies. However, most existing methods combine an external knowledge-integration module with a modified pre-training loss and repeat the pre-training process on a large-scale corpus. Re-pretraining these models is usually resource-consuming and hard to adapt to another domain with a different knowledge graph (KG). Moreover, these methods either cannot embed knowledge context dynamically according to the textual context or struggle with knowledge ambiguity. In this paper, we propose a novel knowledge-aware language model framework based on fine-tuning, which equips a PLM with a unified knowledge-enhanced text graph that contains both the text and multi-relational sub-graphs extracted from the KG. We design a hierarchical relational-graph-based message passing mechanism that allows the representations of the injected KG and the text to mutually update each other, and that can dynamically select among ambiguous entities whose mentions share the same text. Our empirical results show that our model can efficiently incorporate world knowledge from KGs into existing language models such as BERT, and achieves significant improvements on the machine reading comprehension (MRC) task compared with other knowledge-enhanced models.
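The abstract does not give the update equations, so the following is only a rough illustration of the two ideas it names. It assumes a standard single-layer R-GCN-style update (per-relation weight matrices, neighbours normalised per relation) over a toy graph that mixes a token node with KG entity nodes, so that text and entity representations update each other, plus a softmax over candidate entities that share one surface form. The helper names (`rgcn_layer`, `select_candidates`), node ids, relation names, and vectors are all hypothetical; this is a flat sketch, not the paper's hierarchical mechanism.

```python
import math
from collections import defaultdict

Vector = list[float]
Matrix = list[list[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def add(u: Vector, v: Vector) -> Vector:
    return [a + b for a, b in zip(u, v)]


def scale(v: Vector, s: float) -> Vector:
    return [a * s for a in v]


def relu(v: Vector) -> Vector:
    return [max(0.0, a) for a in v]


def rgcn_layer(h: dict[str, Vector], edges: list[tuple[str, str, str]],
               w_rel: dict[str, Matrix], w_self: Matrix) -> dict[str, Vector]:
    """One R-GCN-style layer (assumed, not the paper's):
    h_i' = ReLU(W_self h_i + sum_r sum_{j in N_r(i)} W_r h_j / |N_r(i)|)."""
    # Group source nodes by (target, relation) so each relation is normalised separately.
    incoming: dict[tuple[str, str], list[str]] = defaultdict(list)
    for src, rel, dst in edges:
        incoming[(dst, rel)].append(src)
    out = {}
    for node, vec in h.items():
        acc = matvec(w_self, vec)  # self-loop term
        for (dst, rel), srcs in incoming.items():
            if dst != node:
                continue
            for src in srcs:
                acc = add(acc, scale(matvec(w_rel[rel], h[src]), 1.0 / len(srcs)))
        out[node] = relu(acc)
    return out


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def select_candidates(context: Vector, candidates: dict[str, Vector]) -> dict[str, float]:
    """Weight entities sharing one mention text by dot-product similarity to the text context."""
    names = list(candidates)
    scores = [sum(a * b for a, b in zip(context, candidates[n])) for n in names]
    return dict(zip(names, softmax(scores)))


# Hypothetical toy example: the mention "apple" appears in a technology context.
weights = select_candidates([1.0, 0.0], {"Apple_Inc": [2.0, 0.0], "Apple_fruit": [0.0, 2.0]})

identity = [[1.0, 0.0], [0.0, 1.0]]
h = {"tok:apple": [1.0, 0.0], "ent:Apple_Inc": [0.0, 1.0], "ent:Steve_Jobs": [0.5, 0.5]}
edges = [
    ("ent:Apple_Inc", "entity_to_text", "tok:apple"),  # KG -> text
    ("tok:apple", "text_to_entity", "ent:Apple_Inc"),  # text -> KG
    ("ent:Steve_Jobs", "founded", "ent:Apple_Inc"),    # KG-internal relation
]
w_rel = {"entity_to_text": identity, "text_to_entity": identity, "founded": identity}
out = rgcn_layer(h, edges, w_rel, identity)
```

Identity weights are used only so the toy outputs are easy to check by hand; in a trained model each relation would have its own learned matrix, and the selection weights could gate how much each candidate entity contributes to the token node.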

翻译:将事实知识纳入培训前语言模型(PLM),如BERT等实际知识纳入培训前语言模型(PLM)是最近国家学习计划研究中出现的一个新趋势,然而,大多数现有方法将外部知识整合模块与经修改的培训前损失和重新实施大规模培训前程序相结合。对这些模型进行再培训通常耗费资源,而且难以适应使用不同知识图表(KG)的另一个领域。此外,这些工程要么无法根据文字背景动态地将知识背景纳入知识背景,要么无法与知识模糊问题进行斗争。在本文中,我们提出了一个以微调程序为基础的新的有知识的语言模型框架,为PLM提供统一的知识强化文本图,该图包含从KG提取的文本和多关系分图。我们设计了一个基于等级关系绘图的信息传递机制,使注入的KG和文本能够相互更新,并且能够动态地选择共享同一文本的模糊实体。我们的经验结果显示,我们的模型能够有效地将KGs的世界知识纳入现有的语言模型中,如BERRTC模型,并实现对机器任务的重大改进。