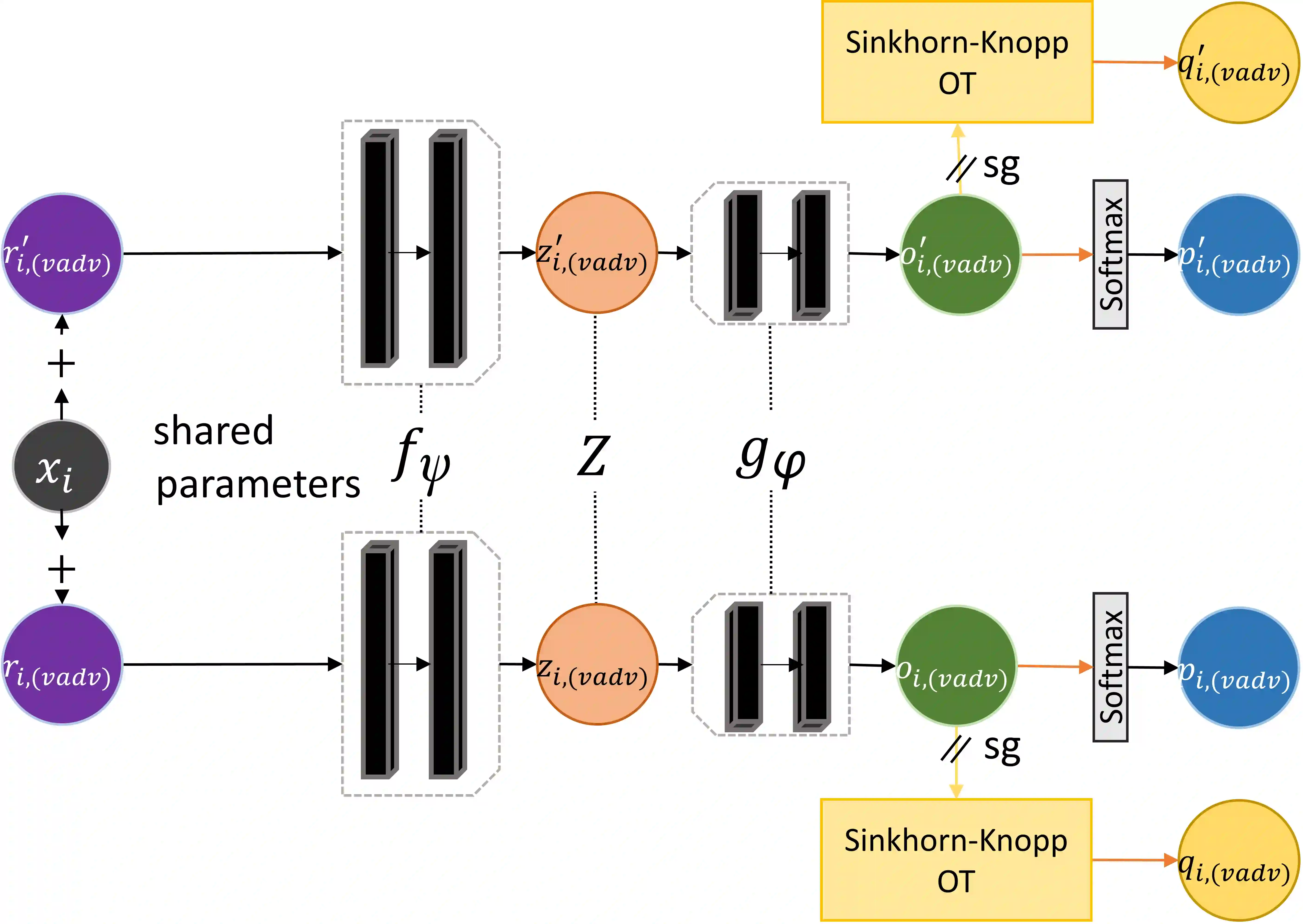

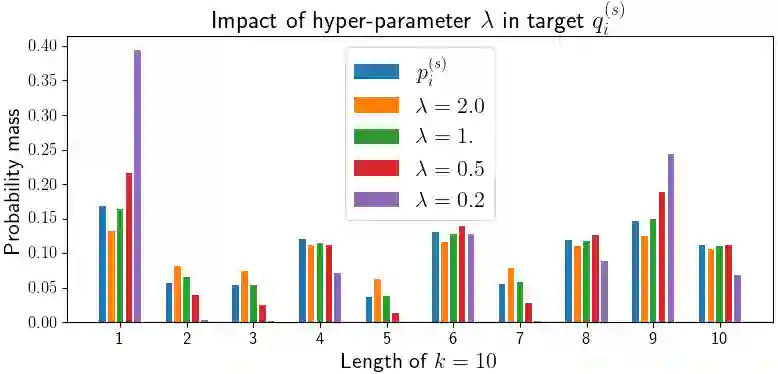

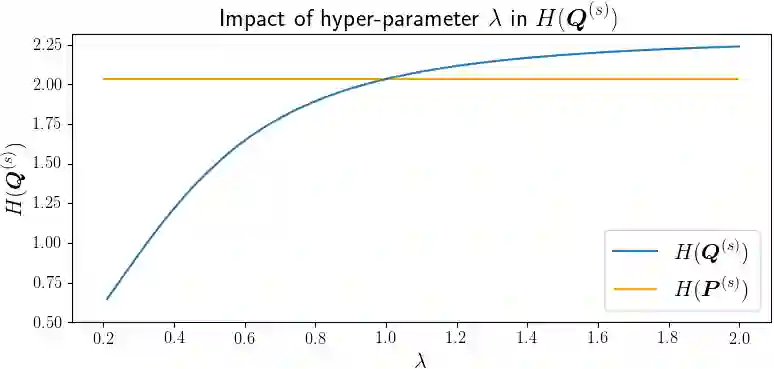

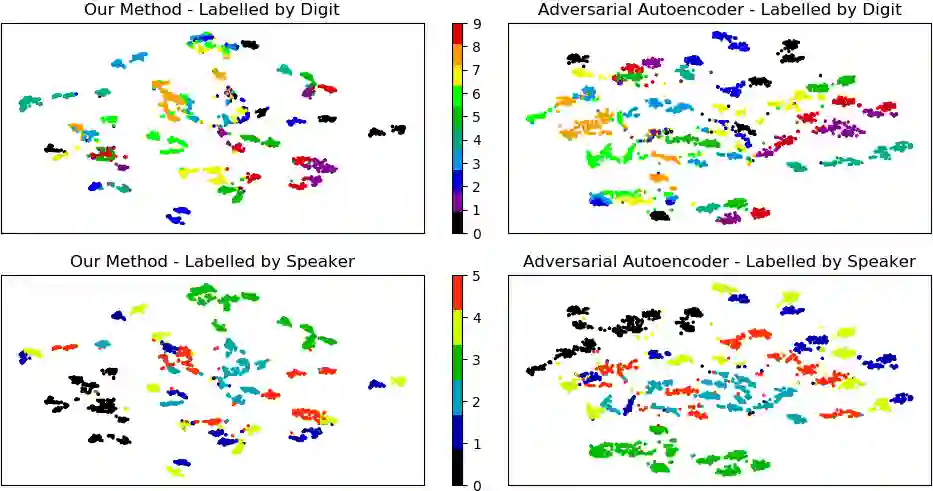

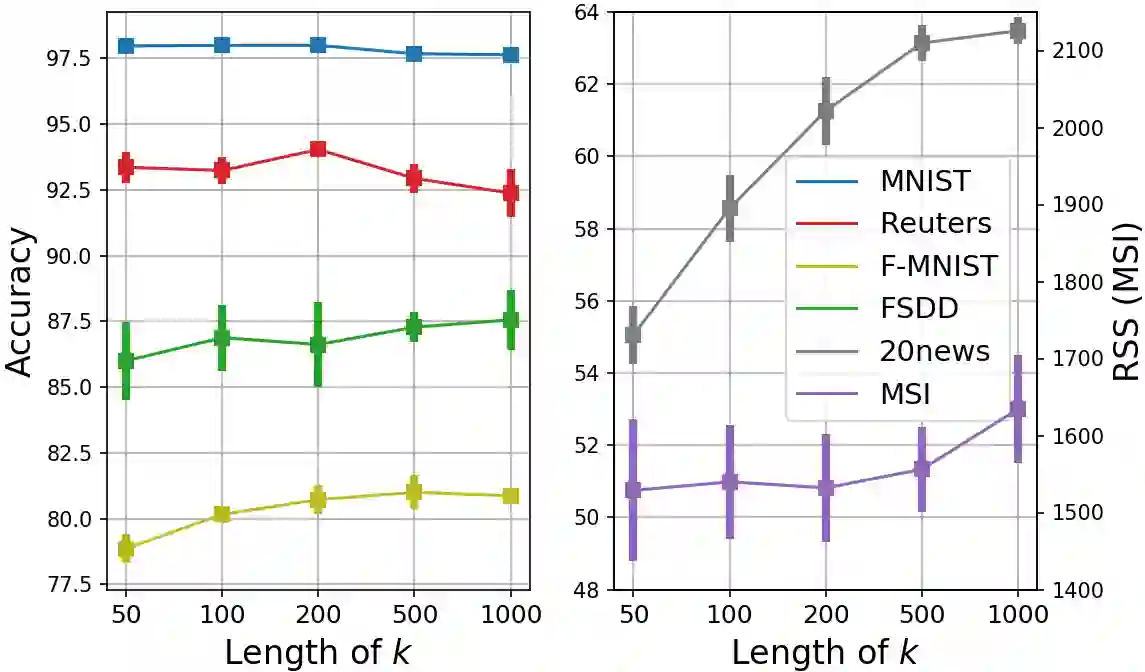

Self-supervised learning (SSL) has become a popular method for learning invariant representations without human annotations. However, the desired invariance is obtained by applying predefined online transformation functions (data augmentations) to the input data. As a result, each SSL framework is customised for a particular data type, e.g., visual data, and must be further modified before it can be applied to other types of data. The autoencoder (AE), on the other hand, is a generic and widely applicable framework, but it focuses mainly on dimensionality reduction and is not suited to learning invariant representations. This paper proposes a generic SSL framework based on a constrained self-labelling assignment process that prevents degenerate solutions. Specifically, the predefined transformation functions are replaced with a self-transformation mechanism, learned through unsupervised adversarial training, that imposes invariance on the representations. With this mechanism, pairs of augmented instances can be generated from the same input data. Finally, a training objective based on contrastive learning is designed that leverages both the self-labelling assignment and the self-transformation mechanism. Although the self-transformation process is very generic, the proposed training strategy outperforms most state-of-the-art representation learning methods based on AE structures. To validate the method, we conduct experiments on four types of data, namely visual, audio, text, and mass spectrometry data, and evaluate the results using four quantitative metrics. The comparison results indicate that the proposed method is robust and successfully identifies patterns within the datasets.
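
The abstract does not say how the self-labelling constraint is enforced. A common choice in self-labelling methods such as SeLa and SwAV is an equipartition constraint: the N samples must be spread evenly over the K pseudo-labels, and the constrained assignment is solved approximately with the Sinkhorn-Knopp algorithm. The Python sketch below assumes that choice to show why such a constraint prevents the degenerate solution in which every sample receives the same label, and pairs it with a SwAV-style swapped cross-entropy as a stand-in for the contrastive objective. The names `sinkhorn_assign` and `swapped_loss`, the parameters `epsilon`, `n_iters` and `temperature`, and the toy scores are hypothetical. The adversarially learned self-transformation is not modelled, so the second view's scores are simply given. This is an illustration of the general technique, not the paper's implementation.

```python
import math
from typing import List

Matrix = List[List[float]]


def sinkhorn_assign(scores: Matrix, epsilon: float = 0.05, n_iters: int = 50) -> Matrix:
    """Constrained soft self-labels for N samples over K pseudo-labels.

    Assumption (not from the paper): the constraint is an equipartition, i.e.
    each pseudo-label receives N/K samples in expectation, solved with
    Sinkhorn-Knopp as in SeLa/SwAV. This rules out the degenerate solution
    in which every sample gets the same label.
    """
    n, k = len(scores), len(scores[0])
    # Subtracting each row's max is safe: the row normalisation below cancels
    # any per-row constant, and it keeps exp() from overflowing.
    q = [[math.exp((s - max(row)) / epsilon) for s in row] for row in scores]
    for _ in range(n_iters):
        # Column step: each pseudo-label receives total mass 1/K.
        for j in range(k):
            col = max(sum(q[i][j] for i in range(n)), 1e-300)  # guard against underflow
            for i in range(n):
                q[i][j] /= col * k
        # Row step: each sample carries total mass 1/N.
        for i in range(n):
            row = sum(q[i])
            q[i] = [v / (row * n) for v in q[i]]
    # Scale by N so that every row is a probability distribution over pseudo-labels.
    return [[v * n for v in row] for row in q]


def swapped_loss(q_a: Matrix, scores_b: Matrix, temperature: float = 0.1) -> float:
    """Cross-entropy between view A's self-labels and view B's predictions.

    Assumption: a SwAV-style swapped-prediction term stands in for the
    paper's contrastive objective, which the abstract does not specify.
    """
    total = 0.0
    for q_row, s_row in zip(q_a, scores_b):
        z = [s / temperature for s in s_row]
        z_max = max(z)
        log_norm = z_max + math.log(sum(math.exp(v - z_max) for v in z))  # log-sum-exp
        total -= sum(qv * (v - log_norm) for qv, v in zip(q_row, z))
    return total / len(q_a)


# Toy example: every sample mildly prefers pseudo-label 0, so a plain argmax
# would collapse all four samples onto a single label.
scores = [[1.0, 0.0], [0.9, 0.0], [0.8, 0.0], [0.7, 0.0]]
q = sinkhorn_assign(scores)
labels = [max(range(len(r)), key=r.__getitem__) for r in q]
print(labels)  # [0, 0, 1, 1]

# Hypothetical scores for a second (transformed) view of the same samples.
view_b = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
print(swapped_loss(q, view_b))
```

Under the equipartition constraint the four samples are split two and two, with the two samples that prefer label 0 most strongly keeping it. Lowering `epsilon` weakens the entropic smoothing and pushes the soft assignments closer to hard labels.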

翻译:自我监督的学习(SSL)已成为一种流行的方法,用于生成不需人工说明的变异表达方式。然而,理想的变异表达方式是通过在输入数据上使用先前的在线转换功能来实现的。因此,每个 SSL 框架为某一特定数据类型定制,例如视觉数据,如果用于其他数据集类型,则需要进一步修改。另一方面,自动编码器(AE)是一个通用和广泛适用的框架,主要侧重于尺寸减少,不适于学习变异表达方式。本文提出一个通用的 SSL 框架,其基础是有限的自我标签任务分配过程,防止了退化的解决方案。具体地说,以前的变异功能被一个自变机制所定制,通过未经监督的对抗性培训过程,将它用于其他数据集类型。 自我转换机制,从相同的输入数据数据中生成各种强化实例。最后,一个基于对比学习设计的培训目标,是利用自我标签任务内部的自我标签和比较性质量分配的指定格式,也就是基于我们提出的四种变异性数据结构的自我转换方法, 显示我们提出的四种数据格式的自我转换方法。