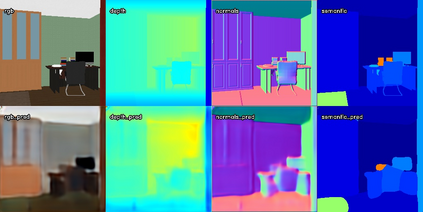

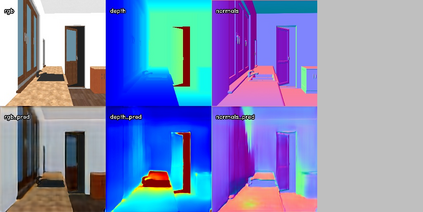

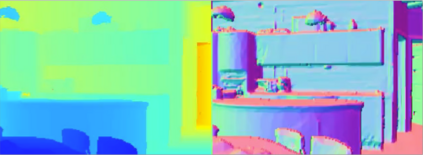

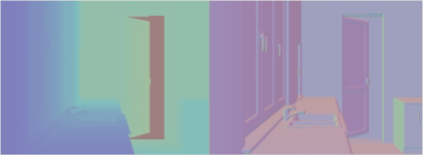

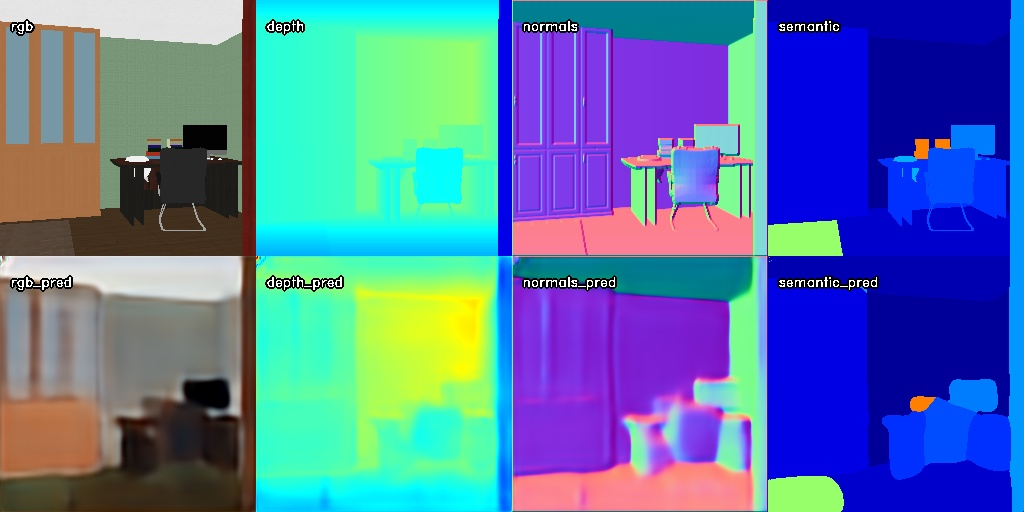

We propose SplitNet, a method for decoupling visual perception and policy learning. By incorporating auxiliary tasks and selective learning of portions of the model, we explicitly decompose the learning objectives for visual navigation into perceiving the world and acting on that perception. We show dramatic improvements over baseline models when transferring between simulators, an encouraging step towards Sim2Real. Additionally, SplitNet generalizes better to unseen environments from the same simulator and transfers faster and more effectively to novel embodied navigation tasks. Further, given only a small sample from a target domain, SplitNet can match the performance of traditional end-to-end pipelines that receive the entire dataset. Code and video are available at https://github.com/facebookresearch/splitnet and https://youtu.be/TJkZcsD2vrc.
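
The abstract states the method only at a high level. As a rough illustration, the toy Python sketch below (not the SplitNet code) mimics the decoupling under stated assumptions. A linear "visual encoder" is supervised only by an auxiliary target, here a hypothetical scalar `depth`. A linear "policy" is trained on the encoder's features with those features treated as constants, and a `trainable` flag freezes a module. Transfer to a second "simulator" is imitated by changing how the same world state is rendered into observations, then re-fitting only the encoder on a small target sample while the policy stays frozen. Linear modules, squared-error losses, plain SGD, and expert action labels in place of reinforcement learning are all simplifying assumptions. Every name (`Linear`, `train`, `make_data`, `render_source`, `render_target`, `policy_error`) is hypothetical.

```python
"""Toy sketch (hypothetical, not the SplitNet implementation) of decoupling
perception from policy: the encoder learns only from an auxiliary target,
the policy learns only from action labels on top of the encoder's features."""
import random


class Linear:
    """y = w . x + b; SGD updates are skipped when trainable is False."""

    def __init__(self, n_in, rng):
        self.w = [rng.uniform(-0.1, 0.1) for _ in range(n_in)]
        self.b = 0.0
        self.trainable = True

    def __call__(self, x):
        return sum(wi * xi for wi, xi in zip(self.w, x)) + self.b

    def sgd_step(self, x, grad_out, lr):
        # grad_out = dLoss/dy; dy/dw_i = x_i and dy/db = 1.
        if not self.trainable:
            return
        self.w = [wi - lr * grad_out * xi for wi, xi in zip(self.w, x)]
        self.b -= lr * grad_out


def render_source(s):
    # Assumed "source simulator": the observation equals the world state.
    return list(s)


def render_target(s):
    # Assumed "target simulator": same world, different appearance.
    return [0.5 * s[0], -s[1], s[2]]


def make_data(n, rng, render):
    """Samples (observation, depth, expert_action); all quantities are made up."""
    data = []
    for _ in range(n):
        s = [rng.uniform(-1.0, 1.0) for _ in range(3)]
        depth = 2.0 * s[0] - s[1]  # auxiliary perception target
        data.append((render(s), depth, 3.0 * depth + 1.0))
    return data


def train(enc, pol, data, lr=0.05, epochs=200):
    for _ in range(epochs):
        for x, depth, action in data:
            f = enc(x)
            # Auxiliary loss (f - depth)^2 updates only the encoder.
            enc.sgd_step(x, 2.0 * (f - depth), lr)
            # Policy loss (a - action)^2 updates only the policy; f is treated
            # as a constant input, so no policy gradient reaches the encoder.
            a = pol([f])
            pol.sgd_step([f], 2.0 * (a - action), lr)


def policy_error(enc, pol, data):
    """Mean absolute gap between predicted and expert actions."""
    return sum(abs(pol([enc(x)]) - action) for x, _, action in data) / len(data)


def main():
    rng = random.Random(0)
    enc, pol = Linear(3, rng), Linear(1, rng)
    train(enc, pol, make_data(50, rng, render_source))
    target_test = make_data(200, rng, render_target)
    before = policy_error(enc, pol, target_test)
    # Transfer: freeze the policy, re-fit only the encoder on a small target sample.
    pol.trainable = False
    train(enc, pol, make_data(20, rng, render_target), epochs=500)
    after = policy_error(enc, pol, target_test)
    return before, after


if __name__ == "__main__":
    print(main())
```

In this toy setup, `main()` returns the policy's mean action error on the target rendering before and after encoder-only adaptation. The first value is large because the frozen source encoder misreads the new observations; the second is close to zero because the re-fitted encoder recovers `depth` and the unchanged policy can act on it.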

翻译:我们提出SplitNet,这是将视觉感知和政策学习脱钩的一种方法。我们把辅助任务和有选择地学习模型的某些部分纳入其中,我们明确地将视觉导航的学习目标分解为对世界的感知并据此采取行动。我们展示了在模拟器之间转移的基准模型上的巨大改进,这是向Sim2Real迈出的令人鼓舞的一步。此外,SlitNet从同一个模拟器向看不见环境的更好概括,并更快和更有效地向新颖的体现导航任务转移。此外,由于目标域只有少量的样本,SlipNet可以匹配接收整个数据集的传统端到端管道的性能。代码和视频可在https://github.com/facebourseearch/splitnet和https://youtu.be/TJkZsD2vrc上查阅。