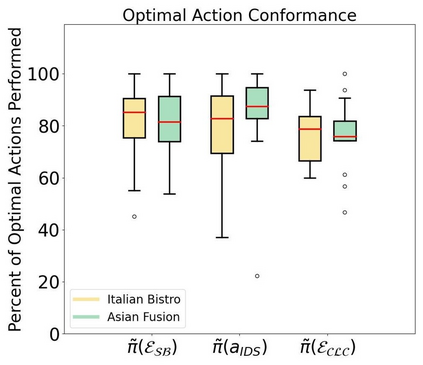

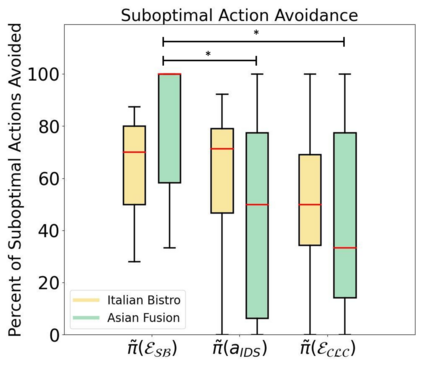

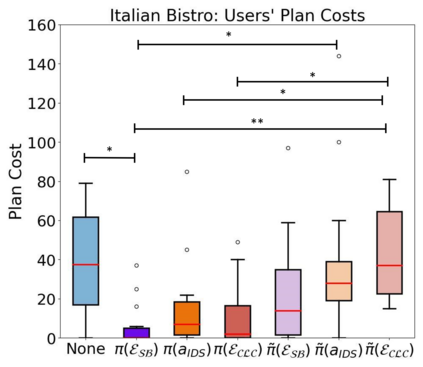

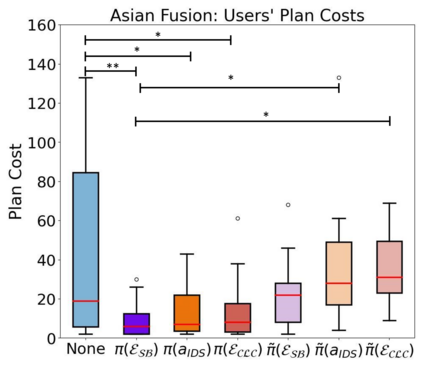

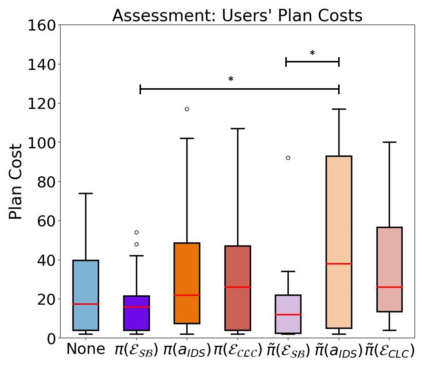

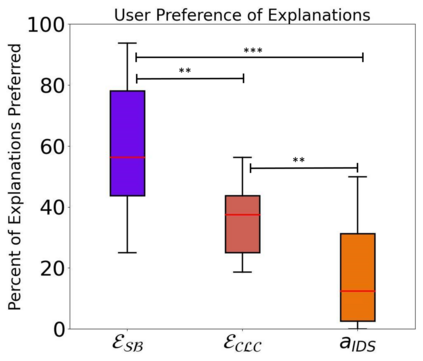

Intelligent decision support (IDS) systems leverage artificial intelligence techniques to generate recommendations that guide human users through the decision-making phases of a task. However, a key challenge is that IDS systems are not perfect: in complex real-world scenarios they may produce incorrect output or fail to work altogether. The field of explainable AI planning (XAIP) has sought to develop techniques that make the decisions of sequential decision-making AI systems more explainable to end users. Critically, prior work applying XAIP techniques to IDS systems has assumed that the plan proposed by the planner is always optimal, and therefore that the action or plan recommended to the user as decision support is always correct. In this work, we examine novice user interactions with a non-robust IDS system: one that occasionally recommends the wrong action and that may become unavailable after users have become accustomed to its guidance. We introduce a novel explanation type for planning-based IDS systems, subgoal-based explanations, which supplement traditional IDS output with information about the subgoal toward which the recommended action would contribute. We demonstrate that subgoal-based explanations improve user task performance, improve users' ability to distinguish optimal from suboptimal IDS recommendations, are preferred by users, and enable more robust user performance in the case of IDS failure.
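The abstract does not say how the subgoal attached to a recommendation is computed. The following is a minimal sketch of one plausible rule, not the authors' method. It assumes a STRIPS-style planning model in which the planner's remaining plan and an ordered list of subgoals (each a set of facts) are available. The rule simulates the plan from the current state and reports the first not-yet-achieved subgoal that the plan reaches. The names `Action`, `subgoal_for_next_action`, and `explain`, along with the errand example, are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional

Fact = str
State = FrozenSet[Fact]


@dataclass(frozen=True)
class Action:
    """Hypothetical STRIPS-style action: facts it adds and facts it deletes."""
    name: str
    add: FrozenSet[Fact] = frozenset()
    delete: FrozenSet[Fact] = frozenset()


def apply(state: State, action: Action) -> State:
    # Standard STRIPS progression: remove delete effects, then add add effects.
    return (state - action.delete) | action.add


def subgoal_for_next_action(
    state: State, plan: List[Action], subgoals: List[State]
) -> Optional[State]:
    """Return the first subgoal not already true in `state` that becomes true
    while executing `plan`; the plan's first action is taken to contribute to it."""
    pending = [g for g in subgoals if not g <= state]
    current = state
    for action in plan:
        current = apply(current, action)
        for g in pending:  # subgoals are checked in their given order
            if g <= current:
                return g
    return None


def explain(state: State, plan: List[Action], subgoals: List[State]) -> str:
    """Build a recommendation string, supplemented with a subgoal when one is found."""
    if not plan:
        return "No action recommended."
    text = f"Recommended action: {plan[0].name}"
    goal = subgoal_for_next_action(state, plan, subgoals)
    if goal is None:
        return text
    return f"{text} (works toward subgoal: {', '.join(sorted(goal))})"


# Hypothetical errand example.
go_store = Action("go_store", add=frozenset({"at_store"}), delete=frozenset({"at_home"}))
buy_flour = Action("buy_flour", add=frozenset({"have_flour"}))
go_home = Action("go_home", add=frozenset({"at_home"}), delete=frozenset({"at_store"}))
bake = Action("bake", add=frozenset({"have_cake"}))

plan = [go_store, buy_flour, go_home, bake]
subgoals = [frozenset({"have_flour"}), frozenset({"have_cake"})]
print(explain(frozenset({"at_home"}), plan, subgoals))
# prints "Recommended action: go_store (works toward subgoal: have_flour)"
```

Attributing the first action to the earliest subgoal the plan reaches is only a design assumption made for illustration. A real planning-based IDS might instead derive subgoals from landmarks or from a hierarchical task decomposition.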
