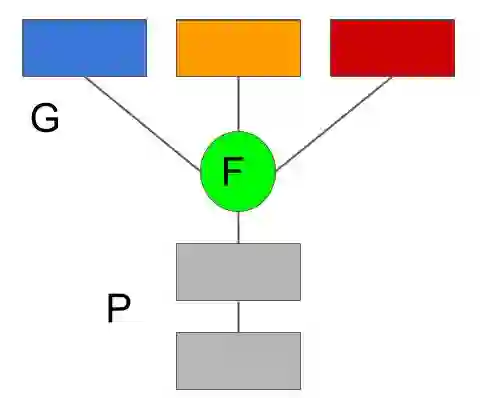

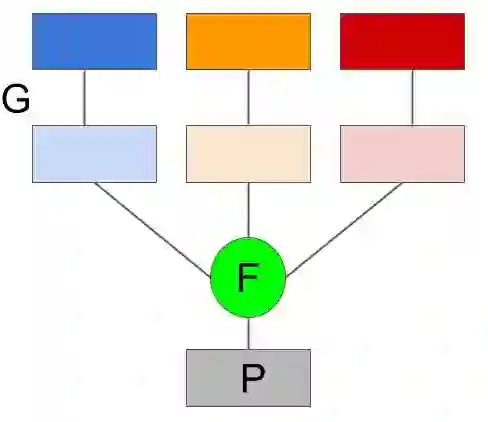

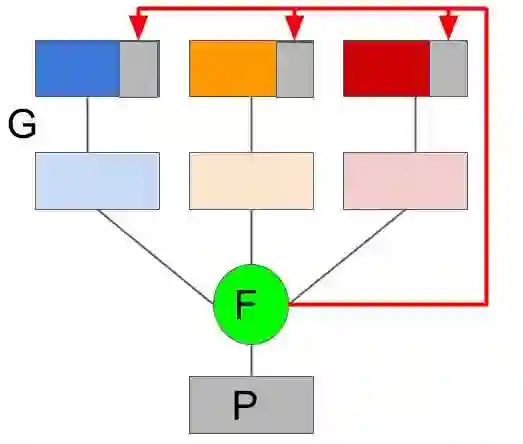

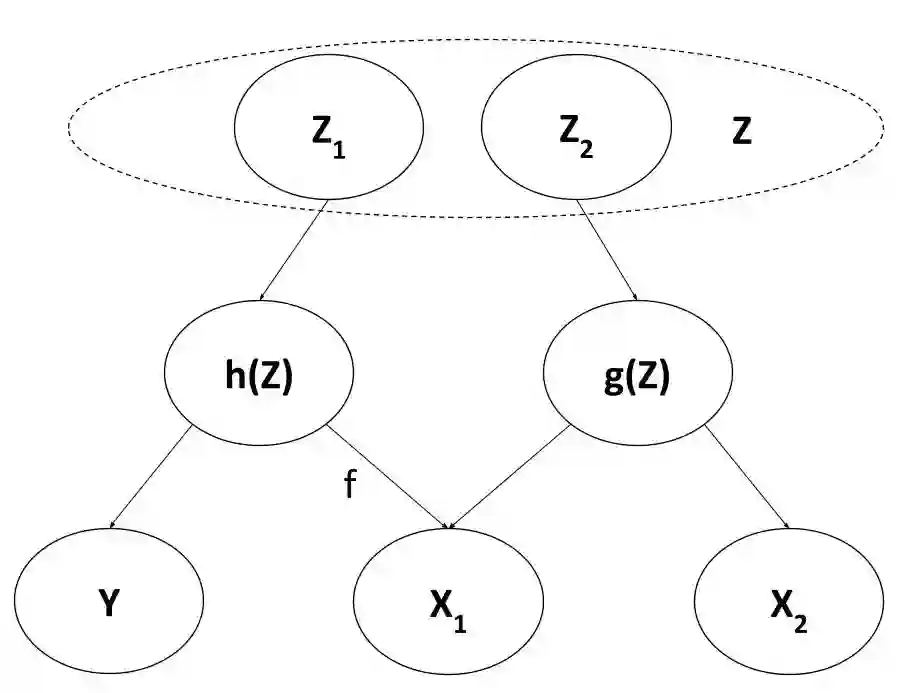

Integrating multimodal information from various sources has been shown to boost the performance of machine learning models and has therefore received increased attention in recent years. Such models often use deep modality-specific networks to obtain unimodal features, which are then combined into "late-fusion" representations. However, these designs risk losing information in the respective unimodal pipelines. On the other hand, "early-fusion" methodologies, which combine features early, suffer from the problems associated with feature heterogeneity and high sample complexity. In this work, we present an iterative representation refinement approach, called Progressive Fusion, which mitigates the issues with late-fusion representations. Our model-agnostic technique introduces backward connections that make late-stage fused representations available to early layers, improving the expressiveness of the representations at those stages while retaining the advantages of late-fusion designs. We test Progressive Fusion on tasks including affective sentiment detection, multimedia analysis, and time series fusion with different models, demonstrating its versatility. We show that our approach consistently improves performance, for instance attaining a 5% reduction in MSE and a 40% improvement in robustness on multimodal time series prediction.
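
The backward-connection idea can be illustrated with a minimal sketch. It is not the paper's implementation: the abstract does not say how the fused representation is injected into the early layers, so this sketch assumes it is simply concatenated to each modality's input. The single-layer tanh encoders, the concatenate-then-project fusion step, and all names (`linear`, `init_weights`, `progressive_fusion`) are likewise hypothetical choices made for illustration.

```python
import math
import random


def linear(weights, x):
    """Apply a dense layer with tanh activation: tanh(W @ x)."""
    return [math.tanh(sum(w * v for w, v in zip(row, x))) for row in weights]


def init_weights(rows, cols, rng):
    """Small random weight matrix (illustrative; no training happens here)."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def progressive_fusion(inputs, encoders, fusion, fused_dim, steps):
    """Iteratively refine a late-fusion representation.

    inputs:   list of per-modality input vectors
    encoders: one weight matrix per modality, applied to [input ; fused context]
    fusion:   weight matrix mapping concatenated unimodal features to fused_dim
    """
    fused = [0.0] * fused_dim  # no context is available before the first pass
    history = []
    for _ in range(steps):
        # Backward connection (assumed form): each unimodal encoder also
        # receives the fused vector produced by the previous pass.
        features = [linear(w, x + fused) for w, x in zip(encoders, inputs)]
        # Late fusion: concatenate the unimodal features, then project.
        concat = [v for f in features for v in f]
        fused = linear(fusion, concat)
        history.append(fused)
    return fused, history


# Toy example with two made-up modalities.
rng = random.Random(0)
audio, text = [0.2, -0.1, 0.4], [1.0, 0.5]
fused_dim, hidden = 4, 3
encoders = [
    init_weights(hidden, len(audio) + fused_dim, rng),
    init_weights(hidden, len(text) + fused_dim, rng),
]
fusion = init_weights(fused_dim, 2 * hidden, rng)
fused, history = progressive_fusion(
    [audio, text], encoders, fusion, fused_dim, steps=3
)
```

With `steps=1` the context vector is all zeros, so the sketch reduces to an ordinary late-fusion forward pass. Each further step lets the unimodal encoders condition on the previous fused output, which is the refinement loop the abstract describes.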
