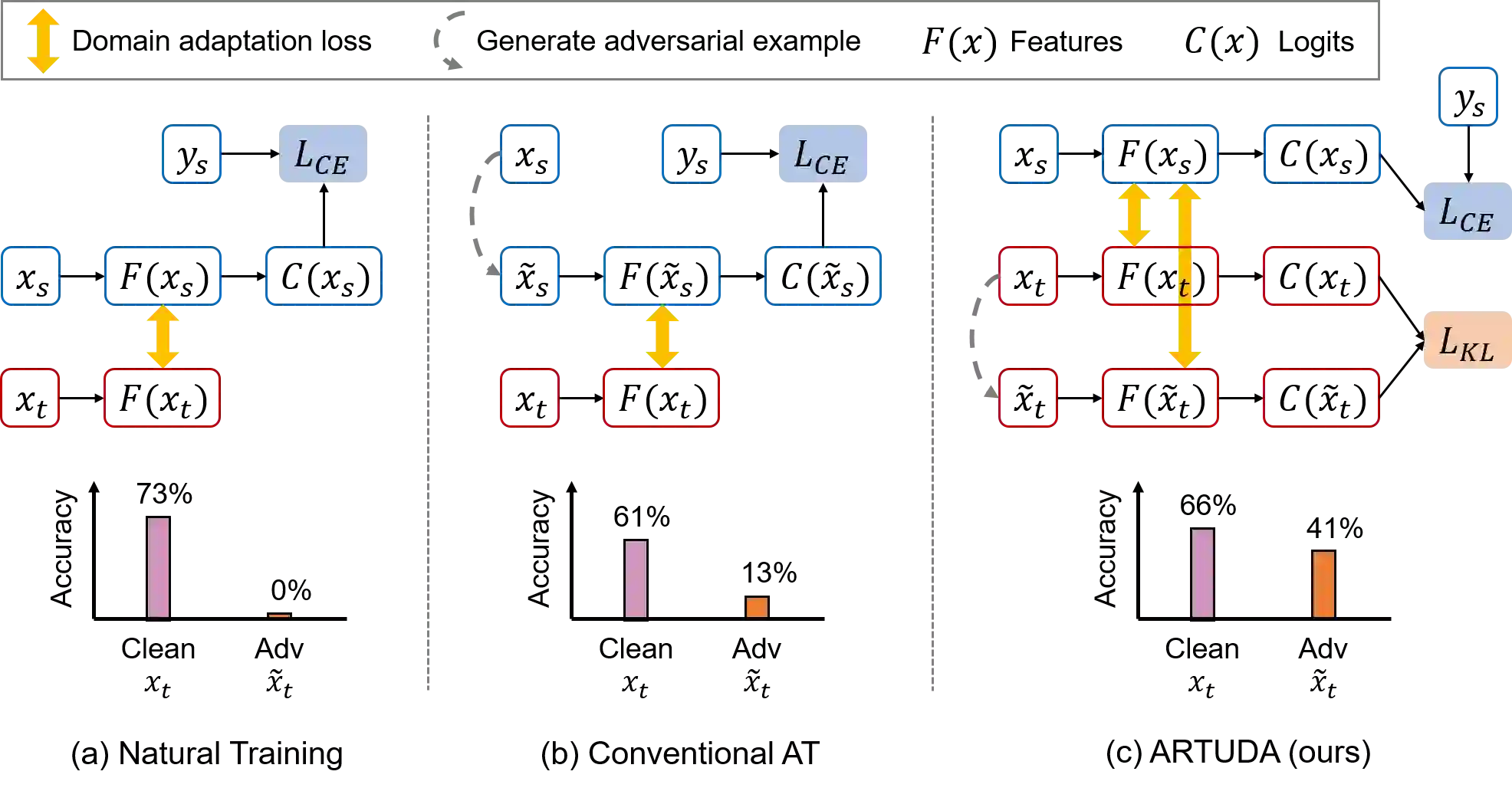

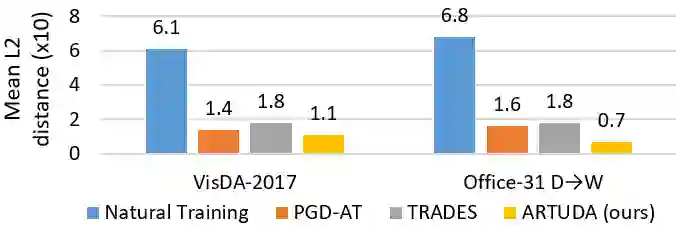

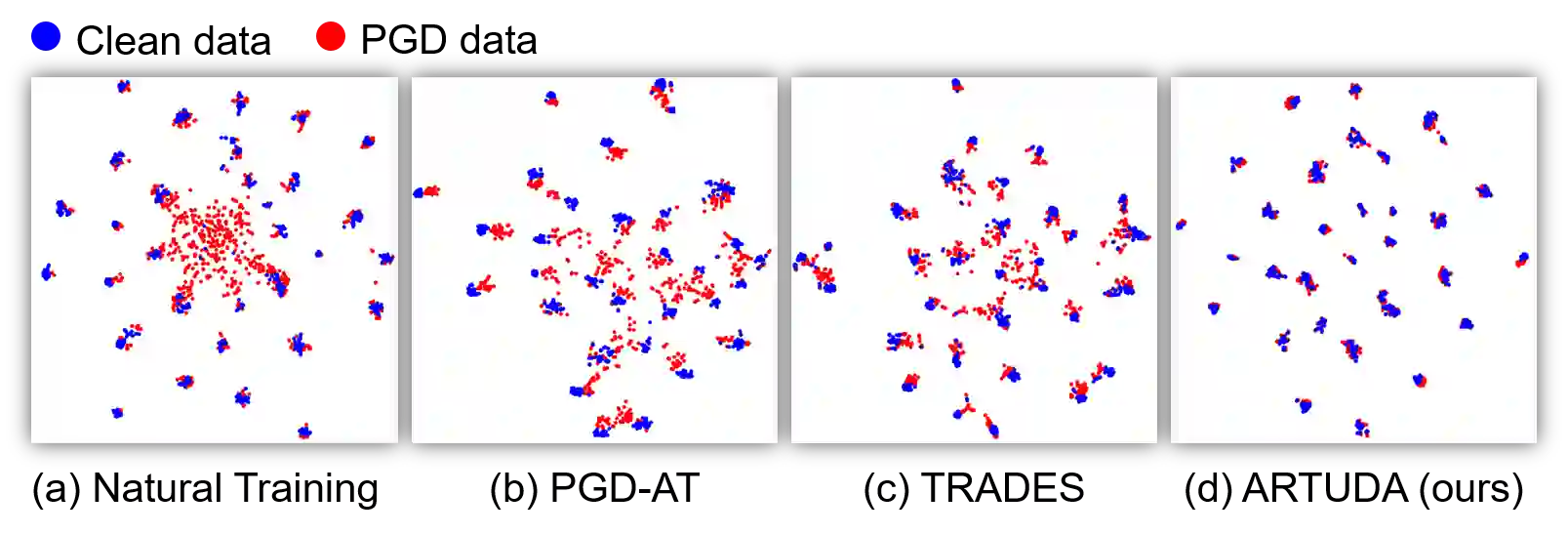

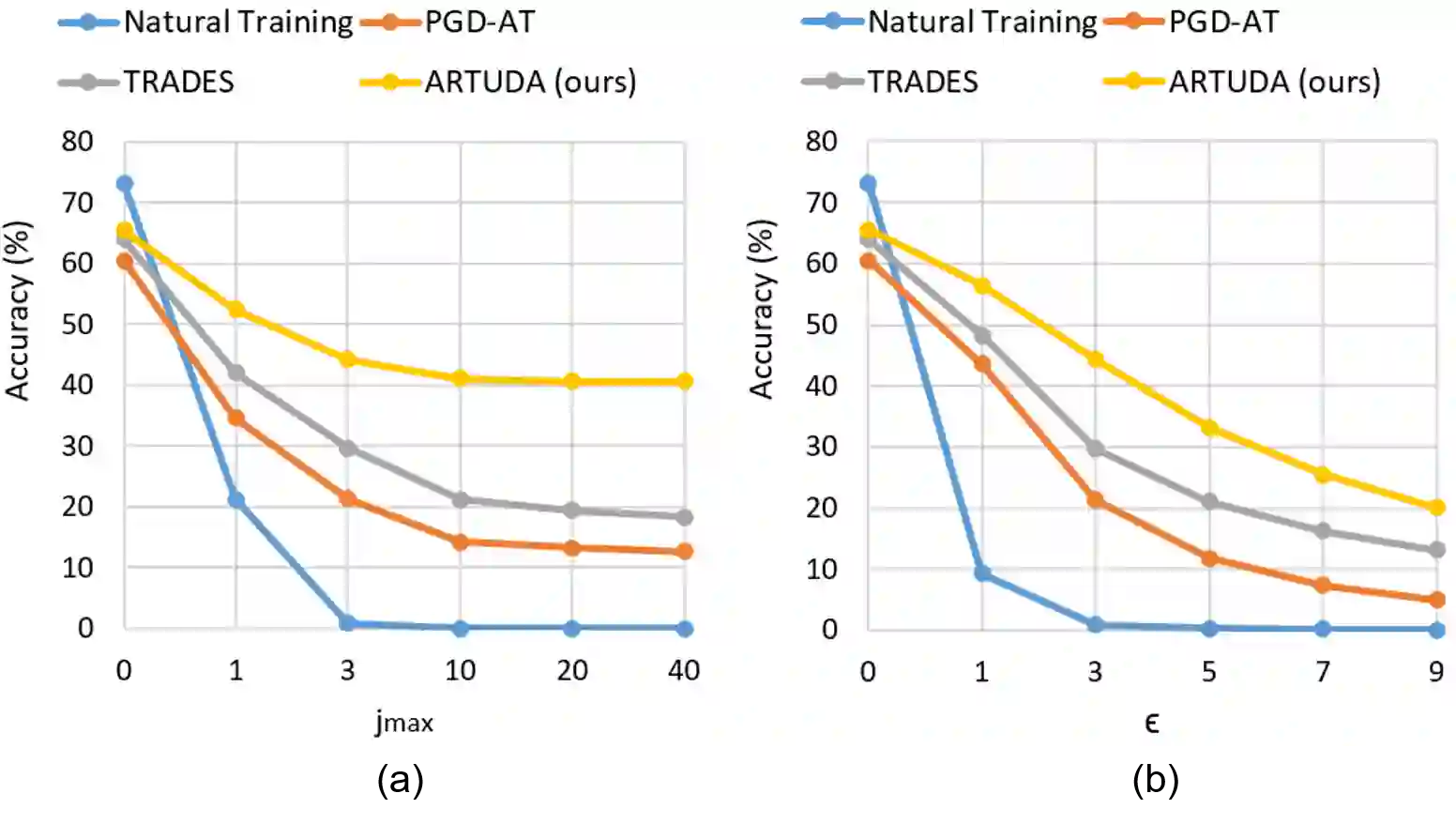

Unsupervised Domain Adaptation (UDA) methods aim to transfer knowledge from a labeled source domain to an unlabeled target domain. UDA has been extensively studied in the computer vision literature. Deep networks have been shown to be vulnerable to adversarial attacks. However, very little focus is devoted to improving the adversarial robustness of deep UDA models, causing serious concerns about model reliability. Adversarial Training (AT) has been considered to be the most successful adversarial defense approach. Nevertheless, conventional AT requires ground-truth labels to generate adversarial examples and train models, which limits its effectiveness in the unlabeled target domain. In this paper, we aim to explore AT to robustify UDA models: How to enhance the unlabeled data robustness via AT while learning domain-invariant features for UDA? To answer this, we provide a systematic study into multiple AT variants that potentially apply to UDA. Moreover, we propose a novel Adversarially Robust Training method for UDA accordingly, referred to as ARTUDA. Extensive experiments on multiple attacks and benchmarks show that ARTUDA consistently improves the adversarial robustness of UDA models.

翻译:未受监督的域适应(UDA)方法旨在将知识从一个标签源域转移到一个未标签的目标域。UDA在计算机视觉文献中已经进行了广泛的研究。深网络已经证明很容易受到对抗性攻击。但是,很少重视提高深度UDA模型的对抗性强力,引起对模型可靠性的严重关切。反向培训(AT)被认为是最成功的对抗性防御方法。然而,常规AT需要地面真实性标签来生成对抗性范例和培训模型,这限制了其在未标签目标域的效力。在本文件中,我们旨在探索ATA以强化UDA模型:如何在学习UDA的域变异特性的同时,通过AT加强未标记的数据的稳健性?对此,我们提供了对可能适用于UDA的多种AT变量的系统研究。此外,我们建议为UDA提出一个新的反向性罗伯斯特培训方法,因此称为ARTUDA。关于多次攻击的广泛实验和基准显示,ARTUDA的模型不断提高UDA的稳健性。