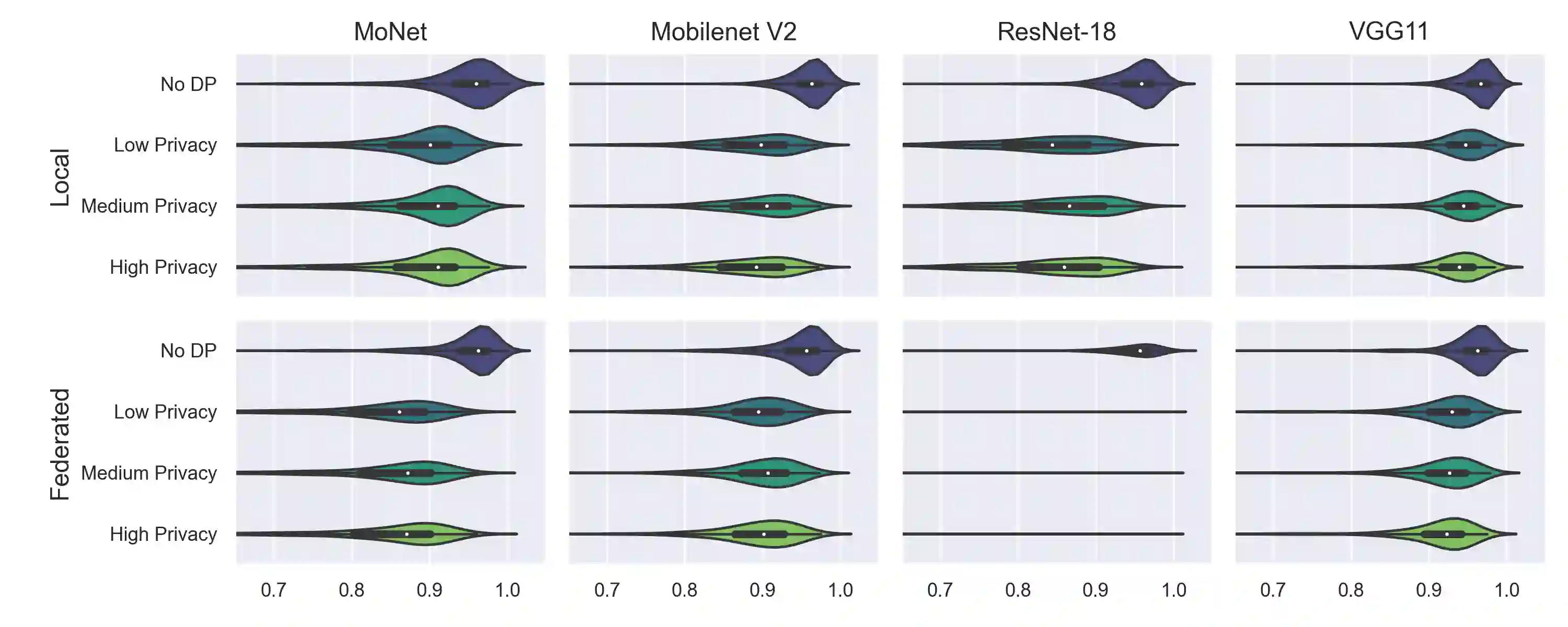

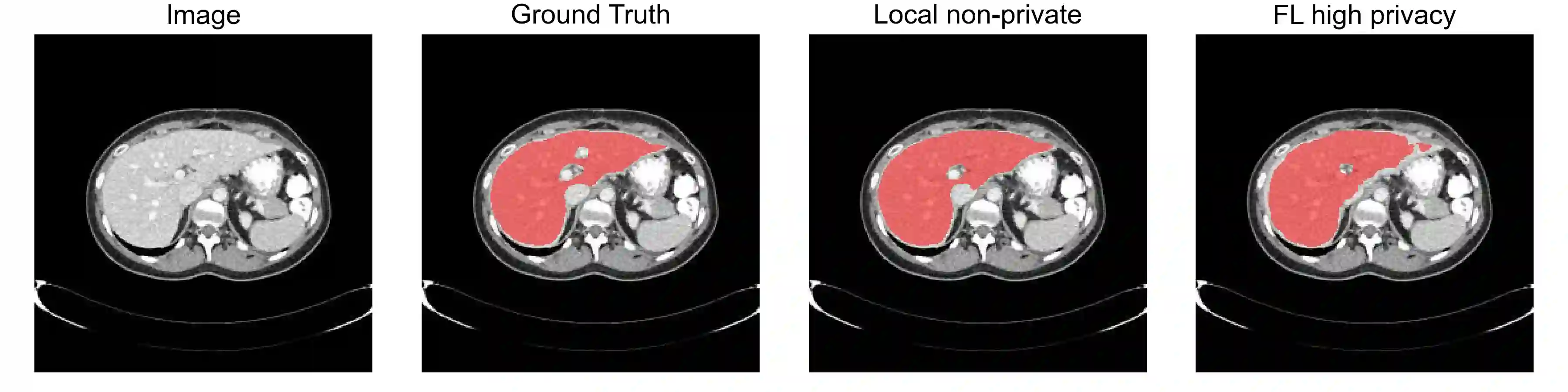

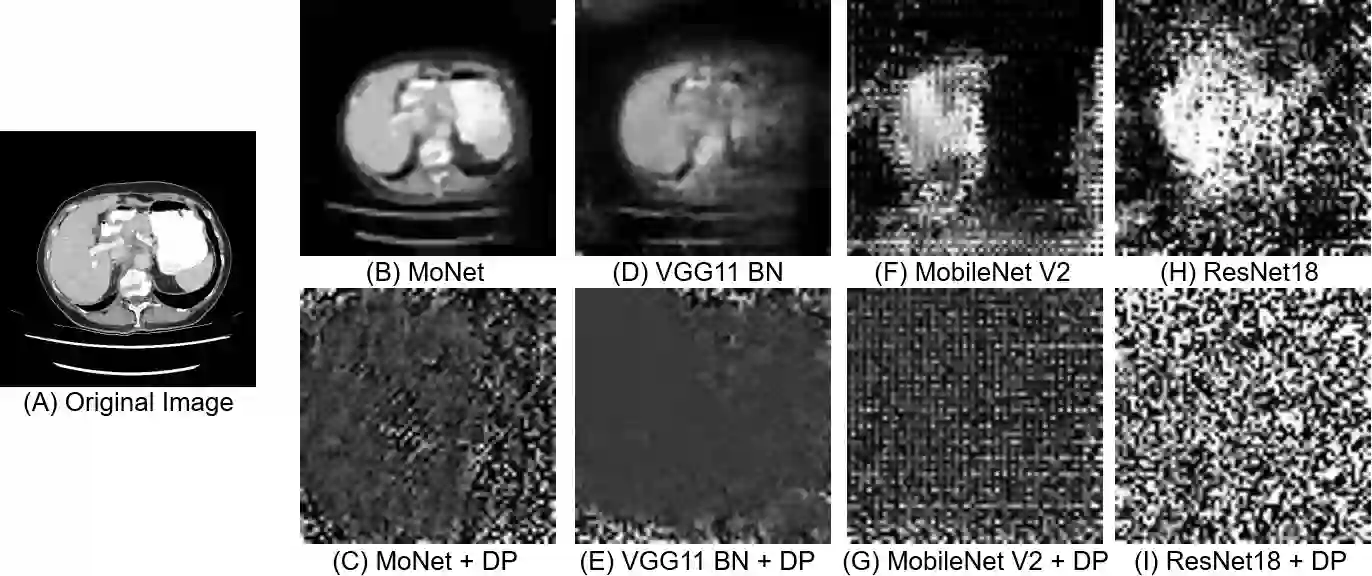

Collaborative machine learning techniques such as federated learning (FL) enable the training of models on effectively larger datasets without data transfer. Recent initiatives have demonstrated that segmentation models trained with FL can achieve performance similar to locally trained models. However, FL is not a fully privacy-preserving technique and privacy-centred attacks can disclose confidential patient data. Thus, supplementing FL with privacy-enhancing technologies (PTs) such as differential privacy (DP) is a requirement for clinical applications in a multi-institutional setting. The application of PTs to FL in medical imaging and the trade-offs between privacy guarantees and model utility, the ramifications on training performance and the susceptibility of the final models to attacks have not yet been conclusively investigated. Here we demonstrate the first application of differentially private gradient descent-based FL on the task of semantic segmentation in computed tomography. We find that high segmentation performance is possible under strong privacy guarantees with an acceptable training time penalty. We furthermore demonstrate the first successful gradient-based model inversion attack on a semantic segmentation model and show that the application of DP prevents it from divulging sensitive image features.
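To make the core mechanism concrete, the following is a minimal sketch of one differentially private gradient step followed by federated averaging. It is not the authors' implementation: the function names (`clip_gradient`, `dp_average_gradient`, `federated_average`), the clipping bound, the noise multiplier and the toy gradients are all illustrative assumptions, and no privacy accounting (tracking of the privacy budget) is performed.

```python
import math
import random


def clip_gradient(grad, max_norm):
    """Scale a per-example gradient so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_average_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip each per-example gradient, sum, add Gaussian noise, then average."""
    dim = len(per_example_grads[0])
    clipped = [clip_gradient(g, max_norm) for g in per_example_grads]
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    # Noise standard deviation scales with the clipping bound (the sensitivity).
    sigma = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [n / len(per_example_grads) for n in noisy]


def federated_average(site_updates):
    """Unweighted mean of the already-privatised updates from each site."""
    dim = len(site_updates[0])
    return [sum(u[i] for u in site_updates) / len(site_updates) for i in range(dim)]


# Hypothetical toy data: two sites, two examples each, two parameters.
rng = random.Random(0)
site_a = dp_average_gradient([[3.0, 4.0], [0.3, 0.4]],
                             max_norm=1.0, noise_multiplier=1.1, rng=rng)
site_b = dp_average_gradient([[0.0, 2.0], [1.0, 0.0]],
                             max_norm=1.0, noise_multiplier=1.1, rng=rng)
global_update = federated_average([site_a, site_b])
```

Clipping bounds the influence of any single example on the update, and the added Gaussian noise masks what remains; only the noisy, averaged update leaves each site. A larger noise multiplier generally gives a stronger privacy guarantee at the cost of model utility, which is the trade-off the abstract refers to.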
