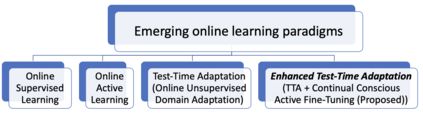

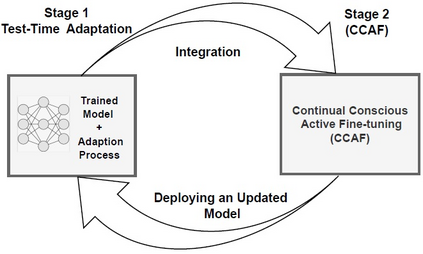

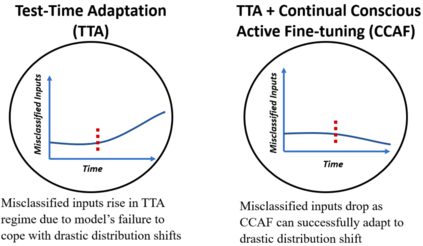

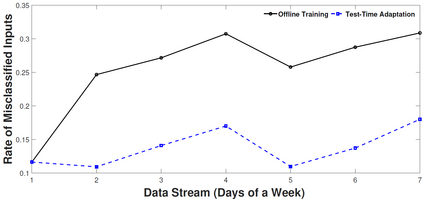

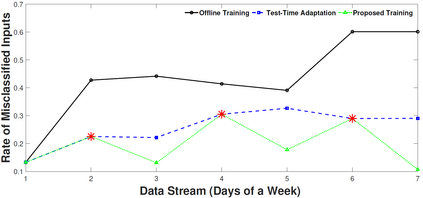

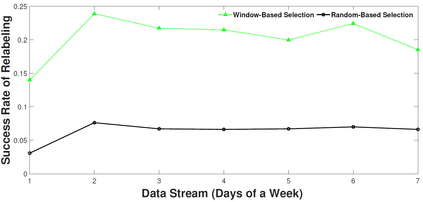

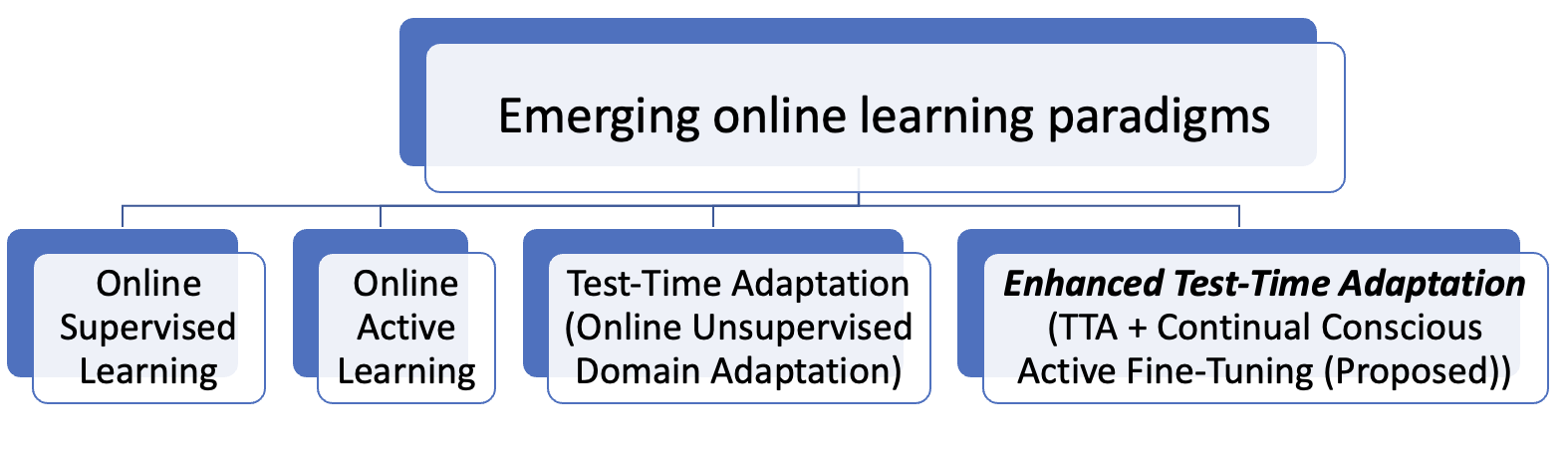

Unlike their traditional offline counterparts, online machine learning models can handle data distribution shifts while serving at test time. However, they have limitations in addressing this phenomenon: they are either expensive or unreliable. We propose augmenting an online learning approach called test-time adaptation with a continual conscious active fine-tuning layer, yielding an enhanced variant that can handle drastic data distribution shifts reliably and cost-effectively. The proposed augmentation has three aspects: a continual aspect, to confront never-ending data distribution shifts; a conscious aspect, meaning that fine-tuning is a distribution-shift-aware process that runs at the appropriate time to address recently detected shifts; and an active aspect, meaning that relabeling relies on human-machine collaboration so that the approach stays cost-effective and practical for diverse applications. Our empirical results show that the enhanced test-time adaptation variant outperforms the traditional variant by a factor of two.
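The abstract describes the mechanism only at a high level, so the following is a toy sketch under our own assumptions, not the authors' implementation. A 1-D nearest-centroid classifier stands in for the deployed model, self-training on pseudo-labels stands in for plain test-time adaptation, a batch-mean threshold stands in for the drift detector ("conscious"), evenly spaced sampling stands in for the query strategy, and a function stands in for the human labeller ("active"). Every name here (`CentroidModel`, `drift_detected`, `select_for_labelling`, `run_stream`) is hypothetical.

```python
from dataclasses import dataclass
from statistics import fmean
from typing import Callable, Sequence


@dataclass
class CentroidModel:
    """Toy 1-D nearest-centroid classifier (hypothetical stand-in for a real model)."""

    centroids: dict[int, float]
    lr: float = 0.1  # step size of the unsupervised test-time update

    def predict(self, x: float) -> int:
        return min(self.centroids, key=lambda c: abs(x - self.centroids[c]))

    def adapt_unsupervised(self, batch: Sequence[float]) -> None:
        # Plain test-time adaptation via self-training: nudge each centroid
        # toward the samples it currently claims (pseudo-labels, no humans).
        for x in batch:
            c = self.predict(x)
            self.centroids[c] += self.lr * (x - self.centroids[c])

    def fine_tune(self, labelled: Sequence[tuple[float, int]]) -> None:
        # Supervised fine-tuning: reset each labelled class's centroid to the
        # mean of its human-labelled samples.
        by_class: dict[int, list[float]] = {}
        for x, y in labelled:
            by_class.setdefault(y, []).append(x)
        for y, xs in by_class.items():
            self.centroids[y] = fmean(xs)


def drift_detected(reference_mean: float, batch: Sequence[float], threshold: float) -> bool:
    # Assumed detector: flag a shift when the batch mean moves past a threshold.
    return abs(fmean(batch) - reference_mean) > threshold


def select_for_labelling(batch: Sequence[float], budget: int) -> list[float]:
    # Assumed query rule: evenly spaced samples from the sorted batch.
    ordered = sorted(batch)
    budget = max(1, min(budget, len(ordered)))
    step = len(ordered) / budget
    return [ordered[int(i * step)] for i in range(budget)]


def run_stream(
    model: CentroidModel,
    batches: Sequence[Sequence[float]],
    oracle: Callable[[float], int],
    budget: int,
    threshold: float,
) -> int:
    """Serve a stream of batches; return how many human labels were requested."""
    reference = fmean(batches[0])
    queries = 0
    for batch in batches:
        if drift_detected(reference, batch, threshold):
            # Conscious + active: fine-tune only after a detected shift,
            # using a small human-labelled subset of the new batch.
            chosen = select_for_labelling(batch, budget)
            model.fine_tune([(x, oracle(x)) for x in chosen])
            queries += len(chosen)
            reference = fmean(batch)  # continual: new regime becomes the reference
        else:
            model.adapt_unsupervised(batch)
    return queries


if __name__ == "__main__":
    stream = [
        [0.0, 1.0, 0.1, 1.1, -0.1, 0.9],  # original regime: classes near 0 and 1
        [5.0, 6.0, 5.1, 6.1, 4.9, 5.9],   # drastic shift: both classes moved by +5
        [5.0, 6.0],
    ]
    human = lambda x: 0 if x < 5.5 else 1  # simulated labeller for the shifted regime

    enhanced = CentroidModel({0: 0.0, 1: 1.0})
    asked = run_stream(enhanced, stream, human, budget=2, threshold=1.0)
    print(asked, enhanced.predict(5.0), enhanced.predict(6.0))  # prints: 2 0 1

    plain = CentroidModel({0: 0.0, 1: 1.0})
    for batch in stream:
        plain.adapt_unsupervised(batch)  # baseline: unsupervised adaptation only
    print(plain.predict(5.0))  # prints: 1 (the true label is 0)
```

In this toy run, plain self-training assigns every shifted sample to whichever centroid happens to be closest and never recovers the class split. The gated variant requests two human labels, only on the batch the detector flags. Routing fine-tuning through the detector is what bounds labelling cost here: labels are requested only when a shift is flagged, and at most `budget` per flag.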
