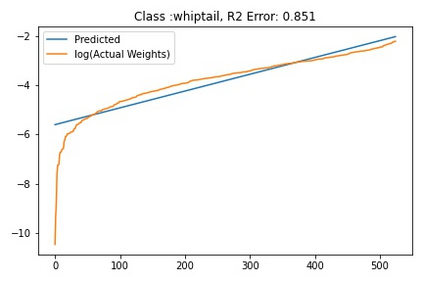

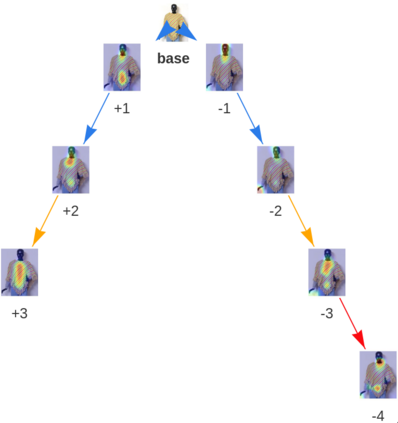

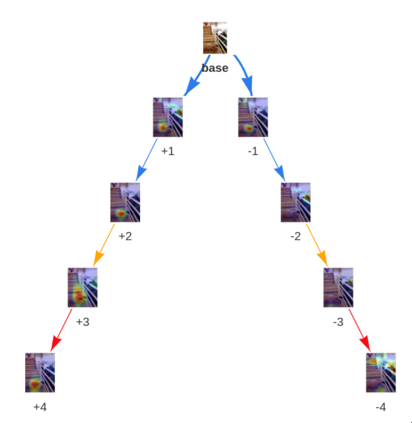

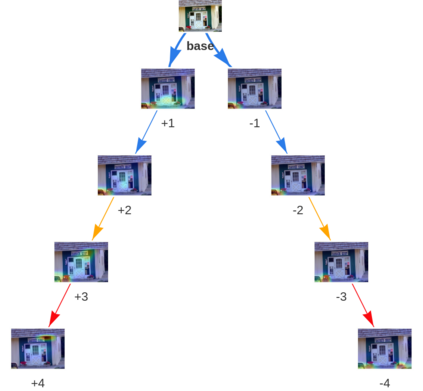

In recent years, neural networks have achieved state-of-the-art accuracy on a variety of tasks, but interpreting their outputs remains difficult. In this work, we attempt to provide a method for understanding the learned weights of the final fully connected layer in image classification models. We motivate the method by drawing a connection between the policy gradient objective in reinforcement learning (RL) and the supervised learning objective: we suggest that the commonly used cross-entropy-based supervised learning objective can be regarded as a special case of the policy gradient objective. Using this insight, we propose a method to find the most discriminative and the most confusing parts of an image. Our method makes no prior assumptions about the neural network architecture and has low computational cost. We apply it to publicly available pre-trained models and report the results.
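The abstract does not spell out how cross-entropy relates to the policy gradient objective. One common reading, offered here as an assumption rather than as the authors' derivation, treats the softmax classifier as a policy over class labels: if the "action" is always the ground-truth label and the reward is fixed at 1, the REINFORCE surrogate loss `-reward * log p[action]` has the same gradient with respect to the logits as the cross-entropy loss. The sketch below checks this numerically in plain Python; the function names and the example logits are illustrative only.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy_grad(logits, label):
    """Gradient of the cross-entropy loss -log p[label] w.r.t. the logits."""
    p = softmax(logits)
    return [p_k - (1.0 if k == label else 0.0) for k, p_k in enumerate(p)]


def reinforce_grad(logits, action, reward):
    """Gradient of the surrogate loss -reward * log p[action] w.r.t. the logits."""
    p = softmax(logits)
    return [-reward * ((1.0 if k == action else 0.0) - p_k)
            for k, p_k in enumerate(p)]


# Hypothetical example: three classes, ground-truth label 0.
logits = [2.0, -1.0, 0.5]
label = 0

ce = cross_entropy_grad(logits, label)
# Assumption: the action is the true label and the reward is 1.
pg = reinforce_grad(logits, action=label, reward=1.0)

grads_match = all(math.isclose(a, b, abs_tol=1e-12) for a, b in zip(ce, pg))
print(grads_match)
```

Under this reading, a reward other than 1 simply rescales the gradient, and sampling the action from the model instead of using the true label recovers the general policy gradient setting.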
