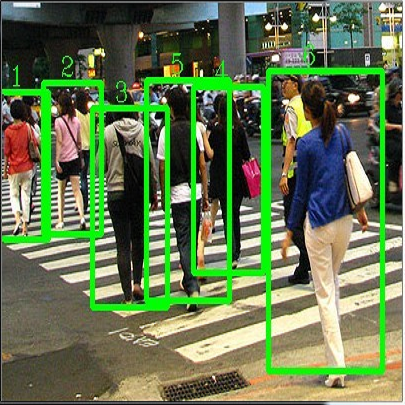

Deep learning-based detectors usually produce a redundant set of object bounding boxes, including many duplicate detections of the same object. These boxes are then filtered with non-maximum suppression (NMS) to select exactly one bounding box per object of interest. This greedy scheme is simple and sufficiently accurate for isolated objects, but it often fails in crowded scenes, where it must preserve boxes of different objects while suppressing duplicate detections. In this work we develop an alternative iterative scheme in which a new subset of objects is detected at each iteration. Boxes detected at previous iterations are passed to the network at subsequent iterations to ensure that the same object is not detected twice. The scheme can be applied to both one-stage and two-stage object detectors with only minor modifications to the training and inference procedures. We perform extensive experiments with two different baseline detectors on four datasets and show significant improvement over the baseline, leading to state-of-the-art performance on the CrowdHuman and WiderPerson datasets. The source code and the trained models are available at https://github.com/saic-vul/iterdet.
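
To make the contrast concrete, the following is a minimal sketch, not the authors' implementation. `greedy_nms` is standard greedy NMS in plain Python. `iterative_detect` only illustrates the control flow of the iterative scheme: the `detect(image, history)` callable is a hypothetical stand-in for a network that receives previously detected boxes, and both the early stop on an empty result and the default iteration count are assumptions made for illustration.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes: Sequence[Box], scores: Sequence[float],
               iou_threshold: float = 0.5) -> List[int]:
    """Return indices of the kept boxes, in order of decreasing score."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap any kept box too strongly.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


def iterative_detect(detect: Callable[[object, List[Box]], List[Box]],
                     image: object, num_iterations: int = 2) -> List[Box]:
    """Accumulate detections over several passes.

    `detect` is a hypothetical callable: given the image and the boxes found
    so far, it should return only boxes of objects not yet detected.
    """
    history: List[Box] = []
    for _ in range(num_iterations):
        new_boxes = detect(image, list(history))
        if not new_boxes:  # assumption: stop early when nothing new is found
            break
        history.extend(new_boxes)
    return history
```

With a single IoU threshold, `greedy_nms` cannot tell a duplicate from a second, heavily overlapping object: both are suppressed alike. The iterative loop avoids this decision by asking the detector for objects that are not already in `history`.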
